I had another crash overnight, same as before, and had to power the server off and on to reboot it. Overnight I had stopped the video LXC with the cameras and GPU "sharing" (not true passthrough). Since it still crashed, that LXC is clearly not the problem. Before I left for work I disconnected the KVM from HDMI and USB on the server.

As I thought, that is from a GUI (unspecified) added by yourself to PVE.

GUI? After the server reboots, it shows a plain-text terminal screen with a login prompt on HDMI (if I connect a monitor to it). There is no GUI or anything custom added to the Proxmox host. As I said, this is a fresh Proxmox installation on a new NVMe disk. Nothing beyond stock is installed on the host.

The GUI itself may have crashed. The NanoKVM may have lost its host connection (in parallel with the GUI crash). How is the NanoKVM powered? Does it reboot/need rebooting after repowering the host? I'd try disconnecting that NanoKVM at least for testing/stability purposes.

The KVM is just that: K(eyboard) V(ideo) M(ouse). It is connected to the server with two cables, one HDMI and one USB (but not for power). The KVM has a separate USB power input that is connected to an external 5V power supply, so it does not get its power from the server. It has no GUI of its own on the host, but since it has Ethernet ports I can connect to the KVM's IP in a browser and it will display the HDMI input it receives from the server. So there is no GUI (like KDE, Gnome, ...) on the Proxmox host.

What do you mean? Tried Ping/SSH from another client to the host? Maybe that unifi-server is misbehaving?

The Proxmox server has, for example, IP 192.168.28.70. After a crash I cannot ping it from anywhere in my network (wireless or wired), or even directly from the router. The network port still shows as up on the router when the server has crashed. This is not related to the Unifi wireless network; the Unifi software just manages the three access points I have around the house and their connected clients.
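To pin down when exactly the host drops off the network, a small watcher can run on any other machine in the LAN. This is only a minimal sketch: it assumes Python 3 is available on that machine, it uses a TCP connect instead of ICMP ping, and it assumes the Proxmox web UI is listening on its default port 8006. The function names `is_reachable` and `watch` are made up for this example.

```python
import socket
import time
from datetime import datetime
from typing import Optional


def is_reachable(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable networks.
        return False


def watch(host: str, port: int, interval: float = 30.0,
          rounds: Optional[int] = None) -> None:
    """Print a timestamped line each time the reachability state changes.

    rounds=None means "run until interrupted"; a number limits the checks.
    """
    last_state = None
    done = 0
    while rounds is None or done < rounds:
        state = is_reachable(host, port)
        if state != last_state:
            stamp = datetime.now().isoformat(timespec="seconds")
            label = "reachable" if state else "UNREACHABLE"
            print(f"{stamp} {host}:{port} {label}")
            last_state = state
        done += 1
        if rounds is None or done < rounds:
            time.sleep(interval)
```

Calling `watch("192.168.28.70", 8006)` and leaving it running overnight would give a timestamp for the moment the host stopped answering, which can then be compared with the last entries in the host's logs after the reboot.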

You wrote earlier, that you have your GUI/browser on the host, so you can access PVE when the NW is down. Does this happen often? To use PVE it is to be assumed that you have a stable enough NW.

My home network is stable. What I meant was that even if the network is down (during router upgrades, for example), I can still connect remotely over the KVM to the server and work in its terminal. The thing is that the server sits in a server cabinet where I do not have a monitor. That is why this small KVM is perfect for me.

So again, the important change is the NVMe drive change. You again fail to disclose which model you are using. Also what prompted you to change the NVMe - maybe you try putting the original back in? (I now see at the end of your post that you are considering this).

Sorry, I did not know that mattered. The stock NVMe drive was an ASRock 512GB. I replaced it with a WD Blue NVMe, 1TB in size.

I'm hoping you don't actually mean apt-get upgrade. This must never be run on Proxmox. Should only be dist-upgrade. Concerning the GUI part - see my point documented further on.

On the LXC servers I have, I do run apt update from time to time to upgrade the packages. There is no dist-upgrade command that I can use.

I don't have direct experience with this, but I can only theorize, that a voltage surge backported across the USB hub etc. could potentially have that effect.

On USB I have my external storage, a Terramaster D2-320, on the USB-C port of my server. On the other USB ports I have an APC UPS (for monitoring), a Google Coral (for Frigate) and a Zigbee controller (passed through to the Home Assistant VM). None of these devices pull much USB power from the server. Maybe only the Google Coral on the USB3 port.

Just ordered one, so I will be able to connect my old NVMe disk. Maybe I can find something on it that will remind me of a change I made years ago that made the server more stable. Low chance, but still.

I now would like to comment on your general setup. It appears you have a highly unconventional (& unsupported) setup:

I appreciate it, but keep in mind that the server was 100 % stable with this setup before the fresh installation. I did not have any reliability issues with the existing setup.

- You have the iGPU passed through to the LXC, yet you still use (actively) the HDMI (of the same iGPU?) on the host. I guess the LXC is only using the renderer. This is probably already a point-of-failure.

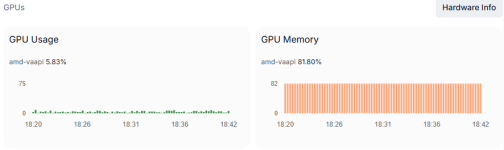

I only use the hardware decoding of the GPU (AMD VAAPI). That has nothing to do with the GPU's HDMI output. It only offloads the CPU by letting the GPU decode the video. All of this happens at the software level, but utilizing the GPU hardware. It is exactly the same as if I were using hardware decoding on the Proxmox host itself or inside a VM.

- You have installed a GUI/Browser on the host. See here that clearly states this is for developmental purposes only & is not supported. Add that to the above passthrough, & I'd be amazed it ever worked on that Mini PC.

I explained that above. There is no GUI/browser on the host. It is a stock Proxmox v9 installation. I just restored the virtual servers from backups that I made before replacing the NVMe.

- You are running docker in an LXC instead of a VM. See here.

Again, there were no issues with that before, and even if it is suggested to move them to a VM, it is still not forbidden or impossible. I just have to enable unprivileged container and set nesting to 1. But if you think this is 100 % the cause of these new crashes, I can set up a VM instead of an LXC for all the LXC servers I have now with docker inside. Which VM OS would you suggest?

- You have unconfined the apparmor profile for this LXC. This is highly unsecure - as you probably already know.

No, I did not know. That config came from the official Frigate documentation and other boards that explained how to share the GPU for HW decoding, or any other USB device, with the LXC container. If you are familiar with this custom LXC config, can you suggest which parts of it are not needed and can be removed?

- You also appear to be running this LXC privileged, coupled with the apparmor unconfined & the running docker to this, & your system is extremely vulnerable.

Vulnerable to attacks from outside? My LXC ports are not open to the outside world. I have a VPN directly to my router and can access them only by their local IP.

- You also have lxc.cap.drop: without any specifications, AFAIK that will give all permissions (which would be otherwise unpermitted) to the root user of that LXC. That is a recipe for havoc on your host.

Is that a stability problem, or again just a possible security issue?
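The three settings raised in the points above (privileged container, unconfined AppArmor profile, empty `lxc.cap.drop:`) can be spotted mechanically in a container config. The following is a rough sketch only: the helper name `check_lxc_config` and the sample config are hypothetical and not taken from the actual container, and the check says nothing about whether any of these settings is related to the crashes.

```python
from typing import List


def check_lxc_config(text: str) -> List[str]:
    """Return warnings for the security-related settings discussed above."""
    warnings = []
    unprivileged = False
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("["):
            # Snapshot sections start here; only the current config is checked.
            break
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition(":")
        key, value = key.strip(), value.strip()
        if key == "unprivileged" and value == "1":
            unprivileged = True
        elif key == "lxc.apparmor.profile" and value == "unconfined":
            warnings.append("AppArmor profile is unconfined")
        elif key == "lxc.cap.drop" and value == "":
            warnings.append("empty lxc.cap.drop clears the dropped-capabilities list")
    if not unprivileged:
        warnings.append("container is privileged (no 'unprivileged: 1' line)")
    return warnings


# Hypothetical sample config, for illustration only.
SAMPLE = """\
arch: amd64
features: nesting=1
hostname: frigate
lxc.apparmor.profile: unconfined
lxc.cap.drop:
lxc.cgroup2.devices.allow: c 226:* rwm
"""

for warning in check_lxc_config(SAMPLE):
    print("WARNING:", warning)
```

On the sample this prints one warning for each of the three settings. Lines such as the device allow rule are deliberately ignored, since the GPU sharing itself depends on them.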

While I wait for the USB NVMe enclosure to arrive, maybe I can try to downgrade the kernel to the version that was used in Proxmox v8. Would it even work on Proxmox v9? If it would, do you know how I can install it?

Thanks a lot for help.