Hello,

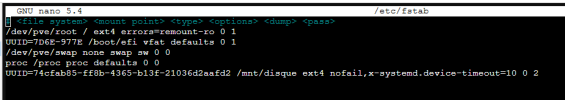

I'm having an issue with my 500 GB external hard drive. I formatted it twice using the ext4 file system with GParted inside a Linux virtual machine running on my PC. The formatting process seems to go smoothly, but after some time, the drive's capacity shows 50 GB instead of the expected 500 GB.

I don't understand why this is happening. Could it be a hardware issue, a partitioning problem, something related to the virtual machine, or the file system itself?

Thanks in advance for your help!
