Hi

@bbgeek17, thanks for your response.

I find the lack of snapshots a concern. I have read that backups are still possible; can someone explain how backups work without snapshots?

For VM backups with Proxmox's native backup functionality or Proxmox Backup Server, QEMU's own snapshot mechanism is used (i.e. at the hypervisor level), which doesn't need any support from the storage backend. I guess Veeam does something similar.

I was playing with Veeam's Proxmox support and it was able to back up a VM that I had on an LVM volume, so clearly backups work without snapshots.

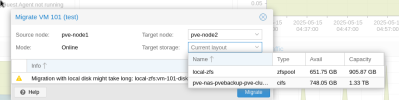

Do you know if you can live-migrate VM disks from shared LVM storage to a local LVM-thin volume and back? If so, this might allow you to take snapshots: move the VM from shared storage to local storage, snapshot it, and when you have finished and removed the snapshot, move it back.

This should be possible; obviously you would need enough local storage space for such an operation.
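A minimal sketch of that move, snapshot, move-back cycle, assuming a single-disk VM and the `qm disk move`, `qm snapshot` and `qm delsnapshot` subcommands (`qm disk move` is also available under the older alias `qm move-disk`). The VM ID, disk name and storage IDs (`san-lvm`, `local-lvm`) are hypothetical placeholders. The helper only builds the command lines and prints them unless `dry_run=False` is passed. If the VM has more than one disk, all of them would need to be on snapshot-capable storage before `qm snapshot` succeeds.

```python
import shlex
import subprocess
from typing import List, Tuple

Command = List[str]


def snapshot_window_cmds(vmid: int, disk: str, shared_storage: str,
                         local_thin_storage: str,
                         snapname: str) -> Tuple[List[Command], List[Command]]:
    """Build the qm commands for a temporary snapshot on local LVM-thin.

    Returns (before, after): run `before` to get a snapshot, do the risky
    work, then run `after` to drop the snapshot and return to shared storage.
    Storage IDs are assumed to match entries in /etc/pve/storage.cfg.
    """
    vm = str(vmid)
    before = [
        # Live-move the disk to snapshot-capable local LVM-thin; "--delete 1"
        # removes the source volume on the shared LVM after the copy.
        ["qm", "disk", "move", vm, disk, local_thin_storage, "--delete", "1"],
        # Take the snapshot now that the disk is on LVM-thin.
        ["qm", "snapshot", vm, snapname],
    ]
    after = [
        # Drop the snapshot first; the shared (thick) LVM storage cannot keep it.
        ["qm", "delsnapshot", vm, snapname],
        # Move the disk back to the shared LVM storage.
        ["qm", "disk", "move", vm, disk, shared_storage, "--delete", "1"],
    ]
    return before, after


def run(cmds: List[Command], dry_run: bool = True) -> None:
    """Print the commands (default) or execute them in order, stopping on error."""
    for cmd in cmds:
        if dry_run:
            print(shlex.join(cmd))
        else:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical VM 100 with disk scsi0 on the shared storage "san-lvm".
    before, after = snapshot_window_cmds(100, "scsi0", "san-lvm",
                                         "local-lvm", "pre-upgrade")
    run(before)
    # ... risky work here; `qm rollback 100 pre-upgrade` would undo it ...
    run(after)
```

Splitting the sequence into a "before" and an "after" list keeps the window in which the disk lives on local storage explicit; the local LVM-thin pool needs enough free space for the whole disk plus snapshot growth during that window.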

Personally, I would go with ZFS (if you don't have hardware RAID), since then you could also replicate the locally stored disk image to the other nodes in your cluster. If your local node fails, you still have a working (although a few minutes older) copy of your VM:

https://pve.proxmox.com/wiki/Storage_Replication

However, ZFS and hardware RAID don't play well together, and storage replication only works with ZFS.
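For the replication route, a job per VM can be created from the CLI with `pvesr create-local-job`. The job ID has the form `<vmid>-<jobnum>`, and the schedule uses the PVE calendar-event syntax (`*/15`, i.e. every 15 minutes, is the default), which roughly bounds how old the replicated copy can be. A minimal Python sketch, assuming a ZFS storage with the same storage ID exists on both nodes; the VM ID and the node name `pve2` are hypothetical, and the helper only builds the command line.

```python
import shlex
from typing import List


def replication_job_cmd(vmid: int, job_num: int, target_node: str,
                        schedule: str = "*/15") -> List[str]:
    """Build a `pvesr create-local-job` command line.

    Job IDs follow the '<vmid>-<jobnum>' pattern; the schedule is a PVE
    calendar event ('*/15' = every 15 minutes, the pvesr default).
    """
    if vmid < 100 or job_num < 0:
        raise ValueError("VM IDs start at 100 and job numbers are non-negative")
    return ["pvesr", "create-local-job", f"{vmid}-{job_num}", target_node,
            "--schedule", schedule]


if __name__ == "__main__":
    # Hypothetical: replicate VM 100 to node "pve2" every 5 minutes.
    print(shlex.join(replication_job_cmd(100, 0, "pve2", "*/5")))
```

A shorter schedule means less data loss if the source node fails, at the cost of more frequent replication traffic.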

I have seen various articles about people using other file systems for shared storage that are not officially supported by Proxmox. OCFS was one example I saw.

Is anyone using an unsupported cluster file system with Proxmox, and if so, which one?

I know that the service provider Heinlein Support uses this for customers who want such functionality:

https://www.heinlein-support.de/sites/default/files/media/documents/2022-03/Proxmox und Storage vom SAN.pdf

There was a discussion in the German forum with their staff member @gurubert:

https://forum.proxmox.com/threads/datacenter-und-oder-cluster-mit-local-storage-only.145189/

In it, he also mentioned that his company is happy to offer its services if anyone wants support with it.

One issue I see is that OCFS is not really under active development and, since it is not officially supported, is seldom used in the Proxmox ecosystem; see here:

> Hey everyone,
>
> I have a problem with OCFS2 shared storage after upgrading one of the cluster nodes from 8.0 to 8.1.
>
> The shared volume was correctly mounted on the upgraded node. VMs could be started but don't work correctly, because the file system inside the VM is in read-only mode.
>
> The error messages in the host's syslog:
>
> ```
> kernel: (kvm,85106,7):ocfs2_dio_end_io:2423 ERROR: Direct IO failed, bytes = -5
> kernel: (kvm,85106,7):ocfs2_dio_end_io:2423 ERROR: Direct IO failed, bytes = -5
> kernel: (kvm,85106,7):ocfs2_dio_end_io:2423 ERROR: Direct IO failed, bytes = -5
> ```
>
> The VM uses VirtIO SCSI as its SCSI controller...

But since Proxmox VE is basically Debian Linux, nobody can or will stop people from using anything that also runs on a normal Debian system, including OCFS.

Here is one more thread on it, although @LnxBil wasn't very happy with OCFS in it:

https://forum.proxmox.com/threads/ocfs2-support.142407/

So my takeaway (although I only use Proxmox VE in my homelab, not at work) is that I would only go with OCFS if I had a service provider (be it Heinlein Support or another company) who will help if any problems arise, and only as a temporary workaround until the storage hardware is next due for renewal. At that point, I would switch to storage that is natively supported by Proxmox VE (be it Ceph, ZFS with storage replication, ZFS over iSCSI, or storage hardware with PVE support such as Blockbridge). The exact choice would obviously depend on the actual needs and environment.