Hi guys

Total new guy here, trying my best, but... well, it's not good enough.

I have an MSA 2040 connected via fiber to my HP DL380 Gen9, with Proxmox installed on the tin.

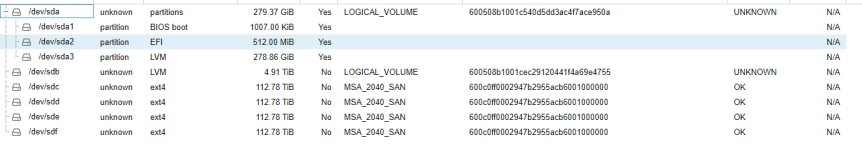

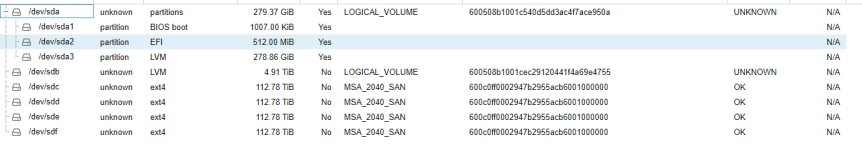

That's what I can see in Proxmox.

I can see all 4 fiber connections, and I have installed multipath.

But I can't add it as an LVM disk, because it says there are no unused disks!

Any help would be awesome, cheers!