Hello Proxmox,

Here's my feedback on the new tool (thanks for building it):

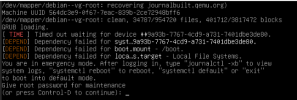

Code:

scsi0: successfully created disk 'vm_nvme:vm-107-disk-0,size=16G'

kvm: -drive file.filename=rbd:vm_nvme/vm-107-disk-0:conf=/etc/pve/ceph.conf:id=admin:keyring=/etc/pve/priv/ceph/vm_nvme.keyring,if=none,id=drive-scsi0,format=alloc-track,file.driver=rbd,cache=none,aio=io_uring,file.detect-zeroes=on,backing=drive-scsi0-restore,auto-remove=on: warning: RBD options encoded in the filename as keyvalue pairs is deprecated

restore-scsi0: transferred 0.0 B of 16.0 GiB (0.00%) in 0s

restore-scsi0: stream-job finished

restore-drive jobs finished successfully, removing all tracking block devices

An error occurred during live-restore: VM 107 qmp command 'blockdev-del' failed - Node 'drive-scsi0-restore' is busy: node is used as backing hd of '#block289'

TASK ERROR: live-restore failed

What does this mean? I used the defaults plus the live-restore checkbox, and the VM was running on ESXi at the time. The error does not seem to occur when the VM is shut down on the ESXi side. Is this the intended behavior? If so, I may have misunderstood what "live-restore" means. I read Thomas' explanation in the news post, but I still get the error above.

An offline migration is running right now:

Code:

transferred 15.2 GiB of 16.0 GiB (95.09%)

transferred 15.4 GiB of 16.0 GiB (96.09%)

transferred 15.5 GiB of 16.0 GiB (97.09%)

transferred 15.7 GiB of 16.0 GiB (98.10%)

transferred 15.9 GiB of 16.0 GiB (99.10%)

transferred 16.0 GiB of 16.0 GiB (100.00%)

transferred 16.0 GiB of 16.0 GiB (100.00%)

scsi0: successfully created disk 'vm_nvme:vm-107-disk-0,size=16G'

TASK OK

Thanks,

Jonas
