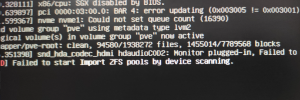

Failed to start Import ZFS pools by device scanning

- Thread starter why-be-banned



Hello,

can you run `lsblk` and `zpool status`?

```
root@pve:~# lsblk
NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT
sda 8:0 0 698.6G 0 disk
├─sda1 8:1 0 698.6G 0 part
└─sda9 8:9 0 8M 0 part
sdb 8:16 1 14.6T 0 disk
├─sdb1 8:17 1 100M 0 part
├─sdb2 8:18 1 16M 0 part
├─sdb3 8:19 1 77.5G 0 part
├─sdb4 8:20 1 571M 0 part
├─sdb5 8:21 1 100M 0 part
├─sdb6 8:22 1 12.4T 0 part
└─sdb7 8:23 1 2.1T 0 part
sdc 8:32 1 30.2G 0 disk
├─sdc1 8:33 1 1007K 0 part
├─sdc2 8:34 1 512M 0 part /boot/efi
└─sdc3 8:35 1 29.7G 0 part
  └─pve-root 253:0 0 29.7G 0 lvm /
nvme0n1 259:0 0 931.5G 0 disk
└─nvme0n1p1 259:1 0 931.5G 0 part /mnt/pve/nvme970ep
root@pve:~# zpool status
no pools available
```

I seem to have found the reason. I had passed a hard disk through to a virtual machine and created a zpool on that disk inside the VM.

When the host boots, it scans all disks to find every zpool, including the one on the passed-through disk.
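One way to check this theory from the host is to ask ZFS what a device scan can see: `zpool import` with no arguments searches the available devices and lists the pools it could import, without importing anything. The sketch below assumes that listing reports each pool on a line of the form `pool: <name>` and extracts just the names; the function name `scannable_pools` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the pool names found in `zpool import` output.
# Assumption: each discovered pool appears on a line "pool: <name>" (possibly indented);
# all other lines of the listing are ignored.
scannable_pools() {
  awk '$1 == "pool:" { print $2 }'
}

# Usage on the Proxmox host (as root); prints nothing if the scan finds no pools:
#   zpool import 2>&1 | scannable_pools
```

If a pool you only ever created inside a VM shows up here, the host's boot-time scan can see it too.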

Hi, I have exactly the same issue and don't know why.

```
root@proxmox:~# lsblk
NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS
sda 8:0 0 111.8G 0 disk
├─sda1 8:1 0 1007K 0 part
├─sda2 8:2 0 1G 0 part
└─sda3 8:3 0 110.8G 0 part
  ├─pve-swap 252:0 0 7.7G 0 lvm [SWAP]
  ├─pve-root 252:1 0 37.8G 0 lvm /
  ├─pve-data_tmeta 252:2 0 1G 0 lvm
  │ └─pve-data-tpool 252:4 0 49.6G 0 lvm
  │   ├─pve-data 252:5 0 49.6G 1 lvm
  │   ├─pve-vm--100--disk--0 252:6 0 32G 0 lvm
  │   └─pve-vm--101--disk--0 252:7 0 8G 0 lvm
  └─pve-data_tdata 252:3 0 49.6G 0 lvm
    └─pve-data-tpool 252:4 0 49.6G 0 lvm
      ├─pve-data 252:5 0 49.6G 1 lvm
      ├─pve-vm--100--disk--0 252:6 0 32G 0 lvm
      └─pve-vm--101--disk--0 252:7 0 8G 0 lvm
sdb 8:16 0 9.1T 0 disk
├─sdb1 8:17 0 9.1T 0 part
└─sdb9 8:25 0 8M 0 part
sdc 8:32 0 1.8T 0 disk
└─sdc1 8:33 0 1.8T 0 part
sdd 8:48 0 9.1T 0 disk
├─sdd1 8:49 0 9.1T 0 part
└─sdd9 8:57 0 8M 0 part
root@proxmox:~# zpool status
  pool: Stor10TB
 state: ONLINE
config:

        NAME                            STATE     READ WRITE CKSUM
        Stor10TB                        ONLINE       0     0     0
          mirror-0                      ONLINE       0     0     0
            ata-ST10000NM0046_ZA23520J  ONLINE       0     0     0
            ata-ST10000NM0046_ZA21K1R2  ONLINE       0     0     0

errors: No known data errors
```


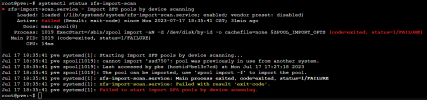

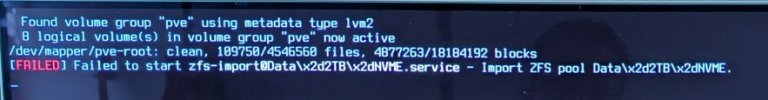

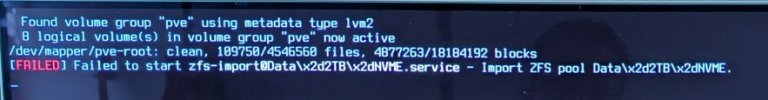

Hi, I also get the error message "failed to start ZFS-import ..." when booting Proxmox.

Is this a reason to worry, or can I just ignore it? Everything's working fine so far.

Probably it's the same reason as explained above:

I passed a hard disk through to a virtual machine, and maybe (I don't remember doing this...) there's a zpool on that disk.

When the host boots, it scans all disks to find every zpool.

Thank you for your advice!


What do `lsblk`, `zpool list` and `df` say?

Here's the output:

```
root@pve-HSM:~# lsblk
NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS
nvme0n1 259:0 0 1.9T 0 disk
└─nvme0n1p1 259:6 0 1.9T 0 part
nvme1n1 259:2 0 238.5G 0 disk
├─nvme1n1p1 259:3 0 1007K 0 part
├─nvme1n1p2 259:4 0 1G 0 part /boot/efi
└─nvme1n1p3 259:5 0 237.5G 0 part
  ├─pve-swap 252:0 0 8G 0 lvm [SWAP]
  ├─pve-root 252:1 0 69.4G 0 lvm /
  ├─pve-data_tmeta 252:2 0 1.4G 0 lvm
  │ └─pve-data-tpool 252:4 0 141.2G 0 lvm
  │   ├─pve-data 252:5 0 141.2G 1 lvm
  │   ├─pve-vm--104--disk--0 252:6 0 20G 0 lvm
  │   ├─pve-vm--100--disk--0 252:7 0 8G 0 lvm
  │   ├─pve-vm--102--disk--0 252:8 0 8G 0 lvm
  │   ├─pve-vm--105--disk--0 252:9 0 2G 0 lvm
  │   └─pve-vm--106--disk--0 252:10 0 6G 0 lvm
  └─pve-data_tdata 252:3 0 141.2G 0 lvm
    └─pve-data-tpool 252:4 0 141.2G 0 lvm
      ├─pve-data 252:5 0 141.2G 1 lvm
      ├─pve-vm--104--disk--0 252:6 0 20G 0 lvm
      ├─pve-vm--100--disk--0 252:7 0 8G 0 lvm
      ├─pve-vm--102--disk--0 252:8 0 8G 0 lvm
      ├─pve-vm--105--disk--0 252:9 0 2G 0 lvm
      └─pve-vm--106--disk--0 252:10 0 6G 0 lvm
root@pve-HSM:~# zpool status
no pools available
root@pve-HSM:~# df say
df: say: No such file or directory
```


What does `df` say? Also, `zpool status` isn't `zpool list`, but anyway: `lsblk` doesn't show any ZFS disk with the usual partition 1 + partition 9 layout. Maybe nvme0n1 was a single-disk ZFS pool: it still has partition 1, but partition 9 is gone now. So if nvme0n1 was given to ZFS as a whole disk and partition 9 is now missing, that NVMe has gone to heaven or hell and you need a new one. Lastly, what does `blkid` say?
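The partition-layout check described above can be automated roughly as follows. This is a heuristic sketch, not a ZFS tool: it assumes that a disk handed to ZFS as a whole disk shows both a partition 1 and a partition 9, and it reads the raw output of `lsblk -rno NAME,TYPE` (one `name type` pair per line, no tree characters). The function name `zfs_like_disks` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical heuristic: print whole disks that have both a partition 1 and a partition 9,
# the layout ZFS typically leaves on a disk it was given as a whole disk.
# Input: output of `lsblk -rno NAME,TYPE` on stdin.
zfs_like_disks() {
  awk '
    $2 == "disk" { disks[$1] = 1 }
    $2 == "part" { parts[$1] = 1 }
    END {
      for (d in disks) {
        # Disk names ending in a digit (e.g. nvme0n1) put a "p" before the partition number.
        sep = (d ~ /[0-9]$/) ? "p" : ""
        p1 = d sep "1"
        p9 = d sep "9"
        if ((p1 in parts) && (p9 in parts)) print d
      }
    }' | LC_ALL=C sort
}

# Usage on the host:
#   lsblk -rno NAME,TYPE | zfs_like_disks
```

Applied to the first `lsblk` listing in this thread it would report `sda` (which has `sda1` and `sda9`); applied to the `pve-HSM` listing it would report nothing, because `nvme0n1` only has `nvme0n1p1`.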


Hi, thanks for your quick reply.

Is this a reason to worry, or can I just ignore it?

Everything's working fine so far. I'm not aware of any problems, but I wanted to double-check what you think about this error message when booting Proxmox.

Thanks so much!

Here's the output:

```
root@pve-HSM:~# df
Filesystem 1K-blocks Used Available Use% Mounted on
udev 16286656 0 16286656 0% /dev
tmpfs 3264088 2332 3261756 1% /run
/dev/mapper/pve-root 71017632 17893236 49471176 27% /
tmpfs 16320440 34320 16286120 1% /dev/shm
tmpfs 5120 0 5120 0% /run/lock
efivarfs 150 79 67 55% /sys/firmware/efi/efivars
/dev/nvme1n1p2 1046512 52608 993904 6% /boot/efi
/dev/fuse 131072 20 131052 1% /etc/pve
//ip/Musik 1967874324 1671665280 296209044 85% /mnt/LMS-Music
//ip/RestDaten/syncFolders 1967874324 1671665280 296209044 85% /mnt/syncFolders
tmpfs 3264088 0 3264088 0% /run/user/0
root@pve-HSM:~# zpool list
no pools available
root@pve-HSM:~# blkid
/dev/mapper/pve-root: UUID="f7b1a8ec-6858-4cad-8d18-d62fe75fcf10" BLOCK_SIZE="4096" TYPE="ext4"
/dev/mapper/pve-vm--106--disk--0: UUID="01d8fb4b-2cdb-4c03-80aa-74835f851fd8" BLOCK_SIZE="4096" TYPE="ext4"
/dev/nvme0n1p1: UUID="a5d49e1c-4918-44d9-979f-7e861e2256fc" BLOCK_SIZE="4096" TYPE="ext4" PARTUUID="eda25130-c8b3-4013-801c-d5308b33bc66"
/dev/mapper/pve-vm--102--disk--0: UUID="78e022bb-03fb-4e89-9e60-10c1c05878f4" BLOCK_SIZE="4096" TYPE="ext4"
/dev/mapper/pve-swap: UUID="551f3995-a1bc-4c8f-bfd7-a50a6bf20c36" TYPE="swap"
/dev/mapper/pve-vm--105--disk--0: UUID="f5e6810b-83ae-4f0e-b053-3b7d7bc4fb91" BLOCK_SIZE="4096" TYPE="ext4"
/dev/mapper/pve-vm--100--disk--0: UUID="b136ebdd-1ddc-4e44-b83c-ae81b12a4d89" BLOCK_SIZE="4096" TYPE="ext4"
/dev/nvme1n1p2: UUID="BFDC-4824" BLOCK_SIZE="512" TYPE="vfat" PARTUUID="fdd67148-a50e-4ec4-a7e7-204fdd8289aa"
/dev/nvme1n1p3: UUID="M9aaHl-wcS7-23wG-mU7p-25XS-aG4v-sXLTrk" TYPE="LVM2_member" PARTUUID="e36f5203-3c9f-4e5d-a24c-ac1ec78dfb08"
/dev/mapper/pve-vm--104--disk--0: PTUUID="edc0b1f6" PTTYPE="dos"
/dev/nvme1n1p1: PARTUUID="299ea344-d02b-4836-8d82-83a47caed8f2"
```
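The `blkid` question can also be answered mechanically: `blkid` reports devices that belong to a ZFS pool with `TYPE="zfs_member"`, so filtering for that string shows whether the host sees any ZFS labels at all. A minimal sketch; the function name `zfs_members` is hypothetical. In the `blkid` listing above no line carries that type (`nvme0n1p1`, for example, is reported as `ext4`), so it would print nothing there.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the device paths that blkid identifies as ZFS pool members.
# Input: output of `blkid` on stdin, one 'DEVICE: KEY="value" ...' line per device.
zfs_members() {
  # The leading space keeps PTTYPE=... from matching; cut keeps the text before the first ':'.
  grep ' TYPE="zfs_member"' | cut -d: -f1
}

# Usage on the host (as root):
#   blkid | zfs_members
```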
