Hi.

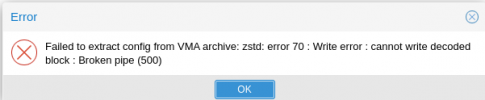

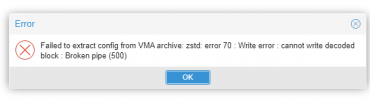

When I use 'Show configuration' on ANY of my VM backups (.zstd), I get 'Failed to extract config from VMA archive: zstd: error 70 : Write error : cannot write decoded block : Broken pipe (500)'. But if I restore the VM from the same backup, everything works.

'Show configuration' works fine with .lzo or .gz backups.

pveversion -v

proxmox-ve: 6.3-1 (running kernel: 5.4.78-2-pve)

pve-manager: 6.3-3 (running version: 6.3-3/eee5f901)

pve-kernel-5.4: 6.3-3

pve-kernel-helper: 6.3-3

pve-kernel-5.3: 6.1-6

pve-kernel-5.4.78-2-pve: 5.4.78-2

pve-kernel-5.4.78-1-pve: 5.4.78-1

pve-kernel-5.3.18-3-pve: 5.3.18-3

ceph-fuse: 14.2.16-pve1

corosync: 3.0.4-pve1

criu: 3.11-3

glusterfs-client: 8.0-2~bpo10+1

ifupdown: residual config

ifupdown2: 3.0.0-1+pve3

ksmtuned: 4.20150325+b1

libjs-extjs: 6.0.1-10

libknet1: 1.16-pve1

libproxmox-acme-perl: 1.0.7

libproxmox-backup-qemu0: 1.0.2-1

libpve-access-control: 6.1-3

libpve-apiclient-perl: 3.1-3

libpve-common-perl: 6.3-2

libpve-guest-common-perl: 3.1-3

libpve-http-server-perl: 3.1-1

libpve-storage-perl: 6.3-3

libqb0: 1.0.5-1

libspice-server1: 0.14.2-4~pve6+1

lvm2: 2.03.02-pve4

lxc-pve: 4.0.3-1

lxcfs: 4.0.3-pve3

novnc-pve: 1.1.0-1

openvswitch-switch: 2.12.0-1

proxmox-backup-client: 1.0.6-1

proxmox-mini-journalreader: 1.1-1

proxmox-widget-toolkit: 2.4-3

pve-cluster: 6.2-1

pve-container: 3.3-2

pve-docs: 6.3-1

pve-edk2-firmware: 2.20200531-1

pve-firewall: 4.1-3

pve-firmware: 3.1-3

pve-ha-manager: 3.1-1

pve-i18n: 2.2-2

pve-qemu-kvm: 5.1.0-7

pve-xtermjs: 4.7.0-3

qemu-server: 6.3-2

smartmontools: 7.1-pve2

spiceterm: 3.1-1

vncterm: 1.6-2

zfsutils-linux: 0.8.5-pve1

Upd1. I tried the latest zstd version from https://github.com/facebook/zstd. Same error.

Code:

zstd -V

*** zstd command line interface 64-bits v1.4.8, by Yann Collet ***
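One possible reading of the 'Broken pipe' part of the error, which is an assumption and not something confirmed in this post: 'Show configuration' may only need the beginning of the archive, so the reading side could close the pipe while the decompressor is still writing. The sketch below reproduces that general pattern in Python without zstd or Proxmox. The producer script, the 512-byte header size, and the exit code 70 (picked only to echo the number in the message) are all hypothetical stand-ins.

```python
import subprocess
import sys

# Hypothetical stand-in for a decompressor: a child process that writes
# far more data (64 MiB) than the reader will ever consume.
PRODUCER = r"""
import os
chunk = b"x" * 65536
try:
    for _ in range(1024):
        os.write(1, chunk)  # unbuffered write straight to stdout
except BrokenPipeError:
    # The reader closed its end of the pipe before we finished writing.
    os.write(2, b"write error: broken pipe\n")
    os._exit(70)  # arbitrary code, chosen to echo the message above
os._exit(0)
"""


def read_header_only(header_size=512):
    """Read only the first header_size bytes, then close the pipe early."""
    proc = subprocess.Popen(
        [sys.executable, "-c", PRODUCER],
        stdout=subprocess.PIPE,
        stderr=subprocess.PIPE,
    )
    header = proc.stdout.read(header_size)
    proc.stdout.close()  # stop reading; the producer's next write fails
    err = proc.stderr.read().decode()
    proc.stderr.close()
    returncode = proc.wait()
    return header, returncode, err


if __name__ == "__main__":
    header, returncode, err = read_header_only()
    print(len(header), returncode, err.strip())
```

If this is what happens here, the data that was read is still intact and the failure is only the producer reporting that nobody is listening any more, which would fit a full restore working while the config preview fails.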
