Hi all.

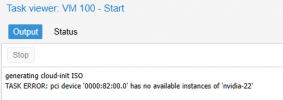

I'm getting an error with vGPU.

My machine has an Intel Xeon E5-2696 v4 CPU and an NVIDIA Tesla M40.

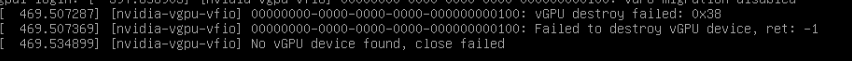

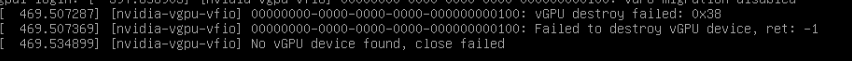

If I shut down the VM with the Shutdown button in the Proxmox GUI, I get the error "Failed to destroy vGPU".

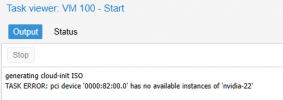

After that, I can't start this VM again.

I'm running kernel 5.15.74-1-pve and PVE version 7.3-4.

Config file /etc/default/grub:

GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on iommu=pt initcall_blacklist=sysfb_init"
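Editing /etc/default/grub only takes effect after the bootloader config is regenerated and the host is rebooted, so it can help to confirm that the running kernel actually booted with these parameters. The sketch below is a hypothetical helper (the names `EXPECTED` and `missing_params` are illustrative, not part of Proxmox) that compares the parameters from the GRUB line above against `/proc/cmdline`, the standard Linux file holding the running kernel's command line:

```python
from pathlib import Path

# Parameters copied from the GRUB_CMDLINE_LINUX_DEFAULT line in the post.
EXPECTED = ["intel_iommu=on", "iommu=pt", "initcall_blacklist=sysfb_init"]


def missing_params(cmdline, expected=EXPECTED):
    """Return the expected parameters that are absent from a kernel command line."""
    tokens = set(cmdline.split())
    return [p for p in expected if p not in tokens]


if __name__ == "__main__":
    path = Path("/proc/cmdline")  # only present on Linux
    if path.exists():
        missing = missing_params(path.read_text())
        if missing:
            print("missing from running kernel:", " ".join(missing))
        else:
            print("all expected parameters are active")
```

An empty result only shows the parameters were passed at boot; it does not by itself explain the vGPU teardown failure.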

I have attached dmesg below.

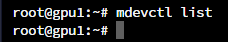

Checking deeper with nvidia-smi, I see the vGPU for the VM is still running; it was not destroyed.

And mdevctl does not list it.
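The kernel's VFIO mediated-device framework exposes each active mdev under `/sys/bus/mdev/devices/<uuid>`, with an `mdev_type` symlink pointing at its type directory. A minimal sketch, assuming that sysfs layout, lists what the kernel itself still considers present, which may help tell whether the leftover vGPU seen in nvidia-smi still exists as an mdev or only in the NVIDIA driver's state. The function name `list_mdevs` is hypothetical:

```python
from pathlib import Path

# Standard sysfs location of mediated devices (VFIO mdev framework).
MDEV_ROOT = Path("/sys/bus/mdev/devices")


def list_mdevs(root=MDEV_ROOT):
    """Map each mediated-device UUID found under root to its mdev type name."""
    result = {}
    if not root.is_dir():
        return result
    for dev in sorted(root.iterdir()):
        type_link = dev / "mdev_type"
        # mdev_type is a symlink to the type directory; its last path
        # component is the type name. Fall back to "unknown" if it is absent.
        type_name = type_link.resolve().name if type_link.exists() else "unknown"
        result[dev.name] = type_name
    return result


if __name__ == "__main__":
    for uuid, mdev_type in list_mdevs().items():
        print(uuid, mdev_type)
```

If the VM's UUID is missing here while nvidia-smi still reports the vGPU, that would suggest the mdev was removed from sysfs but the driver did not release it; this is an interpretation, not a confirmed diagnosis.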

This doesn't happen if I choose Stop instead of Shutdown for the VM.

Please help me. Thanks.