Hello,

I'm trying to migrate a VM from one node to another in the same cluster, and it fails at the end with this error:

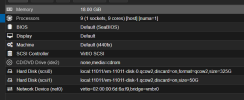

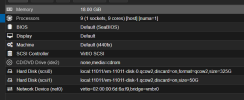


```
2025-04-09 10:23:55 use dedicated network address for sending migration traffic (192.168.150.26)
2025-04-09 10:23:55 starting migration of VM 11011 to node 'proxmox26s' (192.168.150.26)
2025-04-09 10:23:55 found local disk 'local:11011/vm-11011-disk-0.qcow2' (attached)
2025-04-09 10:23:55 found local disk 'local:11011/vm-11011-disk-1.qcow2' (attached)
2025-04-09 10:23:55 starting VM 11011 on remote node 'proxmox26s'
2025-04-09 10:23:57 volume 'local:11011/vm-11011-disk-0.qcow2' is 'local:11011/vm-11011-disk-0.qcow2' on the target
2025-04-09 10:23:57 volume 'local:11011/vm-11011-disk-1.qcow2' is 'local:11011/vm-11011-disk-1.qcow2' on the target
2025-04-09 10:23:57 start remote tunnel
2025-04-09 10:23:58 ssh tunnel ver 1
2025-04-09 10:23:58 starting storage migration
2025-04-09 10:23:58 scsi1: start migration to nbd:unix:/run/qemu-server/11011_nbd.migrate:exportname=drive-scsi1
drive mirror is starting for drive-scsi1
drive-scsi1: transferred 196.9 MiB of 50.0 GiB (0.38%) in 1s
... skipped 170 lines...
drive-scsi1: transferred 50.2 GiB of 50.2 GiB (100.00%) in 2m 55s, ready
all 'mirror' jobs are ready
2025-04-09 10:26:53 scsi0: start migration to nbd:unix:/run/qemu-server/11011_nbd.migrate:exportname=drive-scsi0
drive mirror is starting for drive-scsi0
drive-scsi0: transferred 124.9 MiB of 325.0 GiB (0.04%) in 12s
... skipped 1610 lines...
drive-scsi0: transferred 325.2 GiB of 325.2 GiB (100.00%) in 27m 13s, ready
all 'mirror' jobs are ready
2025-04-09 10:54:06 switching mirror jobs to actively synced mode
drive-scsi0: switching to actively synced mode
drive-scsi1: switching to actively synced mode
drive-scsi0: successfully switched to actively synced mode
drive-scsi1: successfully switched to actively synced mode
2025-04-09 10:54:07 starting online/live migration on unix:/run/qemu-server/11011.migrate
2025-04-09 10:54:07 set migration capabilities
2025-04-09 10:54:07 migration downtime limit: 100 ms
2025-04-09 10:54:07 migration cachesize: 2.0 GiB
2025-04-09 10:54:07 set migration parameters
2025-04-09 10:54:07 start migrate command to unix:/run/qemu-server/11011.migrate
2025-04-09 10:54:08 migration active, transferred 134.9 MiB of 18.0 GiB VM-state, 119.3 MiB/s
... skipped 165 lines...
2025-04-09 10:56:56 migration active, transferred 18.6 GiB of 18.0 GiB VM-state, 122.7 MiB/s
2025-04-09 10:56:57 migration active, transferred 18.7 GiB of 18.0 GiB VM-state, 114.4 MiB/s
2025-04-09 10:56:58 migration active, transferred 18.8 GiB of 18.0 GiB VM-state, 110.9 MiB/s
2025-04-09 10:56:59 migration active, transferred 18.9 GiB of 18.0 GiB VM-state, 111.7 MiB/s
2025-04-09 10:57:00 migration active, transferred 19.0 GiB of 18.0 GiB VM-state, 123.9 MiB/s
2025-04-09 10:57:01 migration active, transferred 19.1 GiB of 18.0 GiB VM-state, 115.6 MiB/s
2025-04-09 10:57:02 migration active, transferred 19.2 GiB of 18.0 GiB VM-state, 116.6 MiB/s
2025-04-09 10:57:03 migration active, transferred 19.3 GiB of 18.0 GiB VM-state, 15.7 MiB/s, VM dirties lots of memory: 62.9 MiB/s
2025-04-09 10:57:04 migration active, transferred 19.4 GiB of 18.0 GiB VM-state, 112.2 MiB/s
2025-04-09 10:57:05 migration active, transferred 19.6 GiB of 18.0 GiB VM-state, 114.4 MiB/s
2025-04-09 10:57:06 migration active, transferred 19.7 GiB of 18.0 GiB VM-state, 115.5 MiB/s
2025-04-09 10:57:07 migration active, transferred 19.8 GiB of 18.0 GiB VM-state, 111.2 MiB/s
2025-04-09 10:57:08 migration active, transferred 19.9 GiB of 18.0 GiB VM-state, 109.9 MiB/s
2025-04-09 10:57:09 migration active, transferred 20.0 GiB of 18.0 GiB VM-state, 109.3 MiB/s
2025-04-09 10:57:10 migration active, transferred 20.1 GiB of 18.0 GiB VM-state, 140.1 MiB/s
2025-04-09 10:57:10 xbzrle: send updates to 14120 pages in 20.4 MiB encoded memory, cache-miss 99.10%, overflow 1361
2025-04-09 10:57:11 migration active, transferred 20.2 GiB of 18.0 GiB VM-state, 142.4 MiB/s
2025-04-09 10:57:11 xbzrle: send updates to 25842 pages in 50.9 MiB encoded memory, cache-miss 99.10%, overflow 2803
2025-04-09 10:57:12 migration active, transferred 20.3 GiB of 18.0 GiB VM-state, 135.7 MiB/s
2025-04-09 10:57:12 xbzrle: send updates to 37807 pages in 82.2 MiB encoded memory, cache-miss 99.10%, overflow 4290
2025-04-09 10:57:13 migration active, transferred 20.4 GiB of 18.0 GiB VM-state, 134.0 MiB/s
2025-04-09 10:57:13 xbzrle: send updates to 48124 pages in 108.6 MiB encoded memory, cache-miss 75.58%, overflow 5518
2025-04-09 10:57:13 auto-increased downtime to continue migration: 200 ms
2025-04-09 10:57:14 migration active, transferred 20.5 GiB of 18.0 GiB VM-state, 128.4 MiB/s
2025-04-09 10:57:14 xbzrle: send updates to 57719 pages in 135.9 MiB encoded memory, cache-miss 75.58%, overflow 6849
2025-04-09 10:57:15 migration active, transferred 20.7 GiB of 18.0 GiB VM-state, 133.0 MiB/s
2025-04-09 10:57:15 xbzrle: send updates to 69871 pages in 169.5 MiB encoded memory, cache-miss 75.58%, overflow 8582
2025-04-09 10:57:16 migration active, transferred 20.8 GiB of 18.0 GiB VM-state, 139.9 MiB/s
2025-04-09 10:57:16 xbzrle: send updates to 79971 pages in 194.5 MiB encoded memory, cache-miss 75.58%, overflow 9929
2025-04-09 10:57:17 migration active, transferred 20.9 GiB of 18.0 GiB VM-state, 160.3 MiB/s
2025-04-09 10:57:17 xbzrle: send updates to 97533 pages in 236.9 MiB encoded memory, cache-miss 75.58%, overflow 12522
2025-04-09 10:57:18 migration active, transferred 21.0 GiB of 18.0 GiB VM-state, 164.2 MiB/s
2025-04-09 10:57:18 xbzrle: send updates to 115012 pages in 272.2 MiB encoded memory, cache-miss 64.16%, overflow 15182
2025-04-09 10:57:19 migration active, transferred 21.1 GiB of 18.0 GiB VM-state, 805.5 MiB/s
2025-04-09 10:57:19 xbzrle: send updates to 168193 pages in 293.6 MiB encoded memory, cache-miss 64.16%, overflow 16554
2025-04-09 10:57:20 average migration speed: 95.6 MiB/s - downtime 58 ms
2025-04-09 10:57:20 migration status: completed
all 'mirror' jobs are ready
drive-scsi0: Completing block job...
drive-scsi0: Completed successfully.
drive-scsi1: Completing block job...
drive-scsi1: Completed successfully.
drive-scsi0: Cancelling block job
drive-scsi1: Cancelling block job
drive-scsi0: Done.
WARN: drive-scsi1: Input/output error (io-status: ok)
drive-scsi1: Done.
2025-04-09 10:57:22 ERROR: online migrate failure - Failed to complete storage migration: block job (mirror) error: drive-scsi0: Input/output error (io-status: ok)
2025-04-09 10:57:22 aborting phase 2 - cleanup resources
2025-04-09 10:57:22 migrate_cancel
2025-04-09 10:57:28 ERROR: migration finished with problems (duration 00:33:33)
TASK ERROR: migration problems
```

There are no snapshots and no TPM. Here are the specs of the VM:

Should I plan a 30-minute downtime window, shut down the VM, and retry the migration with the VM powered off?
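For reference, here is a rough estimate of how long an offline copy might take, using the disk sizes and mirror times from the log above. It assumes the offline transfer reaches roughly the same throughput as the live drive mirror did, which is only a guess: the offline path may be faster or slower.

```python
# Rough downtime estimate for an offline migration of VM 11011.
# Sizes and durations are copied from the live-migration log above.
# Assumption: the offline copy runs at about the same rate as the live
# drive mirror, which is not guaranteed.

GIB_IN_MIB = 1024

# (disk, size in GiB, seconds the live drive mirror needed to reach "ready")
observed = [
    ("scsi1", 50.2, 2 * 60 + 55),    # 2m 55s
    ("scsi0", 325.2, 27 * 60 + 13),  # 27m 13s
]


def throughput_mib_s(size_gib: float, seconds: int) -> float:
    """Average transfer rate in MiB/s for one disk."""
    return size_gib * GIB_IN_MIB / seconds


total_seconds = 0
for disk, size_gib, seconds in observed:
    rate = throughput_mib_s(size_gib, seconds)
    total_seconds += seconds
    print(f"{disk}: {size_gib} GiB in {seconds} s, about {rate:.0f} MiB/s")

total_minutes = total_seconds / 60
print(f"disk copy alone: about {total_minutes:.1f} minutes")
```

This prints about 294 MiB/s for scsi1, about 204 MiB/s for scsi0, and about 30.1 minutes in total, so at those rates a 30-minute window would leave little or no margin.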

Any help would be appreciated.

Thank you.