You always have to run update tools on the root OS, which in this case is Proxmox.

What is sda, an HDD?

I just noticed that I must have misread: sda is an HDD, a WD from the RED series (4TB). It is already very old. I just ran another test:

The update tool seems to be available only for Windows 10 and 11 :-(

Yes, sda is an HDD: a 10TB Toshiba IronWolf. I posted the SMART values above: https://forum.proxmox.com/threads/failed-failed-to-mount-mnt-pve-cephfs.122514/post-533794

sudo smartctl --attributes /dev/sda

=== START OF READ SMART DATA SECTION ===

SMART Attributes Data Structure revision number: 16

Vendor Specific SMART Attributes with Thresholds:

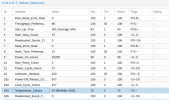

ID# ATTRIBUTE_NAME FLAG VALUE WORST THRESH TYPE UPDATED WHEN_FAILED RAW_VALUE

1 Raw_Read_Error_Rate 0x002f 200 200 051 Pre-fail Always - 28

3 Spin_Up_Time 0x0027 195 170 021 Pre-fail Always - 7233

4 Start_Stop_Count 0x0032 100 100 000 Old_age Always - 247

5 Reallocated_Sector_Ct 0x0033 200 200 140 Pre-fail Always - 0

7 Seek_Error_Rate 0x002e 200 200 000 Old_age Always - 0

9 Power_On_Hours 0x0032 008 008 000 Old_age Always - 67874

10 Spin_Retry_Count 0x0032 100 100 000 Old_age Always - 0

11 Calibration_Retry_Count 0x0032 100 100 000 Old_age Always - 0

12 Power_Cycle_Count 0x0032 100 100 000 Old_age Always - 230

192 Power-Off_Retract_Count 0x0032 200 200 000 Old_age Always - 175

193 Load_Cycle_Count 0x0032 199 199 000 Old_age Always - 3758

194 Temperature_Celsius 0x0022 123 102 000 Old_age Always - 29

196 Reallocated_Event_Count 0x0032 200 200 000 Old_age Always - 0

197 Current_Pending_Sector 0x0032 200 200 000 Old_age Always - 0

198 Offline_Uncorrectable 0x0030 100 253 000 Old_age Offline - 0

199 UDMA_CRC_Error_Count 0x0032 200 200 000 Old_age Always - 0

200 Multi_Zone_Error_Rate 0x0008 200 200 000 Old_age Offline - 0

Apr 25 14:28:05 s301 smartd[807]: Device: /dev/sdc [SAT], SMART Usage Attribute: 194 Temperature_Celsius changed from 71 to 70

CHECK POWER STATUS spins up disk (0x81 -> 0xff)

Apr 26 13:34:10 s301 kernel: pcieport 0000:00:01.0: AER: Corrected error received: 0000:02:00.0

Apr 26 13:34:10 s301 kernel: atlantic 0000:02:00.0: PCIe Bus Error: severity=Corrected, type=Physical Layer, (Receiver ID)

Apr 26 13:34:10 s301 kernel: atlantic 0000:02:00.0: device [1d6a:d107] error status/mask=00000001/0000a000

Apr 26 13:34:10 s301 kernel: atlantic 0000:02:00.0: [ 0] RxErr (First)

root@s301:~# sensors

adt7473-i2c-3-2e

Adapter: nvkm-0000:01:00.0-bus-0002

in1: 3.00 V (min = +0.00 V, max = +2.99 V)

+3.3V: 3.28 V (min = +0.00 V, max = +4.39 V)

fan1: 4873 RPM (min = 0 RPM)

fan2: 0 RPM (min = 0 RPM)

fan3: 0 RPM (min = 164 RPM) ALARM

fan4: 0 RPM (min = 0 RPM)

temp1: +31.8°C (low = +72.0°C, high = +92.0°C) ALARM

(crit = +191.0°C, hyst = +189.0°C)

Board Temp: +27.5°C (low = +20.0°C, high = +60.0°C)

(crit = +100.0°C, hyst = +96.0°C)

temp3: +32.0°C (low = +80.0°C, high = +105.0°C) ALARM

(crit = +100.0°C, hyst = +96.0°C)

ens4-pci-0200

Adapter: PCI adapter

MAC Temperature: +39.4°C

nouveau-pci-0100

Adapter: PCI adapter

GPU core: 1.20 V (min = +1.20 V, max = +1.32 V)

fan1: 0 RPM

temp1: +48.0°C (high = +95.0°C, hyst = +3.0°C)

(crit = +115.0°C, hyst = +2.0°C)

(emerg = +130.0°C, hyst = +10.0°C)

coretemp-isa-0000

Adapter: ISA adapter

Package id 0: +35.0°C (high = +86.0°C, crit = +96.0°C)

Core 0: +28.0°C (high = +86.0°C, crit = +96.0°C)

Core 1: +26.0°C (high = +86.0°C, crit = +96.0°C)

Core 2: +30.0°C (high = +86.0°C, crit = +96.0°C)

Core 3: +28.0°C (high = +86.0°C, crit = +96.0°C)

Core 4: +30.0°C (high = +86.0°C, crit = +96.0°C)

Core 8: +27.0°C (high = +86.0°C, crit = +96.0°C)

Core 9: +25.0°C (high = +86.0°C, crit = +96.0°C)

Core 10: +30.0°C (high = +86.0°C, crit = +96.0°C)

Core 11: +30.0°C (high = +86.0°C, crit = +96.0°C)

Core 12: +25.0°C (high = +86.0°C, crit = +96.0°C)

nvme-pci-0300

Adapter: PCI adapter

Composite: +26.9°C (low = -0.1°C, high = +69.8°C)

(crit = +89.8°C)

root@s301:~#

root@s301:~# sudo smartctl --attributes /dev/sdb

smartctl 7.2 2020-12-30 r5155 [x86_64-linux-6.2.9-1-pve] (local build)

Copyright (C) 2002-20, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF READ SMART DATA SECTION ===

SMART Attributes Data Structure revision number: 10

Vendor Specific SMART Attributes with Thresholds:

ID# ATTRIBUTE_NAME FLAG VALUE WORST THRESH TYPE UPDATED WHEN_FAILED RAW_VALUE

1 Raw_Read_Error_Rate 0x000f 079 064 044 Pre-fail Always - 74917864

3 Spin_Up_Time 0x0003 096 089 000 Pre-fail Always - 0

4 Start_Stop_Count 0x0032 100 100 020 Old_age Always - 387

5 Reallocated_Sector_Ct 0x0033 100 100 010 Pre-fail Always - 0

7 Seek_Error_Rate 0x000f 086 060 045 Pre-fail Always - 432444172

9 Power_On_Hours 0x0032 093 093 000 Old_age Always - 6263

10 Spin_Retry_Count 0x0013 100 100 097 Pre-fail Always - 0

12 Power_Cycle_Count 0x0032 100 100 020 Old_age Always - 388

18 Head_Health 0x000b 100 100 050 Pre-fail Always - 0

187 Reported_Uncorrect 0x0032 100 100 000 Old_age Always - 0

188 Command_Timeout 0x0032 100 086 000 Old_age Always - 14

190 Airflow_Temperature_Cel 0x0022 071 049 040 Old_age Always - 29 (Min/Max 28/37)

192 Power-Off_Retract_Count 0x0032 100 100 000 Old_age Always - 324

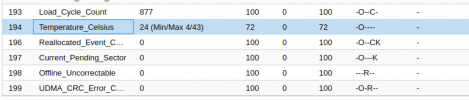

193 Load_Cycle_Count 0x0032 085 085 000 Old_age Always - 30802

194 Temperature_Celsius 0x0022 029 043 000 Old_age Always - 29 (0 10 0 0 0)

195 Hardware_ECC_Recovered 0x001a 079 064 000 Old_age Always - 74917864

197 Current_Pending_Sector 0x0012 100 100 000 Old_age Always - 0

198 Offline_Uncorrectable 0x0010 100 100 000 Old_age Offline - 0

199 UDMA_CRC_Error_Count 0x003e 200 200 000 Old_age Always - 22

200 Pressure_Limit 0x0023 100 100 001 Pre-fail Always - 0

240 Head_Flying_Hours 0x0000 100 253 000 Old_age Offline - 5118h+56m+32.426s

241 Total_LBAs_Written 0x0000 100 253 000 Old_age Offline - 98253963914

242 Total_LBAs_Read 0x0000 100 253 000 Old_age Offline - 314816655635

root@s301:~#
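To make the sdb dump above easier to scan, here is a minimal Python sketch that parses `smartctl --attributes` style rows and lists watched counters whose raw value is not zero. The watch list `WATCHED` and the helper names `parse_attributes` and `nonzero_watched` are assumptions made for illustration; smartmontools does not define them. The sample rows are copied from the sdb output.

```python
import re

# Assumption: a small, hand-picked watch list, not an official smartmontools list.
WATCHED = (
    "Reallocated_Sector_Ct",
    "Current_Pending_Sector",
    "Offline_Uncorrectable",
    "UDMA_CRC_Error_Count",
)


def parse_attributes(text):
    """Parse attribute rows (ID NAME FLAG VALUE WORST THRESH TYPE UPDATED WHEN_FAILED RAW...)."""
    attrs = {}
    for line in text.splitlines():
        fields = line.split()
        # Skip banners, headers and anything that does not start with a numeric ID.
        if len(fields) < 10 or not fields[0].isdigit():
            continue
        raw = " ".join(fields[9:])
        leading = re.match(r"\d+", raw)
        attrs[fields[1]] = {
            "value": int(fields[3]),
            "worst": int(fields[4]),
            "thresh": int(fields[5]),
            "raw": raw,
            # Leading integer of the raw column, or None if it has none.
            "raw_int": int(leading.group()) if leading else None,
        }
    return attrs


def nonzero_watched(attrs, watched=WATCHED):
    """Return {name: raw_int} for watched attributes with a nonzero raw value."""
    return {
        name: attrs[name]["raw_int"]
        for name in watched
        if name in attrs and attrs[name]["raw_int"]
    }


SAMPLE = """\
5 Reallocated_Sector_Ct 0x0033 100 100 010 Pre-fail Always - 0
197 Current_Pending_Sector 0x0012 100 100 000 Old_age Always - 0
198 Offline_Uncorrectable 0x0010 100 100 000 Old_age Offline - 0
199 UDMA_CRC_Error_Count 0x003e 200 200 000 Old_age Always - 22
"""

if __name__ == "__main__":
    print(nonzero_watched(parse_attributes(SAMPLE)))
    # prints {'UDMA_CRC_Error_Count': 22}
```

On the sdb rows this flags only UDMA_CRC_Error_Count (raw 22). That counter is commonly associated with the SATA cable or link rather than the disk surface, so checking the cabling looks like a reasonable next step.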

TASK ERROR: command '/bin/systemctl start ceph-osd@1' failed: exit code 1

May 18 11:42:35 s301 ceph-crash[847]: WARNING:ceph-crash:post /var/lib/ceph/crash/2023-05-18T09:31:39.422123Z_7d306587-c0f3-4629-88bb-cd61b730a5d1 as client.crash failed: (None, b'2023-05-18T11:42:35.931+0200 7faaf927b700 -1 auth: unable to find a keyring on /etc/pve/priv/ceph.client.crash.keyring: (2) No such file or directory\n2023-05-18T11:42:35.931+0200 7faaf927b700 -1 AuthRegistry(0x7faaf4060640) no keyring found at /etc/pve/priv/ceph.client.crash.keyring, disabling cephx\n2023-05-18T11:42:35.935+0200 7faaf927b700 -1 auth: unable to find a keyring on /etc/pve/priv/ceph.client.crash.keyring: (2) No such file or directory\n2023-05-18T11:42:35.935+0200 7faaf927b700 -1 AuthRegistry(0x7faaf4065a40) no keyring found at /etc/pve/priv/ceph.client.crash.keyring, disabling cephx\n2023-05-18T11:42:35.935+0200 7faaf927b700 -1 auth: unable to find a keyring on /etc/pve/priv/ceph.client.crash.keyring: (2) No such file or directory\n2023-05-18T11:42:35.935+0200 7faaf927b700 -1 AuthRegistry(0x7faaf927a0d0) no keyring found at /etc/pve/priv/ceph.client.crash.keyring, disabling cephx\n[errno 2] RADOS object not found (error connecting to the cluster)\n')

May 18 11:42:53 s301 systemd[1]: Starting Cleanup of Temporary Directories...

May 18 11:42:53 s301 systemd[1]: systemd-tmpfiles-clean.service: Succeeded.

May 18 11:42:53 s301 systemd[1]: Finished Cleanup of Temporary Directories.

May 18 11:43:05 s301 ceph-crash[847]: WARNING:ceph-crash:post /var/lib/ceph/crash/2023-05-18T09:31:39.422123Z_7d306587-c0f3-4629-88bb-cd61b730a5d1 as client.admin failed: (None, b'')

May 18 11:43:21 s301 systemd[1]: Starting Daily apt upgrade and clean activities...

May 18 11:43:21 s301 systemd[1]: apt-daily-upgrade.service: Succeeded.

May 18 11:43:21 s301 systemd[1]: Finished Daily apt upgrade and clean activities

root@s301:/etc/pve/priv# ls -al

total 4

drwx------ 2 root www-data 0 Dec 14 20:30 .

drwxr-xr-x 2 root www-data 0 Jan 1 1970 ..

drwx------ 2 root www-data 0 Dec 14 20:30 acme

-rw------- 1 root www-data 1675 May 18 11:28 authkey.key

-rw------- 1 root www-data 1154 May 18 11:28 authorized_keys

drwx------ 2 root www-data 0 Dec 18 20:16 ceph

-rw------- 1 root www-data 151 Dec 14 21:18 ceph.client.admin.keyring

-rw------- 1 root www-data 228 Dec 14 21:18 ceph.mon.keyring

-rw------- 1 root www-data 1124 May 18 11:28 known_hosts

drwx------ 2 root www-data 0 Dec 14 20:30 lock

-rw------- 1 root www-data 3243 Dec 14 20:30 pve-root-ca.key

-rw------- 1 root www-data 3 Dec 14 20:30 pve-root-ca.srl

root@s301:/etc/pve/priv#

root@s301:~# cat /etc/pve/ceph.conf

[global]

auth_client_required = cephx

auth_cluster_required = cephx

auth_service_required = cephx

cluster_network = 192.168.1.89/24

fsid = 93764ec6-4ae0-46d7-b3ac-5ba31a407168

mon_allow_pool_delete = true

mon_host = 192.168.1.89

ms_bind_ipv4 = true

ms_bind_ipv6 = false

osd_pool_default_min_size = 2

osd_pool_default_size = 2

public_network = 192.168.1.89/24

[client]

keyring = /etc/pve/priv/$cluster.$name.keyring

[mds]

keyring = /var/lib/ceph/mds/ceph-$id/keyring

[mon.s301]

public_addr = 192.168.1.89
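The `[client]` keyring line in this config explains the path in the ceph-crash warning. Here is a minimal Python sketch of the `$cluster` / `$name` substitution, checked against the file names from the `ls -al` listing of /etc/pve/priv. The helper `expand_keyring` is hypothetical and only mimics the substitution for these two variables; the cluster name `ceph` is inferred from the path in the log.

```python
from pathlib import PurePosixPath

# Template copied from the [client] section of /etc/pve/ceph.conf.
KEYRING_TEMPLATE = "/etc/pve/priv/$cluster.$name.keyring"

# Keyring files visible in the `ls -al` listing of /etc/pve/priv.
PRESENT = {"ceph.client.admin.keyring", "ceph.mon.keyring"}


def expand_keyring(template, cluster, name):
    """Hypothetical helper: substitute $cluster and $name in a keyring template."""
    return template.replace("$cluster", cluster).replace("$name", name)


if __name__ == "__main__":
    for name in ("client.admin", "client.crash"):
        path = expand_keyring(KEYRING_TEMPLATE, "ceph", name)
        found = PurePosixPath(path).name in PRESENT
        print(path, "present" if found else "missing")
    # prints:
    # /etc/pve/priv/ceph.client.admin.keyring present
    # /etc/pve/priv/ceph.client.crash.keyring missing
```

So the `client.crash` attempt fails because no matching keyring file exists in that directory. As far as this thread shows, that only affects posting crash reports and is separate from the question of why osd.1 aborts.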

root@s301:~#

no keyring found at /etc/pve/priv/ceph.client.crash.keyring, disabling cephx\n[errno 2] RADOS object not found (error connecting to the cluster)

ceph-osd@1.service - Ceph object storage daemon osd.1

Loaded: loaded (/lib/systemd/system/ceph-osd@.service; enabled-runtime; ven>

Drop-In: /usr/lib/systemd/system/ceph-osd@.service.d

└─ceph-after-pve-cluster.conf

Active: failed (Result: signal) since Fri 2023-05-19 12:02:31 CEST; 7min ago

Process: 5186 ExecStartPre=/usr/libexec/ceph/ceph-osd-prestart.sh --cluster >

Process: 5190 ExecStart=/usr/bin/ceph-osd -f --cluster ${CLUSTER} --id 1 --s>

Main PID: 5190 (code=killed, signal=ABRT)

CPU: 40.784s

May 19 12:02:31 s301 systemd[1]: ceph-osd@1.service: Scheduled restart job, rest>

May 19 12:02:31 s301 systemd[1]: Stopped Ceph object storage daemon osd.1.

May 19 12:02:31 s301 systemd[1]: ceph-osd@1.service: Consumed 40.784s CPU time.

May 19 12:02:31 s301 systemd[1]: ceph-osd@1.service: Start request repeated too >

May 19 12:02:31 s301 systemd[1]: ceph-osd@1.service: Failed with result 'signal'.

May 19 12:02:31 s301 systemd[1]: Failed to start Ceph object storage daemon osd.>

May 19 12:04:10 s301 systemd[1]: ceph-osd@1.service: Start request repeated too >

May 19 12:04:10 s301 systemd[1]: ceph-osd@1.service: Failed with result 'signal'.

May 19 12:04:10 s301 systemd[1]: Failed to start Ceph object storage daemon osd.>

root@s301:~# ceph -s

cluster:

id: 93764ec6-4ae0-46d7-b3ac-5ba31a407168

health: HEALTH_ERR

7 scrub errors

Possible data damage: 7 pgs inconsistent

13 pgs not deep-scrubbed in time

4 pgs not scrubbed in time

28 daemons have recently crashed

services:

mon: 1 daemons, quorum s301 (age 15m)

mgr: s301(active, since 15m)

osd: 4 osds: 3 up (since 13m), 3 in (since 4d); 29 remapped pgs

data:

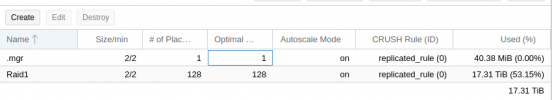

pools: 2 pools, 129 pgs

objects: 2.20M objects, 8.3 TiB

usage: 18 TiB used, 17 TiB / 35 TiB avail

pgs: 223920/4391346 objects misplaced (5.099%)

93 active+clean

28 active+remapped+backfilling

6 active+clean+inconsistent

1 active+clean+scrubbing+deep

1 active+remapped+inconsistent+backfilling

io:

recovery: 23 MiB/s, 5 objects/s
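As a sanity check on the `ceph -s` numbers, here is a small Python sketch that recomputes the misplaced percentage and adds up the PG states. All figures are copied from the output above. The assumption that both pools use 2 replicas comes only from `osd_pool_default_size = 2` in ceph.conf and may not match the actual pool settings.

```python
# Figures copied from the `ceph -s` output.
MISPLACED = 223920
TOTAL_COPIES = 4391346
PG_STATES = {
    "active+clean": 93,
    "active+remapped+backfilling": 28,
    "active+clean+inconsistent": 6,
    "active+clean+scrubbing+deep": 1,
    "active+remapped+inconsistent+backfilling": 1,
}
ASSUMED_REPLICAS = 2  # assumption: taken from osd_pool_default_size in ceph.conf


def misplaced_percent(misplaced, total):
    """Share of object copies that are not yet on their target OSD, in percent."""
    return round(100 * misplaced / total, 3)


def inconsistent_pgs(states):
    """Count PGs whose state string contains the 'inconsistent' flag."""
    return sum(n for state, n in states.items() if "inconsistent" in state.split("+"))


if __name__ == "__main__":
    print(misplaced_percent(MISPLACED, TOTAL_COPIES))  # 5.099
    print(sum(PG_STATES.values()))                     # 129
    print(inconsistent_pgs(PG_STATES))                 # 7
    print(TOTAL_COPIES // ASSUMED_REPLICAS)            # 2195673
```

The totals line up with the summary: 129 PGs, 7 inconsistent PGs matching the "7 pgs inconsistent" health line, and about 2.20M objects if each object has two copies.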