Hello,

I am a long-term fan of the Proxmox ecosystem; a lot of work has been done to bring the cloud to the masses. I typically search a lot before posting to forums, but I am out of ideas now. The problem:

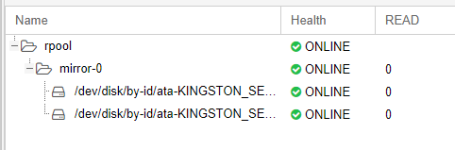

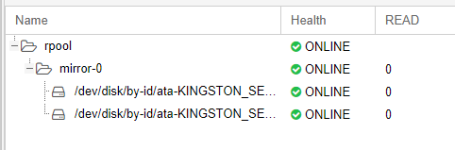

1) Latest Proxmox 6.3 installation on 2x Kingston DC450R rpool.

2) Dell server with 192G RAM, 24 CPUs, etc.; very fast in general.

3) Only a single VM running, with 96G RAM (the rest is left for ZFS so it can breathe): a classic LAMP server on CentOS 8, with things like ulimit set up to raise the open-file limits.

- It runs only 20 low-traffic WordPress websites. The VM is on the same rpool as the system.

4) Tried multiple times to play with ARC (then returned to defaults), and with writeback cache and discard.

5) zpool trim rpool is run daily on the Proxmox host; the drives are about 30% full.

Traffic is almost ZERO and the server is worth a few thousand dollars. I know the Kingstons are not Intel DC drives for 2,000 USD and are marketed as read-optimized, but I really expected that a couple of SSDs from 2021 would easily beat 10-year-old 5400RPM Seagates. The reality:

1) When running on the config above, the WordPress administration easily takes 10-20 seconds to load.

2) When I copy this website to an old-school server with 5400RPM Seagates, the WP Admin area loads in under 2s.

Did I really miss something when setting up the environment, or are 300$ SSDs labelled "DC" suitable just for Win10 and YouTube watching?

My guess is that trim does not get through the RAID and ZFS layers to wipe the empty cells, since I think it used to be faster at the start. But I have not found how to check that.
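A minimal sketch of how this could probably be checked on the Proxmox host, assuming OpenZFS 0.8 or newer (where `zpool status -t` and the `autotrim` pool property exist) and the pool name `rpool` from the list above. It only shows what ZFS itself reports about TRIM per device; it does not prove what the SSD firmware does with the freed cells.

```shell
#!/bin/sh
# Assumption: the pool is called "rpool", as in the setup described above.
POOL="${POOL:-rpool}"

if command -v zpool >/dev/null 2>&1; then
    # Is automatic TRIM enabled for the pool? (off by default)
    zpool get autotrim "$POOL"
    # Per-device TRIM state: trimmed / untrimmed / trim unsupported,
    # plus the time of the last completed manual "zpool trim".
    zpool status -t "$POOL"
else
    echo "zpool not found; run this on the Proxmox host"
fi
```

If a device shows as "trim unsupported" here, the discards are most likely not reaching the drive at all.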

Thank you, you will probably save my day.

zpool iostat 30

                  capacity     operations     bandwidth
    pool        alloc   free   read  write   read  write
    ----------  -----  -----  -----  -----  -----  -----
    rpool        244G   644G     57    136   739K  4.29M
    seagate      195G   269G      2      1   600K   291K
    ----------  -----  -----  -----  -----  -----  -----
    rpool        244G   644G      0    116  1.33K  3.44M
    seagate      195G   269G      0      0      0      0
    ----------  -----  -----  -----  -----  -----  -----
    rpool        244G   644G      0     85    409  2.02M
    seagate      195G   269G      0      0      0      0
    ----------  -----  -----  -----  -----  -----  -----
    rpool        244G   644G      0    114    409  2.87M
    seagate      195G   269G      0      0      0      0
    ----------  -----  -----  -----  -----  -----  -----
    rpool        244G   644G      0    104  2.27K  2.52M
    seagate      195G   269G      0      0      0      0
    ----------  -----  -----  -----  -----  -----  -----
    rpool        244G   644G      0    105    136  2.30M
    seagate      195G   269G      0      0      0      0
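As a rough reading of the rpool rows above, dividing write bandwidth by write operations gives the average size of one write. The sketch below assumes the `M` suffix means MiB per second; the helper name `avg_write_kib` is made up for illustration. Note that the first sample printed by `zpool iostat` is an average since boot, not a 30-second interval.

```shell
#!/bin/sh
# Average write size in KiB = write bandwidth (MiB/s) * 1024 / write ops per second.
# Input columns: write operations, write bandwidth in MiB/s (assumed binary units).
avg_write_kib() {
    awk '{ printf "%.1f\n", $2 * 1024 / $1 }'
}

# rpool rows copied from the zpool iostat output in this post.
avg_write_kib <<'EOF'
136 4.29
116 3.44
85 2.02
114 2.87
104 2.52
105 2.30
EOF
```

With these figures the writes come out at roughly 22 to 32 KiB each, at around 100 per second, even though the sites are nearly idle.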
