Hello everyone,

I have a 4-node Proxmox cluster.

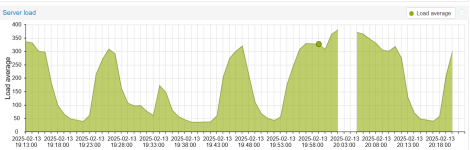

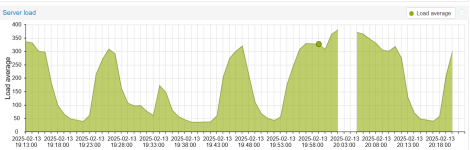

Over the last day, the load average on every server has risen excessively; it had been around 40-50. It now goes up to around 300, drops momentarily, and then rises again.

I checked which services are using resources, but I am not sure what to do next.

Below is the list of running processes from each node.

host1:

top - 20:06:35 up 5 days, 1:17, 1 user, load average: 124.34, 128.80, 109.11

Tasks: 1699 total, 18 running, 1681 sleeping, 0 stopped, 0 zombie

%Cpu(s): 33.6 us, 16.8 sy, 0.0 ni, 12.4 id, 0.7 wa, 0.0 hi, 7.6 si, 0.0 st

MiB Mem : 515865.6 total, 61195.2 free, 414942.9 used, 44703.2 buff/cache

MiB Swap: 8192.0 total, 8192.0 free, 0.0 used. 100922.8 avail Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

74599 root 20 0 17.3g 15.9g 9728 S 479.5 3.2 12d+7h kvm

192807 root 20 0 8706116 2.9g 25088 S 242.3 0.6 25,30 kvm

6799 root 20 0 3766108 2.1g 29184 S 101.6 0.4 4d+11h kvm

24 root 20 0 0 0 0 R 98.7 0.0 80:41.87 ksoftirqd/1

48 root 20 0 0 0 0 R 94.8 0.0 70:14.88 ksoftirqd/5

60 root 20 0 0 0 0 S 91.5 0.0 64:10.26 ksoftirqd/7

175281 ceph 20 0 4580048 3.0g 39424 S 89.9 0.6 11,33 ceph-osd

36 root 20 0 0 0 0 R 87.6 0.0 69:38.30 ksoftirqd/3

54 root 20 0 0 0 0 R 87.0 0.0 44:28.48 ksoftirqd/6

3979602 root 20 0 5211556 2.8g 27136 S 83.1 0.6 17,46 kvm

173999 ceph 20 0 4466756 2.9g 38400 S 70.7 0.6 9,09 ceph-osd

4015885 root 20 0 8474396 6.1g 26624 S 70.7 1.2 9,41 kvm

172816 ceph 20 0 4649496 3.0g 38912 S 66.8 0.6 9,13 ceph-osd

189327 root 20 0 4089224 2.0g 27648 S 65.1 0.4 6,32 kvm

16 root 20 0 0 0 0 S 59.0 0.0 52:21.47 ksoftirqd/0

35171 root 20 0 4326804 2.1g 27136 S 52.8 0.4 35,00 kvm

host2:

top - 20:06:55 up 17:02, 3 users, load average: 336.81, 340.48, 263.24

Tasks: 1441 total, 37 running, 1404 sleeping, 0 stopped, 0 zombie

%Cpu(s): 26.8 us, 23.7 sy, 0.0 ni, 14.7 id, 1.2 wa, 0.0 hi, 13.6 si, 0.0 st

MiB Mem : 515872.3 total, 65422.2 free, 408159.8 used, 47238.1 buff/cache

MiB Swap: 8192.0 total, 8192.0 free, 0.0 used. 107712.5 avail Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

14166 root 20 0 10.2g 8.1g 26880 S 121.7 1.6 8,29 kvm

1128637 root 20 0 11216 4032 2688 R 99.7 0.0 0:03.70 ps

42 root 20 0 0 0 0 R 94.4 0.0 11,37 ksoftirqd/4

234 root 20 0 0 0 0 R 91.1 0.0 208:58.70 ksoftirqd/36

246 root 20 0 0 0 0 R 89.8 0.0 6,21 ksoftirqd/38

66 root 20 0 0 0 0 R 75.0 0.0 201:04.67 ksoftirqd/8

210 root 20 0 0 0 0 R 65.5 0.0 160:47.38 ksoftirqd/32

2768 ceph 20 0 5245924 3.1g 38976 S 59.2 0.6 11,47 ceph-osd

2766 ceph 20 0 5095508 3.0g 38976 S 55.6 0.6 10,59 ceph-osd

162 root 20 0 0 0 0 R 54.6 0.0 9,36 ksoftirqd/24

186 root 20 0 0 0 0 S 47.7 0.0 181:43.11 ksoftirqd/28

2753 ceph 20 0 3463688 2.2g 39424 S 42.1 0.4 6,13 ceph-osd

2765 ceph 20 0 3528792 2.5g 38976 S 41.8 0.5 7,29 ceph-osd

16362 root 20 0 5403772 2.1g 26880 S 40.5 0.4 6,59 kvm

21318 root 20 0 5302988 2.1g 27328 S 36.8 0.4 6,45 kvm

2748 ceph 20 0 3440820 2.4g 38976 S 36.2 0.5 6,42 ceph-osd

host3:

top - 20:07:10 up 16:43, 1 user, load average: 71.51, 69.51, 63.13

Tasks: 1885 total, 6 running, 1879 sleeping, 0 stopped, 0 zombie

%Cpu(s): 25.6 us, 17.5 sy, 0.0 ni, 30.7 id, 0.5 wa, 0.0 hi, 3.9 si, 0.0 st

MiB Mem : 386839.6 total, 38315.4 free, 316422.8 used, 36177.3 buff/cache

MiB Swap: 8192.0 total, 1415.4 free, 6776.6 used. 70416.9 avail Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

67495 root 20 0 5405808 3.1g 21888 S 182.4 0.8 21,18 kvm

3959 ceph 20 0 4991516 2.6g 27072 S 127.4 0.7 17,39 ceph-osd

70434 root 20 0 10.6g 7.2g 21312 S 123.1 1.9 32,05 kvm

23600 root 20 0 4291716 2.1g 22464 S 97.1 0.6 12,52 kvm

3939 ceph 20 0 3413584 2.2g 27648 S 95.4 0.6 10,40 ceph-osd

46325 root 20 0 4285472 2.1g 21888 S 89.6 0.5 6,56 kvm

63986 root 20 0 4513784 2.1g 20160 S 71.7 0.6 9,44 kvm

710525 root 20 0 18.6g 12.6g 25344 S 69.1 3.3 34:54.61 kvm

3957 ceph 20 0 3321368 2.1g 26496 S 64.8 0.6 9,08 ceph-osd

9140 root 20 0 4279312 1.7g 22464 S 64.8 0.4 6,45 kvm

3952 ceph 20 0 3495888 2.1g 25344 S 60.9 0.5 9,55 ceph-osd

29836 root 20 0 5353376 1.5g 21888 S 60.9 0.4 358:25.66 kvm

3944 ceph 20 0 3459360 2.2g 25344 S 60.6 0.6 10,20 ceph-osd

431274 root 20 0 3998672 250708 24768 S 58.3 0.1 299:21.32 kvm

13843 root 20 0 3566008 2.0g 21888 S 57.0 0.5 10,20 kvm

64685 root 20 0 5406932 3.1g 20736 S 53.7 0.8 9,12 kvm

host4:

top - 20:07:24 up 5 days, 5:05, 1 user, load average: 215.26, 197.52, 164.83

Tasks: 1620 total, 12 running, 1608 sleeping, 0 stopped, 0 zombie

%Cpu(s): 38.6 us, 11.9 sy, 0.0 ni, 8.0 id, 0.5 wa, 0.0 hi, 5.1 si, 0.0 st

MiB Mem : 515855.2 total, 78871.5 free, 414473.9 used, 26271.3 buff/cache

MiB Swap: 8192.0 total, 8192.0 free, 0.0 used. 101381.2 avail Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

3257143 root 20 0 10.4g 7.5g 25344 S 420.5 1.5 47,09 kvm

12306 root 20 0 18.3g 16.1g 26752 S 222.1 3.2 18,18 kvm

4000720 root 20 0 4666804 1.6g 22528 S 181.8 0.3 39:48.28 kvm

3257591 root 20 0 5212584 2.8g 26048 S 130.5 0.6 22,25 kvm

3879666 root 20 0 6760764 4.2g 27456 S 122.7 0.8 180:51.11 kvm

445967 ceph 20 0 4907020 3.1g 38016 S 121.4 0.6 76,23 ceph-osd

13637 root 20 0 4623760 3.1g 26048 S 113.0 0.6 56,37 kvm

2660847 root 20 0 4245096 2.1g 24640 S 110.1 0.4 45,23 kvm

32162 root 20 0 4171708 1.0g 26048 S 106.5 0.2 49,38 kvm

31854 root 20 0 5531336 3.2g 26048 S 105.2 0.6 5d+7h kvm

38101 root 20 0 4350572 2.1g 26752 S 105.2 0.4 5d+6h kvm

34074 root 20 0 3618340 1.3g 25344 S 104.9 0.3 5d+6h kvm

3266982 root 20 0 3557392 2.0g 26048 S 104.5 0.4 16,34 kvm

19744 root 20 0 3704808 2.1g 27456 S 101.3 0.4 5d+6h kvm

61403 root 20 0 4352644 2.1g 25344 S 100.0 0.4 5d+5h kvm

26263 root 20 0 3767140 2.1g 26048 S 98.7 0.4 78,05 kvm
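To compare the four nodes at a glance, the summary lines above can be parsed with a short script. This is only a rough sketch: the input strings are copied from the top output above, while the names `parse_summary` and `rank_by_load` and the dictionary layout are made up for illustration.

```python
import re

# Summary lines copied from the top output above, keyed by host label.
# Each entry holds the "top -" line and the "%Cpu(s)" line for that host.
TOP_SUMMARIES = {
    "host1": ("top - 20:06:35 up 5 days, 1:17, 1 user, load average: 124.34, 128.80, 109.11",
              "%Cpu(s): 33.6 us, 16.8 sy, 0.0 ni, 12.4 id, 0.7 wa, 0.0 hi, 7.6 si, 0.0 st"),
    "host2": ("top - 20:06:55 up 17:02, 3 users, load average: 336.81, 340.48, 263.24",
              "%Cpu(s): 26.8 us, 23.7 sy, 0.0 ni, 14.7 id, 1.2 wa, 0.0 hi, 13.6 si, 0.0 st"),
    "host3": ("top - 20:07:10 up 16:43, 1 user, load average: 71.51, 69.51, 63.13",
              "%Cpu(s): 25.6 us, 17.5 sy, 0.0 ni, 30.7 id, 0.5 wa, 0.0 hi, 3.9 si, 0.0 st"),
    "host4": ("top - 20:07:24 up 5 days, 5:05, 1 user, load average: 215.26, 197.52, 164.83",
              "%Cpu(s): 38.6 us, 11.9 sy, 0.0 ni, 8.0 id, 0.5 wa, 0.0 hi, 5.1 si, 0.0 st"),
}

LOAD_RE = re.compile(r"load average:\s*([\d.]+),\s*([\d.]+),\s*([\d.]+)")
SOFTIRQ_RE = re.compile(r"([\d.]+)\s+si\b")


def parse_summary(top_line, cpu_line):
    """Return (1-minute load, softirq CPU percentage) from two top header lines."""
    load = LOAD_RE.search(top_line)
    softirq = SOFTIRQ_RE.search(cpu_line)
    if load is None or softirq is None:
        raise ValueError("unexpected top header format")
    return float(load.group(1)), float(softirq.group(1))


def rank_by_load(summaries):
    """Return (host, load1, softirq_pct) tuples, highest 1-minute load first."""
    rows = [(host, *parse_summary(*lines)) for host, lines in summaries.items()]
    return sorted(rows, key=lambda row: row[1], reverse=True)


if __name__ == "__main__":
    for host, load1, softirq_pct in rank_by_load(TOP_SUMMARIES):
        print(f"{host}: load1={load1:.2f} softirq={softirq_pct:.1f}%")
```

Sorted this way, the node with the highest load is also the one with the highest `si` (softirq) percentage, which matches the many ksoftirqd threads in its process list.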

Processes using the most CPU:

root@cmt6770:~# ps -eo pid,ppid,cmd,%mem,%cpu --sort=-%cpu | head -20

PID PPID CMD %MEM %CPU

1132978 230099 python3 /sbin/ifquery -a -c 0.0 141

2768 1 /usr/bin/ceph-osd -f --clus 0.5 69.2

42 2 [ksoftirqd/4] 0.0 68.1

2766 1 /usr/bin/ceph-osd -f --clus 0.6 64.5

294 2 [ksoftirqd/46] 0.0 62.3

162 2 [ksoftirqd/24] 0.0 56.3

1132862 833829 /usr/bin/rbd -p DataStore - 0.0 53.9

14166 1 /usr/bin/kvm -id 14950 -nam 1.6 50.1

19747 1 /usr/bin/kvm -id 15320 -nam 0.1 49.0

6263 1 /usr/bin/kvm -id 12564 -nam 0.3 44.2

2765 1 /usr/bin/ceph-osd -f --clus 0.4 44.0

16362 1 /usr/bin/kvm -id 14990 -nam 0.4 41.0

21318 1 /usr/bin/kvm -id 15439 -nam 0.4 39.7

2748 1 /usr/bin/ceph-osd -f --clus 0.4 39.4

2759 1 /usr/bin/ceph-osd -f --clus 0.4 38.1

246 2 [ksoftirqd/38] 0.0 37.5

114 2 [ksoftirqd/16] 0.0 37.1

222 2 [ksoftirqd/34] 0.0 36.7

2753 1 /usr/bin/ceph-osd -f --clus 0.4 36.5
