Since there is currently no official support for rotating drives, I asked in this thread for some guidance, and I want to share my current setup and scripts so that other people can find them here.

My Setup:

- 4x 20TB SATA HDDs that I take home each week (these can be replaced with, for example, more or fewer USB drives)

- Proxmox Backup Server with a hot-plug SATA slot

- Webhooks to Discord (can obviously be replaced with other platforms or e-mail notifications)

- The drives are colour-coded (Red, Green, Yellow, Blue) so that I know which drive is currently plugged in and which drive I will bring to work for the next backup cycle

- Only one drive is connected to the server at a time; the others are stored offsite

- Backups run from Monday to Friday

- The unmount script ejects the drive on Friday at 12:30pm, and I swap the disk some time between then and Monday 12:30pm, when the mount script runs
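If you do not want to rely on the colour coding alone, the weekly rotation can also be worked out by a small helper. This is only a sketch and not part of my setup: the function name `drive_for_epoch` and the fixed order Red, Green, Yellow, Blue are assumptions for illustration.

```shell
#!/bin/bash
# Hypothetical helper, not part of the original setup: print which colour-coded
# drive is due for the week containing the given Unix timestamp.
# Assumption: the drives rotate in the fixed order Red, Green, Yellow, Blue.
DRIVES=(Red Green Yellow Blue)

drive_for_epoch() {
    local epoch="$1"
    # The Unix epoch started on a Thursday; adding 3 days moves the
    # week boundaries to Monday 00:00 UTC.
    local week=$(( (epoch + 3 * 86400) / 604800 ))
    # Cycle through the drive list, one drive per week
    echo "${DRIVES[week % ${#DRIVES[@]}]}"
}
```

Calling `drive_for_epoch "$(date +%s)"` would print the colour for the current week. Counting weeks from the epoch instead of using the calendar week number keeps the rotation from skipping a drive at the turn of the year.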

HowTo:

1. I plugged in the disks one at a time

2. For each disk, I created a ZFS pool and a datastore in the Proxmox Backup Server GUI:

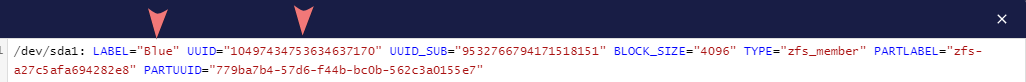

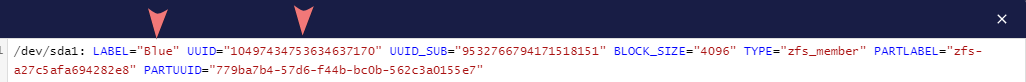

3. While a disk is connected (e.g. "Blue"), I run blkid and copy the UUID of that disk.

4. Create these two shell scripts and replace the UUIDs, datastore names and the webhook URL (both scripts are AI-generated):

Code (mount_disks.sh):

#!/bin/bash
export PATH="/usr/sbin:/usr/bin:/sbin:/bin"

# Discord Webhook URL, should be replaced
WEBHOOK_URL="https://discord.com/api/webhooks/---------------"

# Function to send a message to Discord
send_discord_notification() {
    local message="$1"
    curl -H "Content-Type: application/json" \
        -X POST \
        -d "{\"content\": \"$message\"}" \
        "$WEBHOOK_URL"
}

# Define your ZFS pool and UUID mappings
declare -A ZFS_POOLS
ZFS_POOLS=(
    ["10497434753634637170"]="Blue"   # ["UUID"]="Datastore Name"
    ["14156652453457838743"]="Red"
    ["6857873780926006489"]="Green"
    ["8016966771343686250"]="Yellow"
)

# Initialize a message variable
NOTIFICATION=""

# Loop through the UUIDs to find the plugged-in disk
for UUID in "${!ZFS_POOLS[@]}"; do
    DEVICE=$(blkid -o device -t UUID="$UUID")
    if [ -n "$DEVICE" ]; then
        ZPOOL="${ZFS_POOLS[$UUID]}"
        NOTIFICATION="Detected disk for ZFS pool: $ZPOOL."

        # Import the ZFS pool
        if zpool import "$ZPOOL"; then
            NOTIFICATION="ZFS pool $ZPOOL successfully imported."
        else
            NOTIFICATION="Error: Failed to import ZFS pool $ZPOOL. It might already be imported or there could be an issue."
            send_discord_notification "$NOTIFICATION"
            exit 1
        fi

        # Remove maintenance mode from the datastore
        if proxmox-backup-manager datastore update "$ZPOOL" --delete maintenance-mode; then
            NOTIFICATION="Maintenance mode for $ZPOOL has been removed and the datastore is ready to be used."
        else
            NOTIFICATION="Error: Failed to remove maintenance mode for datastore $ZPOOL."
            send_discord_notification "$NOTIFICATION"
            exit 1
        fi

        # Exit after processing the first detected disk
        send_discord_notification "$NOTIFICATION"
        exit 0
    fi
done

# If no disks were detected
NOTIFICATION="No known ZFS pool disks detected. Nothing to do."
send_discord_notification "$NOTIFICATION"

Code (unmount_disks.sh):

#!/bin/bash
export PATH="/usr/sbin:/usr/bin:/sbin:/bin"

# Discord Webhook URL, should be replaced
WEBHOOK_URL="https://discord.com/api/webhooks/---------------------------"

# Function to send a message to Discord, with JSON escaping
send_discord_notification() {
    local message="$1"
    # Escape special characters in the message for JSON (printf avoids a trailing newline)
    message=$(printf '%s' "$message" | python3 -c 'import json,sys; print(json.dumps(sys.stdin.read()))')
    # Send the escaped message to Discord
    curl -H "Content-Type: application/json" \
        -X POST \
        -d "{\"content\": $message}" \
        "$WEBHOOK_URL"
}

# Define your ZFS pool and UUID mappings
declare -A ZFS_POOLS
ZFS_POOLS=(
    ["10497434753634637170"]="Blue"   # ["UUID"]="Datastore Name"
    ["14156652453457838743"]="Red"
    ["6857873780926006489"]="Green"
    ["8016966771343686250"]="Yellow"
)

# Initialize a message variable
NOTIFICATION=""

# Loop through the UUIDs to find the mounted disk
for UUID in "${!ZFS_POOLS[@]}"; do
    DEVICE=$(blkid -o device -t UUID="$UUID")
    if [ -n "$DEVICE" ]; then
        ZPOOL="${ZFS_POOLS[$UUID]}"
        MOUNT_POINT="/mnt/datastore/$ZPOOL"
        NOTIFICATION="Detected disk for ZFS pool: $ZPOOL."

        # Put the datastore in maintenance mode (set it offline)
        if proxmox-backup-manager datastore update "$ZPOOL" --maintenance-mode type=offline; then
            NOTIFICATION="Datastore $ZPOOL put in maintenance mode."
        else
            NOTIFICATION="Error: Failed to put datastore $ZPOOL in maintenance mode."
            send_discord_notification "$NOTIFICATION"
            exit 1
        fi

        # Sleep to ensure commands are fully applied
        sleep 2

        # Stop Proxmox Backup services to ensure they aren't holding the pool open
        systemctl stop proxmox-backup proxmox-backup-proxy

        # Remove the .lock file
        LOCK_FILE="$MOUNT_POINT/.lock"
        if [ -f "$LOCK_FILE" ]; then
            rm -f "$LOCK_FILE"
            NOTIFICATION=".lock file removed for $ZPOOL."
        fi

        # Wait until no process is using the mount point (up to 10 tries, 2 seconds apart).
        # fuser -m reports processes using any file on that filesystem.
        tries=10
        delay=2
        while [ "$tries" -gt 0 ] && fuser -m "$MOUNT_POINT" > /dev/null 2>&1; do
            sleep "$delay"
            tries=$((tries-1))
        done

        # Export the pool with a timeout and check whether it succeeded
        if timeout 30s zpool export -f "$ZPOOL"; then
            NOTIFICATION="ZFS pool $ZPOOL exported successfully. Powering down the disk..."
            if hdparm -Y "$DEVICE"; then
                NOTIFICATION="The disk for ZFS pool $ZPOOL has been powered down. You can now safely unplug it."
            else
                NOTIFICATION="Error: Failed to power down the disk for $ZPOOL."
            fi
        else
            # Additional diagnostics to see why the pool is busy
            BUSY_PROCESSES=$(lsof +D "$MOUNT_POINT")
            ZFS_STATUS=$(zpool status "$ZPOOL")
            # printf turns \n into real newlines, which the JSON escaping then encodes correctly
            NOTIFICATION=$(printf 'Error: Failed to export the ZFS pool %s. The pool may still be in use.\n\nBusy Processes:\n%s\n\nZFS Pool Status:\n%s' "$ZPOOL" "$BUSY_PROCESSES" "$ZFS_STATUS")
        fi

        # Start the Proxmox Backup services again
        systemctl start proxmox-backup proxmox-backup-proxy
        send_discord_notification "$NOTIFICATION"
        exit 0
    fi
done

# If no disks were detected
NOTIFICATION="No known ZFS pool disks detected. Nothing to do."
send_discord_notification "$NOTIFICATION"

5. Create cronjobs for both scripts:

crontab -e

30 12 * * 1 /root/mount_disks.sh #Monday, 12:30pm

30 12 * * 5 /root/unmount_disks.sh #Friday, 12:30pm

6. Set all datastores to maintenance mode (offline)
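Steps 3 and 4 involve copying a UUID by hand for every disk. As a rough sketch, the mapping lines for the `ZFS_POOLS` array could instead be generated from the `blkid` output. The function name `blkid_to_pool_map` is made up for this example, and it assumes that `blkid` prints `LABEL` (the pool name), `UUID` and `TYPE="zfs_member"` on one line per device, and that the datastore has the same name as the pool.

```shell
#!/bin/bash
# Hypothetical helper: read `blkid` output on stdin and print one
# ["UUID"]="Label" line per ZFS member device.
blkid_to_pool_map() {
    local line uuid label
    # The leading space keeps UUID= from matching PARTUUID= and
    # LABEL= from matching PARTLABEL=.
    local uuid_re=' UUID="([^"]+)"'
    local label_re=' LABEL="([^"]+)"'
    while IFS= read -r line; do
        # Skip everything that is not a ZFS member device
        [[ $line == *'TYPE="zfs_member"'* ]] || continue
        [[ $line =~ $uuid_re ]] || continue
        uuid="${BASH_REMATCH[1]}"
        [[ $line =~ $label_re ]] || continue
        label="${BASH_REMATCH[1]}"
        printf '["%s"]="%s"\n' "$uuid" "$label"
    done
}
```

With a disk plugged in, `blkid | blkid_to_pool_map` should print a line that can be pasted into both scripts. Check the result against the datastore name in the GUI before relying on it.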

After all those steps, the following should happen:

- when the mount_disks.sh script runs, it will

  - check which drive is plugged in

  - import the ZFS pool

  - remove the maintenance mode

  - post a notification on Discord saying whether it was successful, or the error message

- when the unmount_disks.sh script runs, it will

  - set the datastore to offline

  - stop the Proxmox Backup services (so don't run the unmount_disks.sh script through the WebUI, it will fail! Only run it through a cronjob or via SSH)

  - remove the .lock file

  - check if any process is using the mountpoint

  - export the ZFS pool

  - spin down the drive

  - start the Proxmox Backup services again
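The "check if any process is using the mountpoint" step is a retry loop: check, wait a bit, check again, and give up after a fixed number of attempts. Pulled out into a generic function it could look like the sketch below. The name `retry_until` and its argument order are my own choice for illustration and are not taken from the scripts.

```shell
#!/bin/bash
# Hypothetical helper: run a command until it succeeds.
# Usage: retry_until <tries> <delay-seconds> <command> [args...]
# Returns 0 as soon as the command succeeds, 1 if every attempt failed.
retry_until() {
    local tries="$1" delay="$2"
    shift 2
    while [ "$tries" -gt 0 ]; do
        if "$@"; then
            return 0
        fi
        tries=$((tries - 1))
        # Only wait if another attempt will follow
        if [ "$tries" -gt 0 ]; then
            sleep "$delay"
        fi
    done
    return 1
}
```

In the unmount script this would be called as something like `retry_until 10 2 mount_point_is_free "$MOUNT_POINT"`, where `mount_point_is_free` is a small wrapper (also hypothetical) that returns success when `fuser` finds no process on the mount point.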

In my case, I sync all backups from my first Proxmox Backup Server to my second Proxmox Backup Server with "Transfer Last" set to 1, so only the latest backup is transferred.

My prune job keeps only the last 4 backups, so that at the end of the week I only have backups from the current week, and garbage collection runs 1 hour after the prune job.
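To make the effect of keeping the last 4 backups concrete, here is a tiny illustration. It only mimics the selection on a list of timestamps and is not how Proxmox Backup Server implements pruning. The function name `keep_newest` and the snapshot times in the usage example are made up.

```shell
#!/bin/bash
# Illustration only: read snapshot timestamps (ISO 8601, one per line)
# on stdin and print the <count> newest ones, newest first.
keep_newest() {
    local count="$1"
    # ISO 8601 timestamps sort chronologically as plain strings,
    # so a reverse sort puts the newest snapshot first.
    sort -r | head -n "$count"
}
```

For example, `printf '%s\n' 2024-06-03T12:45:00Z 2024-06-04T12:45:00Z 2024-06-05T12:45:00Z 2024-06-06T12:45:00Z 2024-06-07T12:45:00Z | keep_newest 4` keeps the four snapshots from 4 to 7 June and drops the oldest one. The dropped snapshot only frees disk space once garbage collection has run, which is why that job is scheduled after the prune job.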

Note: I'm short on time today, I will fix spelling mistakes at a later point