
It seems you already have that accomplished. Can you provide the output of:

```
lsblk
pvs
vgs
lvs
```

Blockbridge : Ultra low latency all-NVME shared storage for Proxmox - https://www.blockbridge.com/proxmox


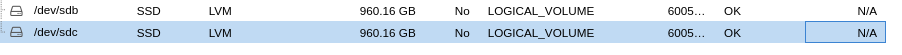

```
# lsblk
sdb                               8:16   0 894.2G  0 disk
├─ssd2r0-ssd2r0_tmeta           253:9    0   8.9G  0 lvm
│ └─ssd2r0-ssd2r0-tpool         253:15   0 876.2G  0 lvm
│   ├─ssd2r0-ssd2r0             253:16   0 876.2G  1 lvm
│   ├─ssd2r0-vm--111--disk--0   253:17   0     8G  0 lvm
│   └─ssd2r0-vm--124--disk--0   253:18   0    32G  0 lvm
└─ssd2r0-ssd2r0_tdata           253:10   0 876.2G  0 lvm
  └─ssd2r0-ssd2r0-tpool         253:15   0 876.2G  0 lvm
    ├─ssd2r0-ssd2r0             253:16   0 876.2G  1 lvm
    ├─ssd2r0-vm--1--disk--0     253:17   0     8G  0 lvm
    └─ssd2r0-vm--12--disk--0    253:18   0    32G  0 lvm
sdc                               8:32   0 894.2G  0 disk

# pvs
PV       VG     Fmt  Attr PSize    PFree
/dev/sdb ssd2r0 lvm2 a--  <894.22g  124.00m
/dev/sdc ssd2r0 lvm2 a--  <894.22g <894.22g

# vgs
VG     #PV #LV #SN Attr   VSize  VFree
ssd2r0   2   3   0 wz--n- <1.75t <894.34g

# lvs
ssd2r0       ssd2r0 twi-aotz-- 876.21g        1.64  0.27
vm-1-disk-0  ssd2r0 Vwi-aotz--   8.00g ssd2r0 45.25
vm-12-disk-0 ssd2r0 Vwi-aotz--  32.00g ssd2r0 33.70
```

I assume you want to extend the /dev/ssd2r0/ssd2r0 thin pool to span both disks?

The thin pool can be extended just like a regular LV using lvresize.

```
# Add 300G to the thinpool
lvresize -L +300G volumegroup/thinpool

# Add all free space to the thinpool
lvresize -l +100%FREE volumegroup/thinpool
```

For testing, I used this:

```
# Create a 4G thinpool called ThinPoolLV on volume group pve
root@pve-06:~# lvcreate --type thin-pool -L 4G -n ThinPoolLV pve
Thin pool volume with chunk size 64.00 KiB can address at most <15.88 TiB of d
Logical volume "ThinPoolLV" created.

# Resize the thinpool to use all available space
root@pve-06:~# lvresize /dev/pve/ThinPoolLV -l+100%FREE
Size of logical volume pve/ThinPoolLV_tmeta changed from 4.00 MiB (1 extents)
Size of logical volume pve/ThinPoolLV_tdata changed from 4.00 GiB (1024 extent
Logical volume pve/ThinPoolLV successfully resized.

# Check size of the thinpool
root@pve-06:~# lvs pve/ThinPoolLV
LV         VG  Attr       LSize  Pool Origin Data%  Meta%  Move Log Cpy%Sync Convert
ThinPoolLV pve twi-a-tz-- <8.00g             0.00   10.79
```

IMPORTANT NOTE: This provides NO protection from over-provisioning.

More information on the man page: https://man7.org/linux/man-pages/man7/lvmthin.7.html

EDIT: As @bbgeek17 has pointed out ('It seems you already have that accomplished'), the volume group already spans both disks, so the thin pool can grow into the free space on the second disk. Note that it only does this automatically if thin pool autoextension is enabled in lvm.conf (thin_pool_autoextend_threshold below 100, which is not the default); otherwise extend it with lvresize as shown above.
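Since nothing stops a thin pool from being over-provisioned, it is worth keeping an eye on its Data% and Meta% columns. A minimal sketch, assuming you feed it the output of `lvs --noheadings -o lv_name,vg_name,lv_attr,data_percent,metadata_percent`; the helper name `thin_usage_alert` and the 80% threshold are illustrative only, not part of LVM or Proxmox:

```shell
#!/bin/sh
# thin_usage_alert THRESHOLD  (hypothetical helper, not an LVM command)
# Reads lines of "lv vg attr data% meta%" on stdin and prints a warning for
# every thin pool (lv_attr starting with "t") whose data or metadata usage
# is at or above THRESHOLD percent. Thin volumes ("V...") are skipped.
thin_usage_alert() {
  awk -v t="$1" '
    $3 ~ /^t/ && ($4 + 0 >= t + 0 || $5 + 0 >= t + 0) {
      printf "WARNING: %s/%s data=%s%% meta=%s%%\n", $2, $1, $4, $5
    }'
}

# Example with the pool from this thread (1.64% data, 0.27% meta): no output.
printf '  ssd2r0 ssd2r0 twi-aotz-- 1.64 0.27\n  vm-1-disk-0 ssd2r0 Vwi-aotz-- 45.25\n' |
  thin_usage_alert 80

# On a real host (not run here):
# lvs --noheadings -o lv_name,vg_name,lv_attr,data_percent,metadata_percent |
#   thin_usage_alert 80
```

Run from cron or a monitoring agent, this gives an early warning before a pool fills up; it does not prevent over-provisioning by itself.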

Thanks,

```
lvresize -l +100%FREE ssd2r0/ssd2r0
Size of logical volume ssd2r0/ssd2r0_tdata changed from 876.21 GiB (224311 extents) to <1.73 TiB (453262 extents).
Logical volume ssd2r0/ssd2r0_tdata successfully resized.
```

Working well, without losing data.
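The sizes in that output can be checked by hand, since LVM counts space in physical extents. A small sketch, assuming the volume group uses LVM's default 4 MiB extent size (check yours with `vgs -o vg_name,vg_extent_size`); `extents_to_size` is a hypothetical helper name:

```shell
#!/bin/sh
# extents_to_size EXTENTS [EXTENT_MIB]  (hypothetical helper)
# Converts an LVM extent count into GiB and TiB.
# ASSUMPTION: 4 MiB physical extents (the LVM default) unless a second
# argument is given; check with: vgs -o vg_name,vg_extent_size
extents_to_size() {
  awk -v e="$1" -v s="${2:-4}" 'BEGIN {
    mib = e * s
    printf "%d extents = %.2f GiB = %.2f TiB\n", e, mib / 1024, mib / 1048576
  }'
}

extents_to_size 224311   # old ssd2r0_tdata size
extents_to_size 453262   # new ssd2r0_tdata size
```

This prints 876.21 GiB for the old size and 1770.55 GiB (about 1.729 TiB, hence LVM's "<1.73 TiB") for the new one.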

Do I need to set the metadata size for the 1.73 TiB pool? Restoring a 1 TB backup takes too long. If the metadata is not the cause, what else could make the restore slow, e.g. /tmp being too small?

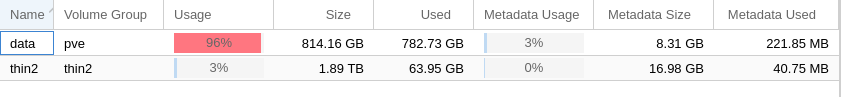

Strange

```
lvs -a -o +devices
[lvol0_pmspare] thin2 ewi-------  15.81g                   /dev/sdb(0)
thin2           thin2 twi-aot---  <1.72t  3.39 0.24        thin2_tdata(0)
[thin2_tdata]   thin2 Twi-ao----  <1.72t                   /dev/sdc(0)
[thin2_tdata]   thin2 Twi-ao----  <1.72t                   /dev/sdb(8096)
[thin2_tmeta]   thin2 ewi-ao----  15.81g                   /dev/sdb(4048)
```
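The devices column shows where each hidden sub-volume is physically allocated. A short awk sketch that groups those devices per LV makes it easier to read (the function name `pv_map` is made up for illustration): here `thin2_tdata` spans both /dev/sdc and /dev/sdb, while `thin2_tmeta` and the metadata spare `lvol0_pmspare` both sit on /dev/sdb.

```shell
#!/bin/sh
# pv_map (hypothetical helper): reads `lvs -a -o +devices` style lines on stdin
# and prints, per LV, the distinct physical devices it is allocated on.
pv_map() {
  awk '{
    lv = $1
    gsub(/\[/, "", lv); gsub(/\]/, "", lv)   # hidden LVs are shown as [name]
    for (i = 2; i <= NF; i++) {
      if ($i !~ /^\/dev\//) continue         # only real PVs, e.g. /dev/sdb(8096)
      dev = $i
      sub(/\(.*$/, "", dev)                  # "/dev/sdb(8096)" -> "/dev/sdb"
      if (!(lv in devs)) order[++n] = lv     # remember first-seen order
      if (!((lv, dev) in seen)) {
        seen[lv, dev] = 1
        devs[lv] = devs[lv] " " dev
      }
    }
  }
  END { for (k = 1; k <= n; k++) print order[k] ":" devs[order[k]] }'
}

pv_map <<'EOF'
[lvol0_pmspare] thin2 ewi------- 15.81g /dev/sdb(0)
thin2 thin2 twi-aot--- <1.72t 3.39 0.24 thin2_tdata(0)
[thin2_tdata] thin2 Twi-ao---- <1.72t /dev/sdc(0)
[thin2_tdata] thin2 Twi-ao---- <1.72t /dev/sdb(8096)
[thin2_tmeta] thin2 ewi-ao---- 15.81g /dev/sdb(4048)
EOF
```

The pool line itself (`thin2`) has no /dev entry because it only points at its `thin2_tdata` sub-volume, so it is skipped.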


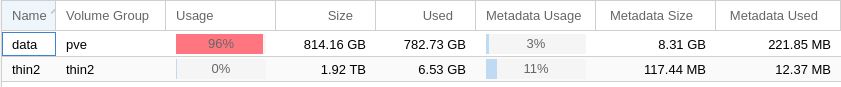

Your Metadata does seem exceeding large and will likely cover you for many Petabytes in the future.

I am unsure if large Metadata causes extreme slowdown.

If in doubt, back up your VMs/Containers (as well as anything else you may have added to the physical volumes) and recreate the storage from scratch.

You can either wipe the disks from the WebUI and create from there or use LVM commands and add to proxmox via the WebUI afterwards.

If you are still confident you want 100%FREE and have no need for over-provisioning safety, something along the likes of:

Code:

# WARNING: BACK UP EVERYTHING IN THE THINPOOL BEFORE CONTINUING

lvremove /dev/volumegroup/thinpool

lvcreate --type thin-pool -l 100%FREE -n thinpool volumegroupLVM 'should' create a Metadata size more suited to the capacity of your drives.

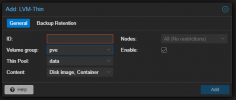

In the Proxmox WebUI > Storage > Add Storage > LVM-Thin

Choose a name for the ID and select the volume group and thin pool and it will be added for use in Proxmox.

* Also note - the thinpool is not a stripe/mirror set and will not increase your read/write speeds - it should be considered a JBOD
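How much metadata a thin pool needs depends mainly on how many chunks it has to map, so the chunk size matters as much as the pool size. A rough sketch, assuming about 64 bytes of metadata per data chunk and a 2 MiB minimum (both approximations; LVM's own calculation, or the `thin_metadata_size` tool from thin-provisioning-tools, may give different figures). It does reproduce the 4.00 MiB `ThinPoolLV_tmeta` of the 4G, 64 KiB-chunk test pool earlier in this thread; `estimate_tmeta_mib` is a hypothetical helper name:

```shell
#!/bin/sh
# estimate_tmeta_mib DATA_MIB CHUNK_KIB  (hypothetical helper)
# Rough thin-pool metadata estimate. ASSUMPTIONS: ~64 bytes of metadata per
# data chunk and a 2 MiB minimum; LVM's exact calculation may differ.
estimate_tmeta_mib() {
  awk -v d="$1" -v c="$2" 'BEGIN {
    chunks = d * 1024 / c           # data chunks in the pool
    mib = chunks * 64 / 1048576     # ~64 bytes per chunk, in MiB
    if (mib < 2) mib = 2            # assumed lower bound
    printf "%.2f\n", mib
  }'
}

estimate_tmeta_mib 4096 64         # 4 GiB pool, 64 KiB chunks     -> 4.00
estimate_tmeta_mib 1835008 64      # ~1.75 TiB pool, 64 KiB chunks -> 1792.00
estimate_tmeta_mib 1835008 1024    # ~1.75 TiB pool, 1 MiB chunks  -> 112.00
```

By this estimate a ~1.75 TiB pool would want about 1.75 GiB of metadata with 64 KiB chunks and only about 112 MiB with 1 MiB chunks; both are far below the 15.81g allocated above.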

Thanks,

Is it normal that the metadata of data/pve takes 8.31G while that of the 1.92T pool takes only 117.44M?

I used this (it seems faster):

```
# lvcreate --type thin-pool -l 100%FREE -Zn thin2/thin2
Thin pool volume with chunk size 1.00 MiB can address at most 253.00 TiB of data.
Logical volume "thin2" created.
```

Without -Zn, LVM warns:

```
# lvcreate --type thin-pool -l 100%FREE -n thin2 thin2
Thin pool volume with chunk size 1.00 MiB can address at most 253.00 TiB of data.
WARNING: Pool zeroing and 1.00 MiB large chunk size slows down thin provisioning.
WARNING: Consider disabling zeroing (-Zn) or using smaller chunk size (<512.00 KiB).
Logical volume "thin2" created.
```

Is there a way to make it faster?
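The 1.00 MiB chunk size in that output is probably not arbitrary. My understanding (an assumption, not verified against the LVM source; see `thin_pool_chunk_size_policy` in lvm.conf and the `--chunksize` description in `man lvcreate`) is that, when neither a chunk size nor a metadata size is given, LVM starts at 64 KiB and doubles the chunk size until the estimated metadata fits in about 128 MiB. A sketch of that loop, using a rough 64-bytes-per-chunk estimate; `pick_chunk_kib` is a hypothetical helper name:

```shell
#!/bin/sh
# pick_chunk_kib DATA_MIB [TARGET_META_MIB]  (hypothetical helper)
# ASSUMED policy: start at 64 KiB and double the chunk size until the
# estimated metadata (~64 bytes per chunk) is <= the target (default 128 MiB),
# never going above the 1 GiB (1048576 KiB) maximum chunk size.
pick_chunk_kib() {
  awk -v d="$1" -v target="${2:-128}" 'BEGIN {
    chunk = 64
    while (chunk < 1048576 && (d * 1024 / chunk) * 64 / 1048576 > target + 0)
      chunk *= 2
    print chunk
  }'
}

pick_chunk_kib 4096      # 4 GiB test pool -> 64 (KiB), as in the 4G test above
pick_chunk_kib 1835008   # ~1.75 TiB pool  -> 1024 (KiB) = 1 MiB
```

At 1 MiB chunks the ~1.75 TiB pool needs roughly 112 MiB of metadata. 112 MiB is exactly 117.44 MB in decimal units, which would match the 117.44M reported above if that figure is shown in decimal megabytes.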


Defaults from /etc/lvm/lvm.conf:

```
# Configuration option allocation/wipe_signatures_when_zeroing_new_lvs.
# Look for and erase any signatures while zeroing a new LV.
# The --wipesignatures option overrides this setting.
# Zeroing is controlled by the -Z/--zero option, and if not specified,
# zeroing is used by default if possible. Zeroing simply overwrites the
# first 4KiB of a new LV with zeroes and does no signature detection or
# wiping. Signature wiping goes beyond zeroing and detects exact types
# and positions of signatures within the whole LV. It provides a
# cleaner LV after creation as all known signatures are wiped. The LV
# is not claimed incorrectly by other tools because of old signatures
# from previous use. The number of signatures that LVM can detect
# depends on the detection code that is selected (see
# use_blkid_wiping.) Wiping each detected signature must be confirmed.
# When this setting is disabled, signatures on new LVs are not detected
# or erased unless the --wipesignatures option is used directly.
# This configuration option has an automatic default value.
# wipe_signatures_when_zeroing_new_lvs = 1
```

Also:

```
# Configuration option allocation/thin_pool_zero.
# Thin pool data chunks are zeroed before they are first used.
# Zeroing with a larger thin pool chunk size reduces performance.
# This configuration option has an automatic default value.
# thin_pool_zero = 1
```

This is also something I have never experienced on a default Proxmox install:

```
WARNING: Pool zeroing and 1.00 MiB large chunk size slows down thin provisioning.
WARNING: Consider disabling zeroing (-Zn) or using smaller chunk size (<512.00 KiB).
```

LVM has always "Just Worked" in all of my server installs (Proxmox or other Linux), and it is also odd that the default data pool has such large metadata while LVM sets a 1 MiB chunk size.

Is this a bare metal/hybrid/custom install?

Thanks,

It's bare metal.

About the chunk size: the Proxmox installer creates a 256 KiB chunk size by default in version 5.x, and 64 KiB in version 6.x.

It seems that Debian 10 creates 512 KiB by default.

It is not very critical, but you can change the chunk size with the -c option, for example:

```
lvconvert --type thin-pool -c 64K pve/data
```
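Why a large chunk size hurts when zeroing is enabled: as far as I understand dm-thin, with zeroing on, the first write into a not-yet-provisioned chunk makes the pool zero that whole chunk, unless the write already covers the entire chunk. A rough sketch of that cost for a single small write (a simplification that ignores writes spanning several chunks; `zeroing_cost` is a hypothetical helper name):

```shell
#!/bin/sh
# zeroing_cost WRITE_KIB CHUNK_KIB  (hypothetical helper)
# Approximate extra I/O when a single write lands in a freshly provisioned
# chunk with pool zeroing enabled (thin_pool_zero = 1 / -Zy).
# ASSUMPTION: the whole chunk is zeroed unless the write covers all of it.
zeroing_cost() {
  awk -v w="$1" -v c="$2" 'BEGIN {
    zero = (w + 0 >= c + 0) ? 0 : c    # a full-chunk write skips zeroing
    printf "%d KiB write into a new %d KiB chunk: ~%d KiB zeroed (%.0fx the write)\n", w, c, zero, zero / w
  }'
}

zeroing_cost 4 64      # 64 KiB chunk (Proxmox 6.x installer default per this thread)
zeroing_cost 4 1024    # 1 MiB chunk chosen by lvcreate above
```

A 4 KiB write costs roughly 16x in zeroing with 64 KiB chunks but roughly 256x with 1 MiB chunks, which is what the LVM warning is about. Disabling zeroing (-Zn) removes this cost; the trade-off is that newly provisioned blocks may expose whatever data was previously on the disk.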