My setup is:

So clearly, I have a 1 gbit/s network card

This morning there was a race condition on one of the VMs running LAMP and ClamAV which meant it RAM quickly got depleted, then it's swap file, and from then it went into a no response memory race condition. Impossible to access SSH etc. I had to force stop the VM.

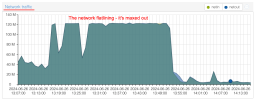

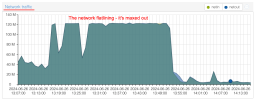

On the host, I see this:

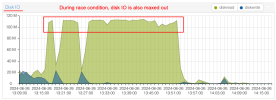

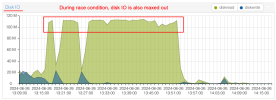

On the VM with the race condition, I see this:

So far everything is normal and I am not complaining. 99.9% of the time this solution works really well.

But here is my question:

Shouldn't the Proxmox VE host network connections to the virtual machines be "unlimited"? Or something more sane, like 10 gbit/s instead of 1 gbit/s? Surely this is "virtual"?

- A Proxmox VE host server with 1000Mb/s physical network card

- An ZFS over iSCSI link to a virtual machine on the same host which is running TrueNAS Core. Virtio.

- Numerous VMs all sharing the same NAS, mostly Debian derivatives. All Virtio

# ethtool eth0Settings for eth0:... Supported link modes: 1000baseT/Full... Advertised link modes: 1000baseT/Full... Speed: 1000Mb/s Duplex: Full Auto-negotiation: onSo clearly, I have a 1 gbit/s network card

This morning there was a race condition on one of the VMs running LAMP and ClamAV which meant it RAM quickly got depleted, then it's swap file, and from then it went into a no response memory race condition. Impossible to access SSH etc. I had to force stop the VM.

On the host, I see this:

On the VM with the race condition, I see this:

So far everything is normal and I am not complaining. 99.9% of the time this solution works really well.

But here is my question:

Shouldn't the Proxmox VE host's network connections to the virtual machines be "unlimited"? Or at least something more sensible, like 10 Gbit/s instead of 1 Gbit/s? Surely this is all "virtual"?
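The `Speed: 1000Mb/s` line from `ethtool` is the link speed the driver reports to the kernel, and Linux exposes the same number (in Mb/s) in sysfs as `/sys/class/net/<iface>/speed`. To compare what the host's physical NIC and a guest's VirtIO NIC each report, here is a minimal sketch. It assumes a Linux system with sysfs mounted at the usual place. The helper names are hypothetical, and a value of -1 or an unreadable file is treated as "unknown", which a virtual NIC may report.

```python
from pathlib import Path
from typing import Optional

# Standard Linux sysfs location for network interfaces (assumption: Linux).
SYSFS_NET = "/sys/class/net"


def read_link_speed_mbps(iface: str, sysfs_root: str = SYSFS_NET) -> Optional[int]:
    """Return the link speed in Mb/s that the kernel reports for `iface`.

    This is the value ethtool prints as "Speed:". Returns None when the
    speed is unknown: the file is missing, reading it fails (e.g. EINVAL
    when the link is down), or it contains -1.
    """
    speed_file = Path(sysfs_root) / iface / "speed"
    try:
        value = int(speed_file.read_text().strip())
    except (OSError, ValueError):
        return None
    return value if value > 0 else None


def mbps_to_megabytes_per_s(mbps: int) -> float:
    """Convert a link rate in megabits/s to megabytes/s (8 bits per byte)."""
    return mbps / 8


def main() -> None:
    root = Path(SYSFS_NET)
    if not root.is_dir():
        print(f"{SYSFS_NET} not found (not a Linux system?)")
        return
    for iface_dir in sorted(root.iterdir()):
        # Skip plain files such as bonding_masters; interfaces are directories.
        if not iface_dir.is_dir():
            continue
        speed = read_link_speed_mbps(iface_dir.name)
        if speed is None:
            print(f"{iface_dir.name}: speed unknown")
        else:
            mb_s = mbps_to_megabytes_per_s(speed)
            print(f"{iface_dir.name}: {speed} Mb/s (about {mb_s:.0f} MB/s)")


if __name__ == "__main__":
    main()
```

On the host, `eth0` should print 1000 Mb/s (about 125 MB/s), matching `ethtool`. Running the same script inside a guest shows what its VirtIO interface reports, which may be a different number or "speed unknown".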