Hi,

I have a four-node Proxmox cluster.

All four nodes see the same eight NetApp NFS datastores, and my VMs live on those external datastores.

I am currently migrating VMs from ESXi into that Proxmox cluster and onto those datastores.

I am aware that the config file of the VM lies locally on the node, and the disk file on the external datastore.

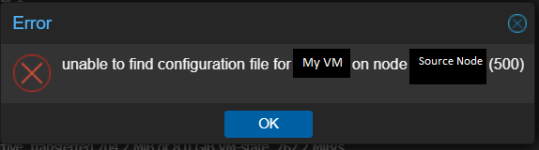

Now, when migrating those imported VMs within my Proxmox cluster, I get the following error each time:

"unable to find configuration file for VM 122 on node 'proxmox06' (500)" (curiously, proxmox06 is the source host)

This error doesn’t occur with VMs that were created in Proxmox VE itself, only with the ones I imported from an ESXi host. I imported them via the Proxmox Import Wizard.

What is the problem? The VM works fine after the move, but I am afraid this will cause problems in the future. The error is also hindering productivity.

Right now I am still on Proxmox 8.

Thanks in advance for any input!
