I'm trying to configure some NFS storage for use by PVE and I've run into an issue that is likely self-inflicted. Basically, NFS and I don't get along: every time I try to configure it, I screw it up the first five tries. So, as usual, I messed up the NFS config, and while troubleshooting it I attached and deleted the NFS share from the PVE storage a few times.

I don't know if I went too fast or what, but it ended up orphaning a few directories that were blocking re-adding the share. I've fixed that thanks to a couple of helpful threads I was able to find, but the current issue blocking me has proved very difficult to search for. Either I can't find the right search terms or I've created a very unusual problem.

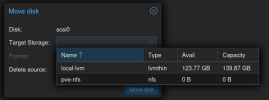

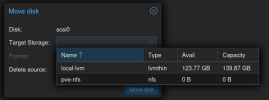

At this point I've connected the NFS storage to Proxmox, and PVE created the underlying directories (vzdump, etc.). I can mount the NFS share on my local machine and create files fine as well, so I think the NFS side is fine at this point. But when I attempt to move a VM drive to the share, the share reports 0 available space (see the screenshots above).
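In case it helps anyone answering, here is a minimal sketch of the checks I can run on the PVE node to compare what the kernel reports for the mount with what PVE reports. The storage ID `nfs-share` is a placeholder rather than my real one, the helper name `avail_kib` is made up for illustration, and I'm assuming the share is mounted at the default location under `/mnt/pve/`.

```shell
#!/bin/sh
# Placeholder storage ID (assumption); replace with the ID shown in the PVE GUI.
STORAGE_ID="${STORAGE_ID:-nfs-share}"
# Assumes the default PVE mount location for NFS storages.
MOUNT_POINT="/mnt/pve/${STORAGE_ID}"

# Print the available space in KiB for the filesystem holding the given path.
# df -P gives the fixed POSIX column layout; column 4 is "Available".
avail_kib() {
    df -Pk "$1" | awk 'NR == 2 { print $4 }'
}

# What the kernel reports for the mount, independent of PVE.
if [ -d "$MOUNT_POINT" ]; then
    echo "available KiB on $MOUNT_POINT: $(avail_kib "$MOUNT_POINT")"
else
    echo "$MOUNT_POINT does not exist on this host"
fi

# What PVE itself reports (these only exist on a Proxmox node).
if command -v pvesm >/dev/null 2>&1; then
    pvesm status
    cat /etc/pve/storage.cfg
fi
```

If `df` shows free space on the mount but `pvesm status` does not, that would at least narrow the problem down to the PVE side rather than NFS itself.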

I've been using Linux on servers for over 20 years, though I'm not in IT, so not professionally, and it's been my daily driver for the last 5 or 6, but I just started using Proxmox. I'm not familiar with the layout or how the virtualization overlay system works, or even where things are logged, compared to the base Debian I'm used to.

Can anyone point me in the direction of where to look for the problem here?
