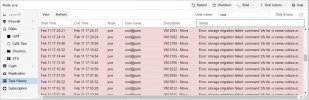

Error when moving VM disks

- Thread starter admjral3

It is possible that the storage was under high load the first time, causing the `zfs list` command to run into a timeout.

I had limited the ZFS memory to 8 GB, so that is probably why this error happened.
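To see whether the storage is busy enough for `zfs list` to stall, you could time the command yourself. The following Python sketch is only an illustration: `time_command` is a hypothetical helper, and the 10-second limit is an assumed value, not the timeout Proxmox VE uses internally.

```python
import shutil
import subprocess
import time
from typing import Optional, Sequence


def time_command(cmd: Sequence[str], timeout_s: float) -> Optional[float]:
    """Run cmd and return how long it took in seconds,
    or None if it was still running after timeout_s seconds."""
    start = time.monotonic()
    try:
        # Output is discarded; only the duration matters here.
        subprocess.run(
            list(cmd),
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            timeout=timeout_s,
            check=False,
        )
    except subprocess.TimeoutExpired:
        # subprocess.run kills the child before re-raising the timeout.
        return None
    return time.monotonic() - start


if __name__ == "__main__":
    if shutil.which("zfs") is None:
        print("zfs command not found on this system")
    else:
        # 10 s is an assumed threshold, not a value taken from Proxmox VE.
        elapsed = time_command(["zfs", "list"], timeout_s=10.0)
        if elapsed is None:
            print("zfs list did not finish within 10 s")
        else:
            print(f"zfs list took {elapsed:.2f} s")
```

Running it once while a disk move is in progress and once on an idle system gives a rough idea of how much the move slows down ZFS metadata queries.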

Possible, but it could also be that ZFS was busy with something else. What kind of disks do you use for the pool?

I removed the ZFS memory usage limit and everything worked fine, but even though I'm not running any VMs, RAM usage is at 32 GB of the 125 GB I have.

By default, ZFS will use up to 50% of the available RAM if nothing else needs it, and it uses that memory as a cache. Ideally, in normal operation (no updates or other large changes), it can answer almost all read requests from the cache, which is much faster than reading from disk.
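For reference, here is a minimal Python sketch of the arithmetic involved, assuming the usual approach of capping the ARC with an `options zfs zfs_arc_max=<bytes>` line in `/etc/modprobe.d/zfs.conf`. The helper names are hypothetical, and the 50% figure is the default described above; other OpenZFS versions may use a different default.

```python
import os
import re

GIB = 1024 ** 3


def mem_total_bytes(meminfo_text: str) -> int:
    """Return MemTotal in bytes from /proc/meminfo-style text (reported in kB)."""
    match = re.search(r"^MemTotal:\s+(\d+)\s+kB", meminfo_text, re.MULTILINE)
    if match is None:
        raise ValueError("MemTotal line not found")
    return int(match.group(1)) * 1024


def default_arc_max_bytes(total_bytes: int) -> int:
    """Assumed default ARC ceiling on Linux: half of physical RAM."""
    return total_bytes // 2


def arc_max_modprobe_line(limit_gib: int) -> str:
    """Line for /etc/modprobe.d/zfs.conf capping the ARC at limit_gib GiB."""
    return f"options zfs zfs_arc_max={limit_gib * GIB}"


if __name__ == "__main__":
    if os.path.exists("/proc/meminfo"):
        with open("/proc/meminfo") as f:
            total = mem_total_bytes(f.read())
        print(f"RAM: {total / GIB:.1f} GiB, "
              f"default ARC max (50%): {default_arc_max_bytes(total) / GIB:.1f} GiB")
    # The 8 GiB limit mentioned earlier in this thread:
    print(arc_max_modprobe_line(8))
```

On the 125 GB host from this thread, half of RAM is roughly 62 GB, so 32 GB of ARC is well below the default ceiling. An 8 GiB cap corresponds to `zfs_arc_max=8589934592`. If the root file system is on ZFS, the initramfs also needs to be refreshed (`update-initramfs -u`) before rebooting; the value can also be applied at runtime by writing the byte count to `/sys/module/zfs/parameters/zfs_arc_max`.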

If you set up some performance monitoring and check the ARC stats (the ARC is the ZFS cache), you can see what your ARC hit ratio is. On a smaller two-node cluster that I run personally (both nodes have 128 GB of RAM), the ARC hit rate is usually above 99%, except when backups are running, updates are being installed, and so on. The ARC size usually varies between 30 and 45 GB.
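The hit ratio mentioned above can be computed from the ARC counters in `/proc/spl/kstat/zfs/arcstats`. This Python sketch parses that file's `name type data` layout and divides `hits` by `hits + misses`; the function names are hypothetical. The `arc_summary` and `arcstat` tools that ship with the ZFS utilities report the same figures without any code.

```python
import os
from typing import Dict


def parse_arcstats(text: str) -> Dict[str, int]:
    """Parse /proc/spl/kstat/zfs/arcstats text into {counter name: value}.

    The first line is a kstat header and the second is the
    'name type data' column header; data lines have three fields.
    """
    stats: Dict[str, int] = {}
    for line in text.splitlines()[2:]:
        fields = line.split()
        if len(fields) != 3:
            continue
        try:
            stats[fields[0]] = int(fields[2])
        except ValueError:
            continue  # skip anything that is not an integer counter
    return stats


def arc_hit_ratio(stats: Dict[str, int]) -> float:
    """Fraction of ARC lookups that were hits: hits / (hits + misses)."""
    total = stats["hits"] + stats["misses"]
    return stats["hits"] / total if total else 0.0


if __name__ == "__main__":
    path = "/proc/spl/kstat/zfs/arcstats"
    if os.path.exists(path):
        with open(path) as f:
            stats = parse_arcstats(f.read())
        print(f"ARC hit ratio since module load: {arc_hit_ratio(stats):.2%}")
        print(f"ARC size: {stats['size'] / 1024 ** 3:.1f} GiB")
```

These counters are cumulative since the ZFS module was loaded, so the result is a long-term average. To see the ratio for a specific window (for example, while a backup runs), read the file twice and compute the ratio from the differences between the two samples.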

I use NVMe SSDs and a 10 TB HDD, and I was moving VM disks from the SSDs to the HDD. So is the ARC the cause of this error?

I work in MMO, so I need a lot of VMs with few resources each. The server has a 2680 v4 CPU, 128 GB of RAM, 3 TB of NVMe storage and a 10 TB 3.5" HDD.

```
root@pve:~# zpool status
  pool: HDD
 state: ONLINE
  scan: scrub repaired 0B in 0 days 00:56:37 with 0 errors on Sun Feb 14 01:20:38 2021
config:

        NAME                                        STATE     READ WRITE CKSUM
        HDD                                         ONLINE       0     0     0
          ata-WDC_WUS721010ALE6L4_VCGLVHUN          ONLINE       0     0     0

errors: No known data errors

  pool: fastvms
 state: ONLINE
  scan: scrub repaired 0B in 0 days 00:03:16 with 0 errors on Sun Feb 14 00:27:18 2021
config:

        NAME                                        STATE     READ WRITE CKSUM
        fastvms                                     ONLINE       0     0     0
          nvme-WDC_WDS100T2B0C-00PXH0_20207E444202  ONLINE       0     0     0

errors: No known data errors

  pool: fastvms2
 state: ONLINE
  scan: scrub repaired 0B in 0 days 00:03:31 with 0 errors on Sun Feb 14 00:27:34 2021
config:

        NAME                                        STATE     READ WRITE CKSUM
        fastvms2                                    ONLINE       0     0     0
          nvme-Lexar_1TB_SSD_K29361R000564          ONLINE       0     0     0

errors: No known data errors

  pool: fastvms3
 state: ONLINE
  scan: scrub repaired 0B in 0 days 00:06:53 with 0 errors on Sun Feb 14 00:30:57 2021
config:

        NAME                                        STATE     READ WRITE CKSUM
        fastvms3                                    ONLINE       0     0     0
          nvme-CT1000P1SSD8_2025292369BB            ONLINE       0     0     0

errors: No known data errors
```

Thanks for the info. It would help readability if you could place the command line output into [code][/code] tags.

From what I can see, you have only a single disk per pool? Are you aware that in this case there is no redundancy, and if a disk fails, that pool and the data on it will be lost?

I have only been running this server for one month, and I plan to build a Proxmox Backup Server for the VMs. I don't use this server for storage; I just need many, many VMs, and all the important data used in the VMs is saved to a NAS, so I'm not worried about a disk failing.

Also, I want to move several disks at the same time but don't know how; right now I move the VM disks to the other storage one by one.
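Proxmox VE's `qm move_disk <vmid> <disk> <storage>` moves one disk per call, so moving several disks means issuing several calls. Below is a hedged Python sketch that builds those commands and runs them with a small concurrency limit. The VM IDs, disk names (`scsi0`, `virtio0`) and the storage ID `HDD` are hypothetical and must be replaced with the real values from the VM configs and `pvesm status`; newer Proxmox VE releases also offer the same operation as `qm disk move`.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence, Tuple


def build_move_commands(disks: Sequence[Tuple[int, str]],
                        target_storage: str) -> List[List[str]]:
    """One `qm move_disk <vmid> <disk> <storage>` command per (vmid, disk) pair."""
    return [["qm", "move_disk", str(vmid), disk, target_storage]
            for vmid, disk in disks]


def run_moves(commands: Sequence[Sequence[str]], max_parallel: int = 2) -> List[int]:
    """Run the commands with at most max_parallel at once; return exit codes in order."""
    def run(cmd: Sequence[str]) -> int:
        return subprocess.run(list(cmd), check=False).returncode

    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        return list(pool.map(run, commands))


if __name__ == "__main__":
    # Hypothetical VM IDs, disk names and storage ID; dry run only (prints, does not execute).
    for cmd in build_move_commands([(101, "scsi0"), (102, "scsi0")], "HDD"):
        print(" ".join(cmd))
```

Since all the moves in this thread target a single 10 TB HDD, running them in parallel multiplies the load on that disk, which is exactly the kind of load suspected of causing the `zfs list` timeout earlier. Keeping `max_parallel` at 1 or 2 is the cautious choice.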
