Hello,

I have a PVE (Proxmox Virtual Environment) server that backs up its virtual machines to a PBS (Proxmox Backup Server).

The datastore on this PBS server had reached 100% disk usage. We cleaned up and purged the datastore to free enough space for new backups. However, every new backup attempt now fails with an error, so the virtual machines can no longer be backed up.

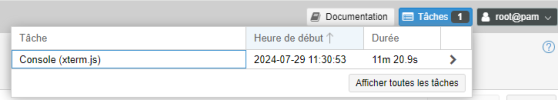

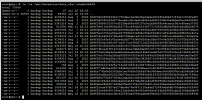

Here is the error that occurs during the backup attempt:

INFO: starting new backup job: vzdump 102 --notes-template '{{guestname}}' --all 0 --storage test --mailnotification always --node bichat20 --mode snapshot

INFO: Starting Backup of VM 102 (qemu)

INFO: Backup started at 2024-07-29 11:01:23

INFO: status = running

INFO: VM Name: uptime-kuma

INFO: include disk 'scsi0' 'local:102/vm-102-disk-0.qcow2' 32G

INFO: backup mode: snapshot

INFO: ionice priority: 7

INFO: creating Proxmox Backup Server archive 'vm/102/2024-07-29T09:01:23Z'

ERROR: VM 102 qmp command 'backup' failed - backup register image failed: command error: inserting chunk on store 'data_zfs' failed for bb9f8df61474d25e71fa00722318cd387396ca1736605e1248821cc0de3d3af8 - mkstemp "/mnt/datastore/data_zfs/.chunks/bb9f/bb9f8df61474d25e71fa00722318cd387396ca1736605e1248821cc0de3d3af8.tmp_XXXXXX" failed: EACCES: Permission denied

INFO: aborting backup job

INFO: resuming VM again

ERROR: Backup of VM 102 failed - VM 102 qmp command 'backup' failed - backup register image failed: command error: inserting chunk on store 'data_zfs' failed for bb9f8df61474d25e71fa00722318cd387396ca1736605e1248821cc0de3d3af8 - mkstemp "/mnt/datastore/data_zfs/.chunks/bb9f/bb9f8df61474d25e71fa00722318cd387396ca1736605e1248821cc0de3d3af8.tmp_XXXXXX" failed: EACCES: Permission denied

INFO: Failed at 2024-07-29 11:01:23

INFO: Backup job finished with errors

TASK ERROR: job errors
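Since the failure is an `EACCES` from `mkstemp` inside the chunk store, one thing that can be checked is who owns the directory named in the error and whether it is writable. The following is only a minimal diagnostic sketch, not a fix: the helper names `chunk_dir_from_error` and `describe` are made up for illustration, and it assumes the script is run on the PBS host as the account the backup service uses (commonly the `backup` user, though that is an assumption about this setup).

```python
import os
import re
from pathlib import Path

# Error text copied from the task log above (only the mkstemp part).
ERROR_LINE = (
    'mkstemp "/mnt/datastore/data_zfs/.chunks/bb9f/'
    'bb9f8df61474d25e71fa00722318cd387396ca1736605e1248821cc0de3d3af8.tmp_XXXXXX" '
    'failed: EACCES: Permission denied'
)


def chunk_dir_from_error(line: str) -> Path:
    """Return the directory in which mkstemp tried to create its temp file."""
    match = re.search(r'mkstemp "([^"]+)" failed', line)
    if match is None:
        raise ValueError("no mkstemp path found in line")
    return Path(match.group(1)).parent


def describe(path: Path) -> str:
    """Summarise owner, group, mode and writability for the current user."""
    try:
        st = path.stat()
    except OSError as exc:
        return f"{path}: cannot stat ({exc.strerror})"
    # Creating a file in a directory needs both write and search permission.
    writable = os.access(path, os.W_OK | os.X_OK)
    mode = oct(st.st_mode & 0o7777)
    return f"{path}: uid={st.st_uid} gid={st.st_gid} mode={mode} writable={writable}"


if __name__ == "__main__":
    chunk_dir = chunk_dir_from_error(ERROR_LINE)
    # Report on the chunk directory and every parent up to the filesystem root.
    for p in [chunk_dir, *chunk_dir.parents]:
        print(describe(p))
```

Any line reporting `writable=False`, or an unexpected owner, would point at the directory whose permissions changed during the cleanup.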

I can't find any solutions on forums and other support channels. Thank you in advance for any help you can provide.

Axel.
