Hi support team,

We are running a single Proxmox node in standalone mode. Backups are scheduled weekly on the weekend, and everything worked as expected last weekend.

We have not changed anything in the configuration.

Today we noticed backup errors in the task list (screenshots and the task log are below).

We also tried to back up VM 104 manually, but it fails with the same error.

For other VMs that had the same "no such volume" error, a manual backup works. So some VMs can be backed up manually and some cannot.

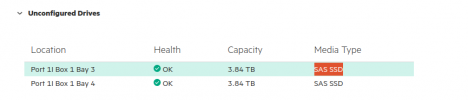

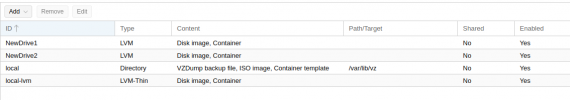

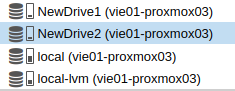

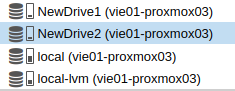

We have 2 additional drives, but the issue is not limited to them; we also get the error on local-lvm. For example, 10 VMs failed to back up: some of them are on local-lvm and some are on the other drives, NewDrive1 and 2. When I retry manually, some backups succeed and some fail, both on local-lvm and on the other 2 drives.

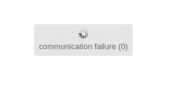

When we try to view the VM disks in the web interface, the disk list does not load and shows "Connection timed out 596".

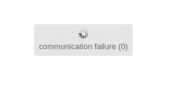

Sometimes we also get the error "connection timed out (0)".


Task log from the failed backup of VM 104:

Code:

INFO: starting new backup job: vzdump 104 --dumpdir /home/mgms-admin --compress zstd --mode snapshot

INFO: Starting Backup of VM 104 (qemu)

INFO: Backup started at 2022-04-19 11:41:22

INFO: status = running

INFO: VM Name: vm-backup-xxxxxxx

INFO: include disk 'scsi0' 'local-lvm:vm-104-disk-0' 70G

ERROR: Backup of VM 104 failed - no such volume 'local-lvm:vm-104-disk-0'

INFO: Failed at 2022-04-19 11:41:41

INFO: Backup job finished with errors

TASK ERROR: job errors
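Since about 10 VMs failed across several storages, it may help to summarise the "no such volume" errors per storage. The following is only a minimal sketch in Python: it assumes the task logs have been saved as plain text, and the function name `missing_volumes_by_storage` and the sample text are made up for illustration. It is not part of Proxmox.

```python
import re
from collections import defaultdict

# Matches vzdump error lines such as:
#   ERROR: Backup of VM 104 failed - no such volume 'local-lvm:vm-104-disk-0'
FAILED_RE = re.compile(
    r"ERROR: Backup of VM (?P<vmid>\d+) failed - no such volume "
    r"'(?P<storage>[^:']+):(?P<volume>[^']+)'"
)


def missing_volumes_by_storage(log_text):
    """Group (vmid, volume) pairs from 'no such volume' errors by storage ID."""
    grouped = defaultdict(list)
    for line in log_text.splitlines():
        match = FAILED_RE.search(line)
        if match:
            grouped[match["storage"]].append((int(match["vmid"]), match["volume"]))
    return dict(grouped)


# Sample taken from the task log above (hypothetical helper input).
sample = """\
INFO: include disk 'scsi0' 'local-lvm:vm-104-disk-0' 70G
ERROR: Backup of VM 104 failed - no such volume 'local-lvm:vm-104-disk-0'
INFO: Failed at 2022-04-19 11:41:41
"""

print(missing_volumes_by_storage(sample))
```

Running this over all failed task logs would show whether the errors cluster on one storage or are spread across local-lvm and the other drives.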


Code:

Apr 19 12:09:17 vie01-proxmox03 icinga2[1358]: [2022-04-19 12:09:17 +0200] information/WorkQueue: #18 (JsonRpcConnection, #9) items: 0, rate: 0/s (0/min 0/5min 0/15min);

Apr 19 12:09:17 vie01-proxmox03 icinga2[1358]: [2022-04-19 12:09:17 +0200] information/WorkQueue: #12 (JsonRpcConnection, #3) items: 0, rate: 0/s (0/min 0/5min 0/15min);

Apr 19 12:09:17 vie01-proxmox03 icinga2[1358]: [2022-04-19 12:09:17 +0200] information/WorkQueue: #15 (JsonRpcConnection, #6) items: 0, rate: 0/s (0/min 0/5min 0/15min);

Apr 19 12:09:17 vie01-proxmox03 icinga2[1358]: [2022-04-19 12:09:17 +0200] information/WorkQueue: #23 (JsonRpcConnection, #14) items: 0, rate: 0/s (0/min 0/5min 0/15min);

Apr 19 12:09:27 vie01-proxmox03 icinga2[1358]: [2022-04-19 12:09:27 +0200] information/WorkQueue: #13 (JsonRpcConnection, #4) items: 0, rate: 0/s (0/min 0/5min 0/15min);

Apr 19 12:09:27 vie01-proxmox03 icinga2[1358]: [2022-04-19 12:09:27 +0200] information/WorkQueue: #14 (JsonRpcConnection, #5) items: 0, rate: 0/s (0/min 0/5min 0/15min);

Apr 19 12:09:27 vie01-proxmox03 icinga2[1358]: [2022-04-19 12:09:27 +0200] information/WorkQueue: #21 (JsonRpcConnection, #12) items: 0, rate: 0/s (0/min 0/5min 0/15min);

Apr 19 12:09:27 vie01-proxmox03 icinga2[1358]: [2022-04-19 12:09:27 +0200] information/RemoteCheckQueue: items: 0, rate: 0/s (6/min 30/5min 90/15min);

Apr 19 12:09:37 vie01-proxmox03 icinga2[1358]: [2022-04-19 12:09:37 +0200] information/WorkQueue: #27 (JsonRpcConnection, #18) items: 0, rate: 0/s (0/min 0/5min 0/15min);

Apr 19 12:09:37 vie01-proxmox03 icinga2[1358]: [2022-04-19 12:09:37 +0200] information/WorkQueue: #26 (JsonRpcConnection, #17) items: 0, rate: 0/s (0/min 0/5min 0/15min);

Apr 19 12:09:37 vie01-proxmox03 icinga2[1358]: [2022-04-19 12:09:37 +0200] information/WorkQueue: #11 (JsonRpcConnection, #2) items: 0, rate: 0/s (0/min 0/5min 0/15min);

Apr 19 12:09:37 vie01-proxmox03 icinga2[1358]: [2022-04-19 12:09:37 +0200] information/WorkQueue: #24 (JsonRpcConnection, #15) items: 0, rate: 0/s (0/min 0/5min 0/15min);

Apr 19 12:09:37 vie01-proxmox03 icinga2[1358]: [2022-04-19 12:09:37 +0200] information/WorkQueue: #20 (JsonRpcConnection, #11) items: 0, rate: 0/s (0/min 0/5min 0/15min);

Apr 19 12:09:37 vie01-proxmox03 icinga2[1358]: [2022-04-19 12:09:37 +0200] information/WorkQueue: #28 (JsonRpcConnection, #19) items: 0, rate: 0/s (0/min 0/5min 0/15min);

Apr 19 12:09:37 vie01-proxmox03 icinga2[1358]: [2022-04-19 12:09:37 +0200] information/WorkQueue: #32 (JsonRpcConnection, #23) items: 0, rate: 0/s (0/min 0/5min 0/15min);

Apr 19 12:09:37 vie01-proxmox03 icinga2[1358]: [2022-04-19 12:09:37 +0200] information/WorkQueue: #9 (JsonRpcConnection, #0) items: 0, rate: 0/s (0/min 0/5min 0/15min);

Apr 19 12:09:37 vie01-proxmox03 icinga2[1358]: [2022-04-19 12:09:37 +0200] information/WorkQueue: #19 (JsonRpcConnection, #10) items: 0, rate: 0/s (0/min 0/5min 0/15min);

Apr 19 12:09:37 vie01-proxmox03 icinga2[1358]: [2022-04-19 12:09:37 +0200] information/WorkQueue: #10 (JsonRpcConnection, #1) items: 0, rate: 0/s (0/min 0/5min 0/15min);

Apr 19 12:09:47 vie01-proxmox03 icinga2[1358]: [2022-04-19 12:09:47 +0200] information/WorkQueue: #29 (JsonRpcConnection, #20) items: 0, rate: 0/s (0/min 0/5min 0/15min);

Apr 19 12:09:47 vie01-proxmox03 icinga2[1358]: [2022-04-19 12:09:47 +0200] information/WorkQueue: #22 (JsonRpcConnection, #13) items: 0, rate: 0/s (0/min 0/5min 0/15min);

Apr 19 12:09:47 vie01-proxmox03 icinga2[1358]: [2022-04-19 12:09:47 +0200] information/WorkQueue: #30 (JsonRpcConnection, #21) items: 0, rate: 0/s (0/min 0/5min 0/15min);

Apr 19 12:09:47 vie01-proxmox03 icinga2[1358]: [2022-04-19 12:09:47 +0200] information/WorkQueue: #17 (JsonRpcConnection, #8) items: 0, rate: 0/s (0/min 0/5min 0/15min);

Apr 19 12:09:47 vie01-proxmox03 icinga2[1358]: [2022-04-19 12:09:47 +0200] information/WorkQueue: #16 (JsonRpcConnection, #7) items: 0, rate: 0/s (0/min 0/5min 0/15min);

Apr 19 12:09:47 vie01-proxmox03 icinga2[1358]: [2022-04-19 12:09:47 +0200] information/WorkQueue: #31 (JsonRpcConnection, #22) items: 0, rate: 0/s (0/min 0/5min 0/15min);

Apr 19 12:09:47 vie01-proxmox03 icinga2[1358]: [2022-04-19 12:09:47 +0200] information/WorkQueue: #25 (JsonRpcConnection, #16) items: 0, rate: 0.0833333/s (5/min 21/5min 63/15min);

Apr 19 12:09:59 vie01-proxmox03 pvestatd[1972]: status update time (36.950 seconds)

Apr 19 12:10:00 vie01-proxmox03 systemd[1]: Starting Proxmox VE replication runner...

Apr 19 12:10:00 vie01-proxmox03 systemd[1]: pvesr.service: Succeeded.

Apr 19 12:10:00 vie01-proxmox03 systemd[1]: Started Proxmox VE replication runner.

Apr 19 12:10:16 vie01-proxmox03 pvestatd[1972]: status update time (6.624 seconds)

Apr 19 12:10:48 vie01-proxmox03 pvestatd[1972]: status update time (18.763 seconds)

Apr 19 12:11:00 vie01-proxmox03 systemd[1]: Starting Proxmox VE replication runner...

Apr 19 12:11:00 vie01-proxmox03 systemd[1]: pvesr.service: Succeeded.

Apr 19 12:11:00 vie01-proxmox03 systemd[1]: Started Proxmox VE replication runner.

Apr 19 12:11:17 vie01-proxmox03 icinga2[1358]: [2022-04-19 12:11:17 +0200] information/RemoteCheckQueue: items: 0, rate: 0/s (6/min 30/5min 90/15min);

Apr 19 12:11:19 vie01-proxmox03 pvestatd[1972]: status update time (30.911 seconds)

Apr 19 12:11:38 vie01-proxmox03 pvestatd[1972]: status update time (18.792 seconds)

Apr 19 12:11:47 vie01-proxmox03 icinga2[1358]: [2022-04-19 12:11:47 +0200] information/RemoteCheckQueue: items: 0, rate: 0/s (6/min 30/5min 90/15min);

Apr 19 12:11:57 vie01-proxmox03 icinga2[1358]: [2022-04-19 12:11:57 +0200] information/RemoteCheckQueue: items: 0, rate: 0/s (6/min 30/5min 90/15min);

Apr 19 12:12:00 vie01-proxmox03 systemd[1]: Starting Proxmox VE replication runner...

Apr 19 12:12:00 vie01-proxmox03 systemd[1]: pvesr.service: Succeeded.

Apr 19 12:12:00 vie01-proxmox03 systemd[1]: Started Proxmox VE replication runner.

Apr 19 12:12:19 vie01-proxmox03 pvestatd[1972]: status update time (30.911 seconds)

Apr 19 12:12:37 vie01-proxmox03 pvedaemon[1775863]: <root@pam> successful auth for user 'xxxxx'

Apr 19 12:12:44 vie01-proxmox03 pvestatd[1972]: status update time (24.844 seconds)
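The pvestatd "status update time" entries in the syslog above stand out among the icinga2 noise. A rough Python sketch for pulling them out is shown here. The helper name `status_update_times` is hypothetical, and reading these long update times as a sign of slow or hanging storage is an assumption on our side, not something the log confirms.

```python
import re

# Matches syslog lines such as:
#   ... pvestatd[1972]: status update time (36.950 seconds)
STATUS_RE = re.compile(
    r"pvestatd\[\d+\]: status update time \((?P<secs>\d+(?:\.\d+)?) seconds\)"
)


def status_update_times(syslog_text):
    """Return all pvestatd status update durations (in seconds) found in the text."""
    return [float(m["secs"]) for m in STATUS_RE.finditer(syslog_text)]


# Three lines copied from the syslog above.
sample = """\
Apr 19 12:09:59 vie01-proxmox03 pvestatd[1972]: status update time (36.950 seconds)
Apr 19 12:10:16 vie01-proxmox03 pvestatd[1972]: status update time (6.624 seconds)
Apr 19 12:10:48 vie01-proxmox03 pvestatd[1972]: status update time (18.763 seconds)
"""

times = status_update_times(sample)
print(f"samples={len(times)} max={max(times):.3f}s")
```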

Code:

mgms-admin@vie01-proxmox03:~$ pveversion -v

perl: warning: Setting locale failed.

perl: warning: Please check that your locale settings:

LANGUAGE = (unset),

LC_ALL = (unset),

LC_ADDRESS = "de_AT.UTF-8",

LC_NAME = "de_AT.UTF-8",

LC_MONETARY = "de_AT.UTF-8",

LC_PAPER = "de_AT.UTF-8",

LC_IDENTIFICATION = "de_AT.UTF-8",

LC_TELEPHONE = "de_AT.UTF-8",

LC_MEASUREMENT = "de_AT.UTF-8",

LC_TIME = "de_AT.UTF-8",

LC_NUMERIC = "de_AT.UTF-8",

LANG = "en_US.UTF-8"

are supported and installed on your system.

perl: warning: Falling back to a fallback locale ("en_US.UTF-8").

proxmox-ve: 6.4-1 (running kernel: 5.4.106-1-pve)

pve-manager: 6.4-5 (running version: 6.4-5/6c7bf5de)

pve-kernel-5.4: 6.4-1

pve-kernel-helper: 6.4-1

pve-kernel-5.4.106-1-pve: 5.4.106-1

pve-kernel-5.4.73-1-pve: 5.4.73-1

ceph: 15.2.11-pve1

ceph-fuse: 15.2.11-pve1

corosync: 3.1.2-pve1

criu: 3.11-3

glusterfs-client: 5.5-3

ifupdown: 0.8.35+pve1

ksm-control-daemon: 1.3-1

libjs-extjs: 6.0.1-10

libknet1: 1.20-pve1

libproxmox-acme-perl: 1.0.8

libproxmox-backup-qemu0: 1.0.3-1

libpve-access-control: 6.4-1

libpve-apiclient-perl: 3.1-3

libpve-common-perl: 6.4-2

libpve-guest-common-perl: 3.1-5

libpve-http-server-perl: 3.2-1

libpve-storage-perl: 6.4-1

libqb0: 1.0.5-1

libspice-server1: 0.14.2-4~pve6+1

lvm2: 2.03.02-pve4

lxc-pve: 4.0.6-2

lxcfs: 4.0.6-pve1

novnc-pve: 1.1.0-1

proxmox-backup-client: 1.1.5-1

proxmox-mini-journalreader: 1.1-1

proxmox-widget-toolkit: 2.5-3

pve-cluster: 6.4-1

pve-container: 3.3-5

pve-docs: 6.4-1

pve-edk2-firmware: 2.20200531-1

pve-firewall: 4.1-3

pve-firmware: 3.2-2

pve-ha-manager: 3.1-1

pve-i18n: 2.3-1

pve-qemu-kvm: 5.2.0-6

pve-xtermjs: 4.7.0-3

qemu-server: 6.4-2

smartmontools: 7.2-pve2

spiceterm: 3.1-1

vncterm: 1.6-2

zfsutils-linux: 2.0.4-pve1