Hi,

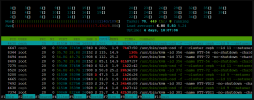

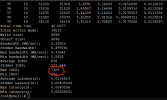

We are running a 3-node Proxmox Ceph cluster and getting really low IOPS from the VMs: around 4,000 to 5,000.

Host 1:

Server Model: Dell R730xd

Ceph network: 10Gbps x2 (LACP configured)

SSD: Kingston DC500M 1.92TB x3

Storage Controller: PERC H730mini

RAM: 192GB

CPU: Intel(R) Xeon(R) CPU E5-2660 v3 @ 2.60GHz x2

Host 2:

Server Model: Dell R730xd

Ceph network: 10Gbps x2 (LACP configured)

SSD: Kingston DC500M 1.92TB x3

Storage Controller: HBA330mini

RAM: 192GB

CPU: Intel(R) Xeon(R) CPU E5-2650 v3 @ 2.30GHz x2

Host 3:

Server Model: Dell R730xd

Ceph network: 10Gbps x2 (LACP configured)

SSD: Kingston DC500M 1.92TB x2

Storage Controller: HBA330mini

RAM: 192GB

CPU: Intel(R) Xeon(R) CPU E5-2650 v3 @ 2.30GHz x3

We never see more than 4 Gbps of traffic on the 20 Gbps LACP link.
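To put the IOPS and link-traffic figures side by side, here is a rough conversion sketch. The block sizes are assumptions for illustration only (the block size of our workload is not stated in this post), and the calculation counts payload only, ignoring Ceph replication traffic and protocol overhead.

```python
def iops_to_gbps(iops: float, block_size_bytes: int) -> float:
    """Payload throughput in Gbit/s (decimal) for a given IOPS and block size."""
    return iops * block_size_bytes * 8 / 1e9


def gbps_to_iops(gbps: float, block_size_bytes: int) -> float:
    """IOPS that would be needed to generate the given payload throughput."""
    return gbps * 1e9 / (8 * block_size_bytes)


if __name__ == "__main__":
    # Hypothetical block sizes; replace with the real benchmark block size.
    for block_size in (4096, 65536, 1048576):
        gbps = iops_to_gbps(5000, block_size)
        iops = gbps_to_iops(4, block_size)
        print(f"bs={block_size:>8} B: 5000 IOPS = {gbps:.3f} Gbit/s, "
              f"4 Gbit/s = {iops:.0f} IOPS")
```

If the workload uses small blocks, 5,000 IOPS accounts for only a small fraction of a Gbit/s of payload, so the link itself would not be the limiting factor in that case.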

Could anyone please help us figure out what is wrong here? Why are we not achieving at least 20K IOPS?

Please note that we are also running another Ceph pool on HDD drives. We have separated the SSD pool from it using the device-class-based CRUSH rule that Proxmox provides.

Thanks in advance.
