Encrypted backup 3x larger than unencrypted

- Thread starter ilium007



Yes, I'm doing some testing.

To be clear... you are talking about non-encrypted guests that are backed up to a non-encrypted PBS datastore, once with encryption enabled and once without for the PBS backup?

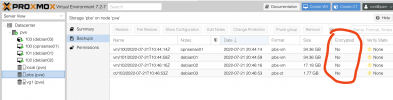

I have 4 VMs that I had been backing up to an unencrypted datastore. The total backup size for the 4 VMs was 8.65GB.

I then re-created the datastore as an encrypted datastore and re-ran the backups and it totalled 21.53GB

I don't know what you mean by "non-encrypted guests"

1.) I can use encryption inside a guest, so the data would be encrypted no matter how the backups are stored. Here the compression ratio should be bad.

2.) I can encrypt the PVE storage the guests are stored on. Here the compression ratio should be fine, as PVE will back up the unencrypted data after the storage has been unlocked.

3.) I can put my PBS datastore on a LUKS-encrypted ext4 partition or a ZFS encrypted dataset. Then my backups would be encrypted on the PBS machine regardless of whether encryption is enabled for the PVE backup jobs.

4.) I can use the encryption the PVE/PBS GUI offers for the backup jobs. Then the guest data will first be zstd-compressed, then AES-encrypted, then hashed and deduplicated on the PVE side, and then sent to the PBS. As far as I understand, in theory the backups shouldn't get bigger here: compression is done before encryption, and deduplication uses hashes that are calculated after encryption (so PBS can verify backups without needing to know the encryption key), so deduplication should work too.

So it's really a question of how and where you encrypt.
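Points 1 and 4 hinge on the order of operations. A quick way to see why compressing before encrypting matters is to compress highly repetitive data and random data and compare the results. This is only a sketch under stated assumptions: output from /dev/urandom stands in for ciphertext (good ciphertext is statistically indistinguishable from random bytes), and gzip stands in for the zstd compression mentioned above simply because it is installed almost everywhere.

```shell
# Sketch: why compressing before encrypting matters for backup size.
# Assumption: random bytes from /dev/urandom stand in for ciphertext.
# Assumption: gzip stands in for zstd; the effect is the same in principle.

compressed_size() {
  # Print the gzip-compressed size of stdin in bytes (tr strips any padding)
  gzip -c | wc -c | tr -d ' '
}

# 1 MiB of highly repetitive data, like zeroed free space in a guest disk
plain=$(head -c 1048576 /dev/zero | compressed_size)

# 1 MiB of random bytes, standing in for data that was encrypted first
cipher=$(head -c 1048576 /dev/urandom | compressed_size)

# Encryption itself barely changes the size, so these approximate what
# would end up stored for each order of operations.
echo "compressed before encryption: ${plain} bytes"
echo "compressed after encryption:  ${cipher} bytes"
```

On a typical system the first figure is around a kilobyte, while the second is slightly larger than the 1 MiB input: data that is already encrypted (point 1) gives the compressor nothing to work with.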



Ok - I am using the datastore encryption for the PBS destination backup datastore. I haven't done anything with the guests. They have remained unchanged.

I am testing un-encrypted vs encrypted PBS datastore and the encrypted datastore has resulted in a 3x increase in backup size.


What do you mean by "re-create the datastore"? That you just re-added the PBS datastore on the Proxmox VE side but with an encryption key, or that you did that plus created a completely fresh datastore on the PBS side?

Note that with the former you still have the original unencrypted backups, which you need to deduct from the usage, as encrypted and unencrypted backups naturally cannot share chunks (that would defeat the purpose of encryption), so there is no deduplication between those two groups of backups. The same goes for encryption keys: different keys mean that the same data will result in different chunks once encrypted.
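The point about keys can be illustrated with content-addressed chunk IDs: the same data under the same key always produces the same ID, so it deduplicates, while the same data with no key or a different key produces a different ID. The sketch below is a simplified model, not the construction PBS actually uses: it assumes the ID of an "encrypted" chunk is sha256(data || key), and the keys are hypothetical placeholders.

```shell
# Sketch: why chunks written with different keys (or without a key) never
# deduplicate against each other.
# Assumption: a keyed chunk ID modelled as sha256(data || key). This is a
# simplified illustration, not PBS's exact scheme and not real cryptography.

chunk_id() {
  # $1 = chunk data, $2 = key ("" models an unencrypted backup)
  printf '%s%s' "$1" "$2" | sha256sum | cut -d ' ' -f 1
}

data='identical block from a guest disk'

plain_id=$(chunk_id "$data" "")
key1_id=$(chunk_id "$data" "hypothetical-key-1")
key1_again=$(chunk_id "$data" "hypothetical-key-1")
key2_id=$(chunk_id "$data" "hypothetical-key-2")

echo "no key:        $plain_id"
echo "key 1:         $key1_id"
echo "key 1 (again): $key1_again"
echo "key 2:         $key2_id"
```

Only the two "key 1" lines match, which is why a datastore holding both unencrypted and encrypted backups of the same guests stores that data twice.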

Also, how do you measure the usage size?

du -hd1 /path/to/datastore, or something else?

Ok... I am obviously not explaining this using the correct terms....

I had 4 VMs that I backed up to a non-encrypted datastore on my PBS instance. In the PBS UI Summary screen I could see they consumed 8.65GB.

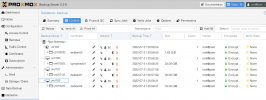

- I deleted the backups and datastore and removed the datastore from PBS.

- I created a new PBS datastore but this time encrypted.

- I ran the same backup for the 4 VMs to the new encrypted datastore.

- In the PBS Summary screen the 4 backups consumed 21.53GB.

How? Can you describe the exact steps taken on both PVE and PBS?

Deleting a datastore via the PBS web interface won't delete the data; it only removes the config entry.

So if you deleted it there in PBS and then created a new one pointing at the old path, the .chunks content-addressable storage and all of its data from before would still be there.
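One way to rule this out before re-adding a datastore is to look for leftover chunk files under the old path. A minimal sketch, assuming the chunk store lives in a .chunks directory under the datastore path (as in the du output later in this thread); has_old_chunks is a hypothetical helper, not a Proxmox tool. A freshly created chunk store presumably already contains its empty prefix directories (that is what the "Chunkstore create" progress log appears to show), so the check looks for files rather than just the directory.

```shell
# Sketch: check whether a datastore path still holds an old chunk store after
# the datastore entry was removed from the PBS configuration.
# has_old_chunks is a hypothetical helper for illustration.

has_old_chunks() {
  # Succeeds (exit 0) if "$1/.chunks" exists and contains at least one file
  [ -d "$1/.chunks" ] || return 1
  # -print -quit stops at the first regular file found (GNU find)
  [ -n "$(find "$1/.chunks" -type f -print -quit 2>/dev/null)" ]
}

# Example, using the datastore path from this thread:
# if has_old_chunks /mnt/datastore/backup; then
#   echo "old chunks still present"
# fi
```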

There's no such thing as creating an encrypted datastore; a PBS datastore always handles both encrypted and unencrypted backups.

Again, did you mean that you added the datastore anew, with encryption selected in the PVE storage add dialogue?


I went to a lot of effort to remove it and documented the steps in case I needed to do it again while experimenting here.

After removing in the UI I went through the following steps:

Code:

systemctl disable mnt-datastore-backup.mount

Removed /etc/systemd/system/multi-user.target.wants/mnt-datastore-backup.mount.

proxmox-backup-manager datastore remove backup

rm /etc/systemd/system/mnt-datastore-backup.mount

umount /dev/sdd1

rm -rf /mnt/datastore/backup

systemctl restart proxmox-backup proxmox-backup-proxy

sgdisk --zap-all /dev/sdd

GPT data structures destroyed! You may now partition the disk using fdisk or

other utilities.

So I am not using the correct terms when I say "encrypted datastore"... I acknowledged this...

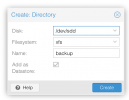

After removing from UI and deleting everything on /dev/sdd (as above) I went into PBS and created the datastore from /dev/sdd:

I then went across to PVE and added the connection to PBS, specified the datastore and specified encryption.

After performing these steps I set up the 4 backups, ran them from scratch, and the resulting size was 21.53GB.

I am happy to erase the disk again and prove what I am saying. Not my first rodeo on *nix. I know how to erase a GPT disk.

Here we go again....

Backups deleted in UI, /dev/sdd erased and removed from PBS:


Code:

❯ ssh proxmox

Warning: Permanently added '192.168.10.11' (ED25519) to the list of known hosts.

Linux pve 5.15.35-3-pve #1 SMP PVE 5.15.35-6 (Fri, 17 Jun 2022 13:42:35 +0200) x86_64

The programs included with the Debian GNU/Linux system are free software;

the exact distribution terms for each program are described in the

individual files in /usr/share/doc/*/copyright.

Debian GNU/Linux comes with ABSOLUTELY NO WARRANTY, to the extent

permitted by applicable law.

Last login: Thu Jul 21 18:26:08 2022 from 192.168.10.189

root@pve:~#

root@pve:~# systemctl disable mnt-datastore-backup.mount

Removed /etc/systemd/system/multi-user.target.wants/mnt-datastore-backup.mount.

root@pve:~#

root@pve:~# proxmox-backup-manager datastore remove backup

root@pve:~#

root@pve:~# rm /etc/systemd/system/mnt-datastore-backup.mount

root@pve:~#

root@pve:~# umount /dev/sdd1

root@pve:~#

root@pve:~# rm -rf /mnt/datastore/backup

root@pve:~#

root@pve:~# systemctl restart proxmox-backup proxmox-backup-proxy

root@pve:~#

root@pve:~# gdisk /dev/sdd

GPT fdisk (gdisk) version 1.0.6

Partition table scan:

MBR: protective

BSD: not present

APM: not present

GPT: present

Found valid GPT with protective MBR; using GPT.

Command (? for help): x

Expert command (? for help): z

About to wipe out GPT on /dev/sdd. Proceed? (Y/N): y

GPT data structures destroyed! You may now partition the disk using fdisk or

other utilities.

Blank out MBR? (Y/N): y

root@pve:~#

root@pve:~# mount

sysfs on /sys type sysfs (rw,nosuid,nodev,noexec,relatime)

proc on /proc type proc (rw,relatime)

udev on /dev type devtmpfs (rw,nosuid,relatime,size=8098680k,nr_inodes=2024670,mode=755,inode64)

devpts on /dev/pts type devpts (rw,nosuid,noexec,relatime,gid=5,mode=620,ptmxmode=000)

tmpfs on /run type tmpfs (rw,nosuid,nodev,noexec,relatime,size=1626612k,mode=755,inode64)

/dev/mapper/pve-root on / type ext4 (rw,relatime,errors=remount-ro)

securityfs on /sys/kernel/security type securityfs (rw,nosuid,nodev,noexec,relatime)

tmpfs on /dev/shm type tmpfs (rw,nosuid,nodev,inode64)

tmpfs on /run/lock type tmpfs (rw,nosuid,nodev,noexec,relatime,size=5120k,inode64)

cgroup2 on /sys/fs/cgroup type cgroup2 (rw,nosuid,nodev,noexec,relatime)

pstore on /sys/fs/pstore type pstore (rw,nosuid,nodev,noexec,relatime)

efivarfs on /sys/firmware/efi/efivars type efivarfs (rw,nosuid,nodev,noexec,relatime)

bpf on /sys/fs/bpf type bpf (rw,nosuid,nodev,noexec,relatime,mode=700)

systemd-1 on /proc/sys/fs/binfmt_misc type autofs (rw,relatime,fd=30,pgrp=1,timeout=0,minproto=5,maxproto=5,direct,pipe_ino=18956)

hugetlbfs on /dev/hugepages type hugetlbfs (rw,relatime,pagesize=2M)

mqueue on /dev/mqueue type mqueue (rw,nosuid,nodev,noexec,relatime)

debugfs on /sys/kernel/debug type debugfs (rw,nosuid,nodev,noexec,relatime)

tracefs on /sys/kernel/tracing type tracefs (rw,nosuid,nodev,noexec,relatime)

fusectl on /sys/fs/fuse/connections type fusectl (rw,nosuid,nodev,noexec,relatime)

configfs on /sys/kernel/config type configfs (rw,nosuid,nodev,noexec,relatime)

sunrpc on /run/rpc_pipefs type rpc_pipefs (rw,relatime)

/dev/sda2 on /boot/efi type vfat (rw,relatime,fmask=0022,dmask=0022,codepage=437,iocharset=iso8859-1,shortname=mixed,errors=remount-ro)

lxcfs on /var/lib/lxcfs type fuse.lxcfs (rw,nosuid,nodev,relatime,user_id=0,group_id=0,allow_other)

/dev/fuse on /etc/pve type fuse (rw,nosuid,nodev,relatime,user_id=0,group_id=0,default_permissions,allow_other)

tmpfs on /run/user/0 type tmpfs (rw,nosuid,nodev,relatime,size=1626608k,nr_inodes=406652,mode=700,inode64)

root@pve:~#

root@pve:~# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 119.2G 0 disk

├─sda1 8:1 0 1007K 0 part

├─sda2 8:2 0 512M 0 part /boot/efi

└─sda3 8:3 0 118.7G 0 part

├─pve-swap 253:4 0 8G 0 lvm [SWAP]

└─pve-root 253:5 0 110.7G 0 lvm /

sdb 8:16 0 1.8T 0 disk

sdc 8:32 0 1.8T 0 disk

sdd 8:48 0 1.8T 0 disk

nvme0n1 259:0 0 238.5G 0 disk

├─vg1-vm--101--disk--0 253:0 0 32G 0 lvm

├─vg1-vm--100--disk--0 253:1 0 32G 0 lvm

└─vg1-vm--102--disk--0 253:2 0 16G 0 lvm

root@pve:~#

root@pve:~# proxmox-backup-manager disk list

┌─────────┬────────────┬─────┬───────────┬───────────────┬────────────────────────┬─────────┬────────┐

│ name │ used │ gpt │ disk-type │ size │ model │ wearout │ status │

╞═════════╪════════════╪═════╪═══════════╪═══════════════╪════════════════════════╪═════════╪════════╡

│ nvme0n1 │ lvm │ 0 │ ssd │ 256060514304 │ KBG40ZNV256G KIOXIA │ 0.00 % │ passed │

├─────────┼────────────┼─────┼───────────┼───────────────┼────────────────────────┼─────────┼────────┤

│ sda │ mounted │ 1 │ ssd │ 128035676160 │ LITEONIT_LCT-128M3S │ - │ passed │

├─────────┼────────────┼─────┼───────────┼───────────────┼────────────────────────┼─────────┼────────┤

│ sdb │ filesystem │ 0 │ hdd │ 2000398934016 │ WDC_WD2002FAEX-007BA0 │ - │ passed │

├─────────┼────────────┼─────┼───────────┼───────────────┼────────────────────────┼─────────┼────────┤

│ sdc │ filesystem │ 0 │ hdd │ 2000398934016 │ WDC_WD2000FYYZ-01UL1B1 │ - │ passed │

├─────────┼────────────┼─────┼───────────┼───────────────┼────────────────────────┼─────────┼────────┤

│ sdd │ unused │ 0 │ hdd │ 2000398933504 │ WDC_WD2002FAEX-007BA0 │ - │ passed │

Do we all agree there are no backups....


Code:

root@pbs:~# du -hs /mnt/datastore/backup/

7.1G /mnt/datastore/backup/

root@pbs:~# du -hd1 /mnt/datastore/backup/

7.1G /mnt/datastore/backup/.chunks

704K /mnt/datastore/backup/vm

48K /mnt/datastore/backup/ct

7.1G /mnt/datastore/backup/

root@pbs:~#

Erased all again (same steps as above)

Code:

2022-07-21T20:52:19+10:00: create datastore 'backup' on disk sdd

2022-07-21T20:52:28+10:00: Chunkstore create: 1%

2022-07-21T20:52:28+10:00: Chunkstore create: 2%

2022-07-21T20:52:28+10:00: Chunkstore create: 3%

...

2022-07-21T20:52:29+10:00: Chunkstore create: 97%

2022-07-21T20:52:29+10:00: Chunkstore create: 98%

2022-07-21T20:52:29+10:00: Chunkstore create: 99%

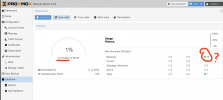

2022-07-21T20:52:29+10:00: TASK OKNew datastore after erase shows 14.01GB again...

Connected PBS to PVE via Datacenter > Storage > Add > Proxmox Backup Server and selected 'Auto-generate a client encryption key'

Ran backup again for the 4 Guests on encrypted PBS storage:

Code:

root@pbs:~# du -hd1 /mnt/datastore/backup/

7.1G /mnt/datastore/backup/.chunks

700K /mnt/datastore/backup/vm

48K /mnt/datastore/backup/ct

7.1G /mnt/datastore/backup/

root@pbs:~#

It's the same....

So why is the UI showing 21.54GB? I 'Removed' all backups via the UI before erasing the disk, so nothing should have lingered in any database that tracks backups. There are clearly 4 backups on disk.

This all started for me because I am looking at syncing PBS backups over a WAN VPN link and was concerned about the size of the backups. Obviously that is not an issue now, because I have proven to myself that the encryption makes no difference. But I'm not sure why the disk size reporting is showing 3x what is used by the backups.

As far as I understand, the size shown by PBS is not meant to be accurate. It just reports what the filesystem reports as usage, instead of going through all files and folders every time like the du command would, to avoid unnecessary IO.
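The two numbers being compared here can be seen side by side with du and df on the datastore path. A minimal sketch, assuming the datastore path used in this thread; usage_report is a hypothetical helper. Keep in mind that df reports usage for the whole filesystem, so it includes the filesystem's own metadata and anything else stored on it, while du only counts the files under the given directory.

```shell
# Sketch: compare the space taken by the files in a datastore (du) with the
# space the filesystem reports as used (df).
# usage_report is a hypothetical helper for illustration.

usage_report() {
  dir=$1
  # Walks every file under $dir: exact for those files, but slow on big stores
  files_kib=$(du -sk "$dir" 2>/dev/null | cut -f 1)
  # Reads filesystem statistics only, so it includes filesystem overhead.
  # -P forces the POSIX one-line format, so "Used" is always column 3.
  fs_used_kib=$(df -Pk "$dir" | awk 'NR == 2 { print $3 }')
  echo "files (du): ${files_kib} KiB"
  echo "filesystem used (df): ${fs_used_kib} KiB"
}

# Example, using the datastore path from this thread:
# usage_report /mnt/datastore/backup
```

On a datastore that has its filesystem to itself, a large gap between the two lines points at filesystem overhead rather than at the backups.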

It turns out the size shown in PBS is accurate and reflects exactly what the filesystem reports. I removed and recreated the datastore once more, but this time with an ext4 file system. Now it only shows 270MB used.

The reason I was seeing 21GB of usage after creating the XFS file system was file system overhead.
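The figures reported earlier in the thread are consistent with that: subtracting the usage the UI showed for the empty XFS datastore from the usage after the backups leaves roughly what du counted in .chunks. A small worked check, assuming both UI figures use the same unit; du -h reports binary units, so the match with its 7.1G is only approximate.

```shell
# Worked check with the figures reported in this thread.
# Assumption: both PBS Summary figures are in the same unit.
ui_after_backups=21.54   # PBS Summary after the 4 encrypted backups
ui_empty=14.01           # PBS Summary for the freshly created, empty XFS datastore

# awk does the floating-point subtraction that POSIX sh cannot
backups=$(awk -v a="$ui_after_backups" -v b="$ui_empty" 'BEGIN { printf "%.2f", a - b }')
echo "usage added by the backups: ${backups} GB (du reported 7.1G)"
```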
