Hi all,

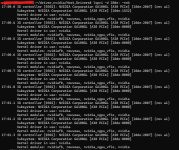

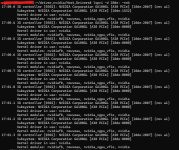

I'm not new to Proxmox, and I'm now installing PVE 8.4 on a Dell R750 for a customer who is evaluating a move away from Broadcom ESXi.

The goal is to enable vGPU on an NVIDIA A30 Tensor Core GPU so that it can be shared between two Windows VMs.

I followed this documentation: https://pve.proxmox.com/wiki/NVIDIA_vGPU_on_Proxmox_VE

- Installed Proxmox VE 8.4 from scratch

- Ran `apt update && apt dist-upgrade -y`

- Enabled all the BIOS features required by the documentation:

  - VT-d for Intel, or AMD-v for AMD (sometimes named IOMMU)

  - SR-IOV (this may not be necessary for older pre-Ampere GPU generations)

  - Above 4G decoding

  - Alternative Routing ID Interpretation (ARI) (not necessary for pre-Ampere GPUs)

- Ran `pve-nvidia-vgpu-helper setup`

- Rebooted

- Installed the NVIDIA driver `NVIDIA-Linux-x86_64-570.148.06-vgpu-kvm.run`

- Rebooted

- Ran `systemctl enable --now pve-nvidia-sriov@ALL.service`

- Rebooted
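
To confirm from inside the OS that the IOMMU setting above actually took effect, one option is to count the IOMMU groups the kernel created; with VT-d inactive there are none. This is a minimal sketch assuming the standard Linux sysfs layout; the function name `check_iommu` and its optional first argument (a sysfs root, default `/sys`) are hypothetical, the argument existing only so the check can be tried on a copied tree.

```shell
# Sketch: report whether the kernel created any IOMMU groups.
# $1 is an optional sysfs root (hypothetical test hook), default /sys.
check_iommu() {
    groups_dir="${1:-/sys}/kernel/iommu_groups"
    count=0
    if [ -d "$groups_dir" ]; then
        # Each subdirectory is one IOMMU group; tr strips any padding from wc.
        count=$(find "$groups_dir" -mindepth 1 -maxdepth 1 -type d | wc -l | tr -d ' ')
    fi
    if [ "$count" -gt 0 ]; then
        echo "IOMMU active: $count groups"
    else
        echo "IOMMU NOT active: no groups under $groups_dir"
        return 1
    fi
}
```

Running `check_iommu` on the host prints the group count, or returns a non-zero status when no groups exist.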

Everything appears to be installed correctly: the service reports no errors, and `lspci` shows all the PCI devices (before the installation I could see only a single entry for the NVIDIA card).
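
The same information can be read straight from sysfs, which shows whether SR-IOV virtual functions are actually enabled on the card and how many. A sketch, assuming NVIDIA's PCI vendor ID `0x10de` and the standard `sriov_totalvfs`/`sriov_numvfs` sysfs attributes; `list_nvidia_vfs` and its optional root argument are hypothetical.

```shell
# Sketch: for every NVIDIA PCI function (vendor 0x10de) that exposes SR-IOV,
# print how many virtual functions are supported and currently enabled.
# $1 is an optional sysfs root (hypothetical test hook), default /sys.
list_nvidia_vfs() {
    devices="${1:-/sys}/bus/pci/devices"
    for dev in "$devices"/*; do
        [ -f "$dev/vendor" ] || continue
        # Skip anything that is not an NVIDIA device.
        [ "$(cat "$dev/vendor")" = "0x10de" ] || continue
        # Only SR-IOV capable physical functions have sriov_totalvfs.
        [ -f "$dev/sriov_totalvfs" ] || continue
        total=$(cat "$dev/sriov_totalvfs")
        enabled=$(cat "$dev/sriov_numvfs")
        echo "$(basename "$dev"): $enabled of $total VFs enabled"
    done
}
```

`list_nvidia_vfs` prints one line per NVIDIA physical function that supports SR-IOV; a line reporting 0 enabled VFs would point at the SR-IOV step rather than at mdev.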

However, when I go to create the resource mapping in the GUI and tick the mediated devices checkbox, no device appears.
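
The same question can be asked from the command line through the Proxmox VE API, which helps separate a GUI problem from a driver problem. This sketch assumes that the API path `/nodes/{node}/hardware/pci/{pci-id}/mdev` lists the mediated device types for a PCI device (verify it with `pvesh usage` on the host before relying on it) and that the node name matches `hostname`; the function name `query_mdev_types` is hypothetical.

```shell
# Sketch: list the mediated device types Proxmox VE reports for one PCI
# function. The API path is an assumption; check it with `pvesh usage`.
query_mdev_types() {
    pci_addr="$1"            # PCI address, e.g. one taken from lspci
    if ! command -v pvesh >/dev/null 2>&1; then
        echo "pvesh not found: run this on the Proxmox VE host" >&2
        return 2
    fi
    node="${2:-$(hostname)}" # node name, defaults to this host
    # An empty result here would match the empty mediated devices view in the GUI.
    pvesh get "/nodes/$node/hardware/pci/$pci_addr/mdev" --output-format json
}
```

For example `query_mdev_types 0000:ca:00.4`, where the address is a placeholder to replace with a real device address from `lspci`.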

I have tried different kernel versions and different NVIDIA driver versions (always the KVM host drivers with vGPU support), but I still can't resolve the problem.

The problem is related to mdev: I can't find any NVIDIA vGPU profile loaded on the system, some paths are missing entirely, and others exist but are missing files.

1) `/sys/class/mdev_bus` is an empty directory.

2) `mdevctl types` produces no output.

3) `mdevctl list` produces no output.

4) `vgpuConfig.xml` is missing (copying it with `cp /usr/share/nvidia/vgpu/vgpuConfig.xml /var/lib/nvidia-vgpu-mgr/vgpuConfig.xml` doesn't solve the problem).
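
The four checks above can be bundled into one function so they are easy to re-run after each reboot or driver change. A minimal sketch: the paths are the ones quoted in the list, `check_mdev_state` and its optional root prefix are hypothetical, and the `mdevctl` calls are skipped when the tool is missing or a prefix is given.

```shell
# Sketch: re-run the mdev checks in one go.
# $1 is an optional filesystem prefix (hypothetical test hook), default "".
check_mdev_state() {
    root="${1:-}"
    bus="$root/sys/class/mdev_bus"

    # 1) Parent devices registered with the mdev framework appear here.
    if [ -d "$bus" ] && [ -n "$(ls -A "$bus")" ]; then
        echo "mdev_bus: $(ls "$bus" | wc -l | tr -d ' ') device(s)"
    else
        echo "mdev_bus: empty or missing"
    fi

    # 2) and 3) mdevctl output, only on the real system with the tool installed.
    if [ -z "$root" ] && command -v mdevctl >/dev/null 2>&1; then
        echo "mdevctl types: $(mdevctl types | wc -l | tr -d ' ') line(s)"
        echo "mdevctl list: $(mdevctl list | wc -l | tr -d ' ') line(s)"
    fi

    # 4) The source and destination paths of the cp command above.
    for f in /usr/share/nvidia/vgpu/vgpuConfig.xml /var/lib/nvidia-vgpu-mgr/vgpuConfig.xml; do
        if [ -f "$root$f" ]; then
            echo "present: $f"
        else
            echo "missing: $f"
        fi
    done
}
```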

Can anyone help me with this? I followed every step of the procedure, but I can't understand why I'm unable to map the GPU resources.

Is my configuration perhaps not fully supported, or could this be a bug?

As a long-time Proxmox user, I’d really like to resolve this issue and demonstrate the full potential of the platform to the client. However, getting vGPU working on ESXi was much faster, and this might discourage the client from choosing Proxmox. I’d really appreciate your support in getting this working so I can properly showcase Proxmox’s capabilities to the customer!

I can provide any logs or other information you need.
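
A sketch for gathering the usual information into one file to attach to the thread. The command list is a suggestion, not an official Proxmox or NVIDIA checklist; `collect_diag` and the default file name `vgpu-diag.txt` are hypothetical, and commands that are not installed are noted instead of aborting the run.

```shell
# Sketch: collect host, driver and service information into one text file.
# $1 is the output file (hypothetical default: vgpu-diag.txt).
collect_diag() {
    out="${1:-vgpu-diag.txt}"
    : > "$out"
    for cmd in \
        "uname -r" \
        "pveversion -v" \
        "nvidia-smi" \
        "lspci -nnk -d 10de:" \
        "systemctl status nvidia-vgpu-mgr pve-nvidia-sriov@ALL --no-pager" \
        "journalctl -b -u nvidia-vgpu-mgr --no-pager" \
        "dmesg | grep -iE 'nvidia|vfio|mdev'"; do
        tool=${cmd%% *}   # first word is the program to look for
        echo "=== $cmd ===" >> "$out"
        if command -v "$tool" >/dev/null 2>&1; then
            # sh -c so the dmesg pipeline works; record failures instead of stopping.
            sh -c "$cmd" >> "$out" 2>&1 || echo "(exit status $?)" >> "$out"
        else
            echo "($tool not installed)" >> "$out"
        fi
    done
    echo "wrote $out"
}
```

Run `collect_diag` as root on the host (some commands, such as `dmesg`, may need root) and attach the resulting file.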