Hi everyone,

I have been using Proxmox to create new servers at home and am fairly new to the software and the concept of servers in general.

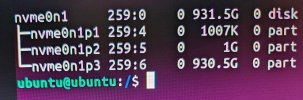

I created a main Proxmox node with a couple of VMs and 4 or 5 LXCs; it is installed on a single NVMe SSD with ZFS (defaults). I also created a cluster with this machine as the main node.

I have a second, empty Proxmox node, initially installed on a single SATA SSD with an LVM setup (defaults). Today I tried to join this second node to the cluster with the first node.

Whilst the joining process was a success, I found that there was some kind of 'question mark' icon in the 'drive' section of the second node. I was doing some reading online and then stupidly decided to edit the storage.cfg file. Additionally, out of panic and stupidity, I may have also deleted some disks from one of the Proxmox nodes, although I cannot remember which one specifically.

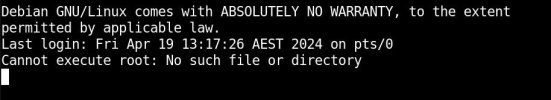

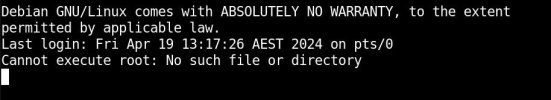

During this editing process I made some changes which have resulted in me seeing the following message when I try to access the shell of the first node:

Reading this leads me to believe that I may have accidentally deleted/cleared the drive containing the root partition of the first Proxmox node. I cannot SSH into this Proxmox host either. The only thing is that I can still see the webGUI of both nodes.

The LXCs and VMs which are running on the first Proxmox node are all still functioning and I can still access the services being hosted on them. The only issue is that when I shut down an LXC or VM, I cannot start it back up; it shows me this error:

Code:

TASK ERROR: zfs error: cannot open 'rpool/subvol-111-disk-0': dataset does not exist

When I try to migrate VMs/LXCs to the second node, I get the following (in addition to the previous error message):

Code:

ERROR: migration aborted (duration 00:00:03): storage migration for 'local-zfs:subvol-105-disk-0' to storage 'local-zfs' failed - zfs error: For further help on a command or topic, run: zfs help [<topic>

I have been playing around in order to try to 'guess' what the original storage.cfg contained. As it stands, the storage.cfg sits at this:

Given that I cannot access the terminal of the first Proxmox node via the Proxmox web console and cannot SSH into it (perhaps due to not enabling SSH directly into the root account of the Proxmox node, or perhaps by actually deleting the root contents on the Proxmox installation drive), is there a way to rescue this? Does the aforementioned information tell me that I actually accidentally deleted the root contents of the first Proxmox install or are these errors purely caused by an incorrect storage.cfg file? If the former is true, am I doomed to wiping this node and restarting from scratch? And if the latter is the case, how can I go about reverse engineering the right storage.cfg file from my current predicament?

Much thanks to anyone reading this