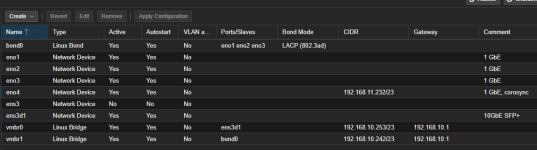

My initial setup used bond0 as the bridge port for vmbr0. It worked but was slow. Today I installed a new 10 GbE NIC (ens3d1) and changed vmbr0 to use ens3d1 instead of bond0. That works for accessing PVE and the cluster, but the VMs can't connect. I then created a new bridge, vmbr1, on bond0. When I set a VM's virtual NIC to vmbr1 in its hardware configuration, the VM connects; when I set it back to vmbr0, it has no network connection. I've rebooted the PVE host and the VMs several times with no change. What am I doing wrong?

EDIT October 13 2026: I got a compatible SFP+ module for the 10 GbE NIC, but the link still shows no carrier/down (I rebooted the host and swapped patch cables to be sure, with no change). I also spent a lot of time trying to download drivers that are no longer available. I'm coming to accept that getting this old NIC, made for a server that's 10 years old, to work isn't going to happen.
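For anyone checking the same "no carrier" symptom, here is a minimal Python sketch that reads the link state the Linux kernel reports under `/sys/class/net/<iface>/carrier`. It assumes a Linux host with sysfs mounted at the usual place; the function name `link_state` and its return labels are hypothetical, and only the interface names ens3d1 and bond0 come from this post. It only reports what the kernel sees; it does not diagnose drivers or SFP+ compatibility.

```python
from pathlib import Path


def link_state(iface: str, sysfs_root: str = "/sys/class/net") -> str:
    """Hypothetical helper: report a NIC's link state from Linux sysfs.

    Returns "up" when the carrier file reads 1, "no-carrier" when it reads 0,
    "admin-down" when reading carrier fails (the kernel refuses to report
    carrier while the interface is administratively down), and "missing"
    when no interface with that name exists under sysfs_root.
    """
    dev = Path(sysfs_root) / iface
    if not dev.exists():
        return "missing"
    try:
        carrier = (dev / "carrier").read_text().strip()
    except OSError:
        # Reading carrier on an interface that is not up raises an error
        # (EINVAL) instead of returning 0 or 1.
        return "admin-down"
    return "up" if carrier == "1" else "no-carrier"


if __name__ == "__main__":
    # Interface names taken from the post; adjust for your own host.
    for name in ("ens3d1", "bond0"):
        print(name, link_state(name))
```

A result of "no-carrier" means the interface is up but the kernel sees no physical link, which points at the module, cable, or NIC rather than the bridge configuration.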
