Hello

I am fairly new to Proxmox and am currently testing whether we can use Proxmox as an alternative to VMware.

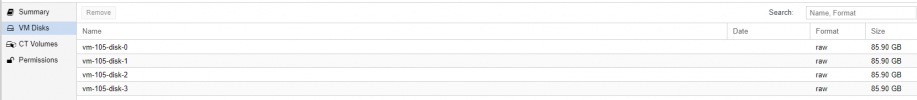

Now I discovered something strange at one test VM. The VM has three disks:

Then the VM has been moved to PVE host 2.

On host 2 I filled the data disks with rsync from our production systems (500GB & 1.3TB)

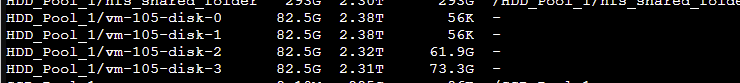

Then I tried to move the VM back to host 1. This failed because host 1 run out of free space (4TB ZFS local storage)

Now I recognized that there are 9 disks for this VM. vm-104-disk-0 to vm-104-disk-8. Each disk three times. The last three are connected to the VM.

What happend there? And can it be, that the discard setting will be lost on a VM migration? Sparse flag is set on each ZFS storage.

Thank you

I am pretty new to Proxmox and currently testing if we can use Proxmox as alternative to VMWare.

Now I have discovered something strange with one of the test VMs. The VM has three disks:

- 32GB - Ubuntu Server

- 1TB ZFS for department data

- 2TB ZFS for another department

Then the VM was moved to PVE host 2.

On host 2, I filled the data disks with rsync from our production systems (500GB and 1.3TB).

Then I tried to move the VM back to host 1. This failed because host 1 ran out of free space (4TB local ZFS storage).

Now I noticed that there are nine disks for this VM, vm-104-disk-0 to vm-104-disk-8, so each disk exists three times. Only the last three are attached to the VM.
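To check which of these volumes are actually in use, a minimal Python sketch could compare the ZFS volume names against the disk lines in the VM configuration. It assumes the usual `scsiN: <storage>:<volume>,<options>` config format and takes plain text as input instead of calling `zfs` or `qm`; the function name, the storage name `local-zfs`, the pool path `rpool/data` and the sample data are all hypothetical.

```python
import re

# Config keys treated as attached disks (an assumed subset; unusedN is deliberately excluded).
DISK_KEY = re.compile(r"^(?:ide|sata|scsi|virtio|efidisk|tpmstate)\d+$")


def unattached_volumes(volume_names, config_text):
    """Return the volumes that no attached-disk line in the config references.

    volume_names: bare names ("vm-104-disk-0") or full dataset paths
    ("rpool/data/vm-104-disk-0"), e.g. taken from `zfs list -H -o name`.
    config_text: contents of the VM config file (hypothetical sample below).
    Volumes listed only as unusedN entries are reported as unattached.
    """
    referenced = set()
    for line in config_text.splitlines():
        key, sep, value = line.partition(":")
        if not sep or not DISK_KEY.match(key.strip()):
            continue  # skip section headers and non-disk keys such as boot or unused0
        # value looks like " local-zfs:vm-104-disk-6,discard=on,size=32G"
        volume_spec = value.strip().split(",", 1)[0]
        referenced.add(volume_spec.split(":", 1)[-1])
    # Compare only the last path component, so full dataset paths also work.
    return sorted(v for v in volume_names if v.rsplit("/", 1)[-1] not in referenced)


# Hypothetical sample data mirroring the situation described above.
volumes = [f"rpool/data/vm-104-disk-{i}" for i in range(9)]
config = """boot: order=scsi0
scsi0: local-zfs:vm-104-disk-6,discard=on,size=32G
scsi1: local-zfs:vm-104-disk-7,discard=on,size=1T
scsi2: local-zfs:vm-104-disk-8,discard=on,size=2T
"""

print(unattached_volumes(volumes, config))
# ['rpool/data/vm-104-disk-0', 'rpool/data/vm-104-disk-1', 'rpool/data/vm-104-disk-2', 'rpool/data/vm-104-disk-3', 'rpool/data/vm-104-disk-4', 'rpool/data/vm-104-disk-5']
```

Taking plain text keeps the sketch testable without a Proxmox node; on a real host the inputs would come from `zfs list -H -o name -t volume` and the VM's config file.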

What happened there? And is it possible that the discard setting is lost during a VM migration? The sparse flag is set on every ZFS storage.

Thank you