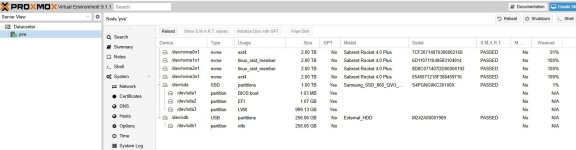

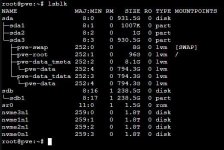

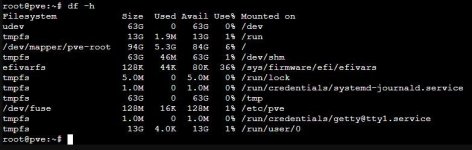

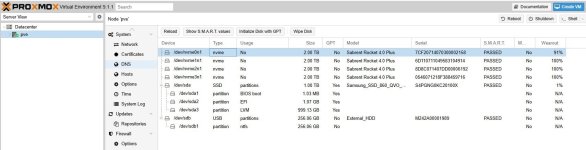

I am brand new to Proxmox. I have put together a server using parts I had lying around, plus some NVMe drives and RAM that I bought for it. The motherboard is an ASRock X299 Taichi. I have quad-channel memory running at 2400 with four 32 GB sticks, for a total of 128 GB of RAM. I purchased an ASUS Hyper M.2 x16 Gen5 card and installed it in PCIe slot #1, with my 4 Sabrent Rocket 4 PLUS 2TB NVMe SSDs mounted on it. In the BIOS I set PCIe slot #1 to x4x4x4x4 to bifurcate the slot into 4 sets of 4 lanes each, one set per NVMe SSD. All of that is working perfectly! I see the 4 NVMe drives in the BIOS. I also have a 1TB Samsung 860 QVO SSD plugged into a SATA port, and Proxmox is installed on that Samsung drive.

My plan was to use the 4 Sabrent Rocket drives for 8TB of storage space. I was getting ready to set them up under ZFS, but when I look at my disks in the Proxmox screen, 2 of the Sabrent Rockets are listed under "Usage" as "linux_raid_member". This concerns me because I never set them up as a RAID, and I don't want to screw things up.

Can I safely ignore this and just allocate all 4 drives to my ZFS storage space? Or do I need to somehow undo the RAID? And if so, how? Thanks in advance for any help. Kresp.