Hello!

I'm just starting to learn Ceph and have run into a question that I can't find a precise answer to anywhere.

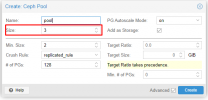

When planning disk space, do I need to keep free space in the pool myself so that, if one server fails, its PGs can be re-replicated to the remaining servers? Or does Ceph already allocate space for the entire pool based on the replication factor?

For example, say I have 4 servers with 4 OSDs of 10 TB and a replication factor of 3 (the pool will be 40 TB).

If I fill all of that space (the full 40 TB), will the pool become completely degraded when one server, or even two, fails, because there is nowhere left to redistribute the PGs?

Or does the system already reserve space for this case, so that I can use all 40 TB?
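
To make the arithmetic concrete, here is a rough sketch of how I currently picture the calculation. It is only my guess, not how Ceph actually accounts for space. It assumes each of the 4 servers holds 4 OSDs of 10 TB (160 TB raw), that every replica must sit on a different server, and that I stop filling at 85% (taken from what I understand to be the default nearfull ratio). The function and parameter names are made up for illustration.

```python
def safe_usable_tb(hosts, osds_per_host, osd_tb, replicas,
                   hosts_to_survive=1, fill_ratio=0.85):
    """Estimate how much data fits while leaving room to re-replicate
    everything after `hosts_to_survive` servers fail.

    Assumptions (mine, not confirmed by Ceph docs in this post):
    - all OSDs are the same size and data is spread evenly;
    - each replica must live on a different host;
    - OSDs should not be filled beyond `fill_ratio`.
    """
    surviving_hosts = hosts - hosts_to_survive
    if surviving_hosts < replicas:
        # With fewer hosts than replicas, a full set of replicas
        # cannot be placed on separate hosts, free space or not.
        raise ValueError("not enough surviving hosts for all replicas")

    # Raw capacity that is still available after the failures.
    surviving_raw_tb = surviving_hosts * osds_per_host * osd_tb

    # Each stored byte costs `replicas` bytes of raw capacity.
    return surviving_raw_tb * fill_ratio / replicas


if __name__ == "__main__":
    estimate = safe_usable_tb(hosts=4, osds_per_host=4, osd_tb=10, replicas=3)
    print(f"Data I could store and still survive one server failure: {estimate:.1f} TB")
```

Under these assumptions, losing two of the four servers leaves only two, which is fewer than the replication factor, so the function raises an error instead of returning a number.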
