Still having the same issue I've always had, and it's really a showstopper for using Proxmox for anything important.

I have a VM imported from a legacy KVM host running an older version of QEMU. Everything runs perfectly fine, except that when you perform a backup in snapshot mode, about 1 in 10 times it breaks the disks completely, to the point where the only resolution is to destroy the VM and restart it, at which point it's corrupted. When the problem occurs you can still log in to the VM (Ubuntu 12 or 14, I think), but you cannot run any commands that require disk access; if you do, you get something like: Input/output error.

Now this issue has gone on long enough; it's been in the forums as unsolved for two years at this point. Usually people assume it's to do with fsfreeze and fsthaw because it throws an error on fsthaw, but it's NOT the guest agent fs-freeze or thaw that is the problem. They just happen to be logging to a writable log, so that is the place where you see the error first, when the thaw realises the disks have vanished. We don't have the guest agent installed, and it makes no difference if you do anyway. It doesn't have to be that VM: simply create a new VM or a container, stick MariaDB on it, set a backup job that backs it up every hour, and soon enough you'll see that your database is broken and queries get stuck. File systems don't become read-only, they vanish entirely.
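For anyone who wants to try reproducing this without waiting on the scheduler, here is a rough sketch of the repeated snapshot-mode backup loop, run as root on a PVE node. The VMID `100` and storage name `local` are placeholders, and `build_vzdump_cmd` and `run_backups` are hypothetical helper names I made up for this post. Note that the vzdump exit code says nothing about whether the guest's disks survived; you still have to check inside the guest after each run.

```python
import subprocess
import time


def build_vzdump_cmd(vmid, storage):
    """Return the vzdump command line for one snapshot-mode backup."""
    return ["vzdump", str(vmid), "--mode", "snapshot", "--storage", storage]


def run_backups(vmid, storage, count, interval_s=3600,
                runner=subprocess.run, sleep=time.sleep):
    """Run `count` snapshot backups and return the exit code of each one.

    `runner` and `sleep` are injectable so the loop can be dry-run
    without touching a real node.
    """
    codes = []
    for i in range(count):
        result = runner(build_vzdump_cmd(vmid, storage))
        codes.append(result.returncode)
        # Wait between runs, but not after the last one.
        if i < count - 1:
            sleep(interval_s)
    return codes
```

Something like `run_backups(100, "local", 10)` mirrors the hourly job described above; given the roughly 1 in 10 failure rate I see, a handful of runs may not be enough to trigger it.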

I run a ZFS scrub on the pool afterwards and everything is 100% fine, no errors detected.
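If you want to script the post-failure health check, this is roughly how it could look. It is only a sketch: it assumes `zpool status -x` prints "all pools are healthy" (or "pool '<name>' is healthy" when a pool name is given) when nothing is wrong, and `pool_reports_healthy` and `check_pool` are hypothetical names, not part of any tool.

```python
import subprocess


def pool_reports_healthy(status_output):
    """Return True if `zpool status -x` output reports no problems."""
    text = status_output.strip()
    if text == "all pools are healthy":
        return True
    # Form printed when a specific pool name was passed on the command line.
    return text.startswith("pool ") and text.endswith(" is healthy")


def check_pool(pool=None, runner=subprocess.run):
    """Run `zpool status -x [pool]` and interpret its output."""
    cmd = ["zpool", "status", "-x"]
    if pool:
        cmd.append(pool)
    result = runner(cmd, capture_output=True, text=True)
    return pool_reports_healthy(result.stdout)
```

In my case this kind of check comes back clean every time, which is the point: the pool is healthy while the guest has lost its disks.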

Another thing I have noticed that could be loosely related: if I have created a snapshot successfully and then create a clone based on it, during the cloning process the exact same thing happens to the source VM. It locks up and the disks are unwritable and unrecoverable until restart. Again, about 1 in 10.

The underlying disks look perfectly fine. They are not using hardware RAID, just attached and combined into a zpool.

Configuration:

It's a Dell PowerEdge 450 with a PERC H745, but I have seen the same issue on others.

PVE is 7.4-3

HA cluster of 3, plus PBS.

Nothing fancy in the config: machine default, disk controller default, BIOS default. PVE settings are largely default, we don't have anything fancy installed, and pretty much the entire config is done via the UI.

It doesn't matter what type of backup you're using; if snapshot mode is selected, the problem occurs. It means you can't really use this in any mission-critical scenario, as I'd hoped. Not only that, but it means you can't use PBS or make use of any of the features in either, because you can't trust it. For a while it looked like backup to PBS wasn't causing the issue, but it is.

All day long we have replication running, every 15 minutes. Isn't that doing exactly the same thing underneath, and if so, why doesn't that cause it to fail?

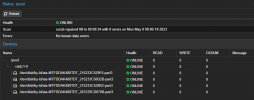

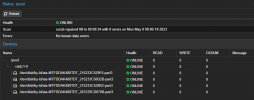

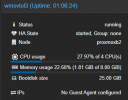

When it screws up:

Bootdisk size here shows "0B".
