Hello! Running PVE as my main home system has been a blast of an experience for the past year, so I just wanna say I appreciate the amazing work developing it open source very much!

The one thing I struggle with on and off, though, is local-zfs usage maxing out. I posted some time ago when my system became unusable because of insufficient space; the solution included deleting snapshots of VMs on local-zfs as well as manually deleting some old VM disk images which, for some reason, were not deleted when I deleted the corresponding VMs via the GUI.

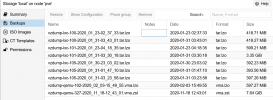

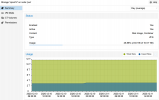

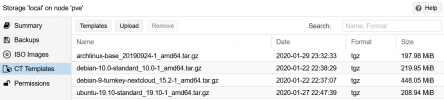

This time it is a bit different, I am afraid. The Summary of my pve node shows HD space (root) at 98.99% (264.4 GiB of 267.14 GiB), and

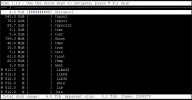

`zfs list` shows:

    NAME                           USED  AVAIL  REFER  MOUNTPOINT
    rpool                          443G  2.71G   104K  /rpool
    rpool/ROOT                     264G  2.71G    96K  /rpool/ROOT
    rpool/ROOT/pve-1               264G  2.71G   264G  /
    rpool/data                     178G  2.71G   136K  /rpool/data
    rpool/data/subvol-100-disk-0  1.11G  2.71G  1.11G  /rpool/data/subvol-100-disk-0
    rpool/data/subvol-101-disk-0  35.3G     0B    32G  /rpool/data/subvol-101-disk-0
    rpool/data/subvol-103-disk-0  1.44G  2.71G  1.01G  /rpool/data/subvol-103-disk-0
    rpool/data/subvol-104-disk-0  42.4G  2.71G  42.4G  /rpool/data/subvol-104-disk-0
    rpool/data/subvol-105-disk-0   852M  2.71G   852M  /rpool/data/subvol-105-disk-0
    rpool/data/subvol-105-disk-1   861M  2.71G   861M  /rpool/data/subvol-105-disk-1
    rpool/data/subvol-105-disk-2    96K  2.71G    96K  /rpool/data/subvol-105-disk-2
    rpool/data/vm-102-disk-0      1.82G  2.71G  1.82G  -
    rpool/data/vm-201-disk-0       192K  2.71G   192K  -
    rpool/data/vm-201-disk-1      94.7G  2.71G  94.7G  -

and `zfs list -o space` shows:

    NAME                          AVAIL   USED  USEDSNAP  USEDDS  USEDREFRESERV  USEDCHILD
    rpool                         2.70G   443G        0B    104K             0B       443G
    rpool/ROOT                    2.70G   264G        0B     96K             0B       264G
    rpool/ROOT/pve-1              2.70G   264G        0B    264G             0B         0B
    rpool/data                    2.70G   178G        0B    136K             0B       178G
    rpool/data/subvol-100-disk-0  2.70G  1.11G        0B   1.11G             0B         0B
    rpool/data/subvol-101-disk-0     0B  35.3G     3.31G     32G             0B         0B
    rpool/data/subvol-103-disk-0  2.70G  1.44G      448M   1.01G             0B         0B
    rpool/data/subvol-104-disk-0  2.70G  42.4G     28.6M   42.4G             0B         0B
    rpool/data/subvol-105-disk-0  2.70G   852M        0B    852M             0B         0B
    rpool/data/subvol-105-disk-1  2.70G   861M        0B    861M             0B         0B
    rpool/data/subvol-105-disk-2  2.70G    96K        0B     96K             0B         0B
    rpool/data/vm-102-disk-0      2.70G  1.82G        0B   1.82G             0B         0B
    rpool/data/vm-201-disk-0      2.70G   192K        0B    192K             0B         0B
    rpool/data/vm-201-disk-1      2.70G  94.7G        0B   94.7G             0B         0B

The situation I had previously was different: back then the root filesystem was not the issue, sitting at around 35 GB, but rather rpool/data:

    NAME              AVAIL   USED  USEDSNAP  USEDDS  USEDREFRESERV  USEDCHILD
    rpool             35.0G   411G        0B    104K             0B       411G
    rpool/ROOT        35.0G  34.7G        0B     96K             0B      34.7G
    rpool/ROOT/pve-1  35.0G  34.7G        0B   34.7G             0B         0B
    rpool/data        35.0G   376G        0B    144K             0B       376G

I have recently migrated most of my larger VM discs away from that SSD to another drive via "Move Disk" in the GUI under Hardware/Resources.
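To put the two `zfs list -o space` outputs side by side, here is a minimal Python sketch that parses a few rows copied from them and prints how USED changed per dataset. The helper names (`to_gib`, `parse_space`) are made up for illustration, and the sketch assumes the K/M/G suffixes are powers of 1024. With the numbers from this post, rpool/ROOT/pve-1 grew by about 229.3 GiB while rpool/data shrank by about 198 GiB, and the root dataset's usage is all in USEDDS (its own data), none in snapshots. That only narrows down where to look; it does not say what the data is.

```python
# Multipliers for the size suffixes printed by `zfs list` (assumed powers of 1024).
UNITS = {"B": 1, "K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}


def to_gib(text):
    """Convert a size string such as '264G', '448M' or '0B' to GiB."""
    return float(text[:-1]) * UNITS[text[-1]] / 1024**3


def parse_space(text):
    """Parse `zfs list -o space` text into {dataset name: {column: GiB}}."""
    lines = text.strip().splitlines()
    columns = lines[0].split()[1:]  # every column except NAME
    table = {}
    for line in lines[1:]:
        name, *values = line.split()
        table[name] = {col: to_gib(val) for col, val in zip(columns, values)}
    return table


# Rows copied from the earlier output in this post.
EARLIER = """
NAME AVAIL USED USEDSNAP USEDDS USEDREFRESERV USEDCHILD
rpool 35.0G 411G 0B 104K 0B 411G
rpool/ROOT/pve-1 35.0G 34.7G 0B 34.7G 0B 0B
rpool/data 35.0G 376G 0B 144K 0B 376G
"""

# The same datasets from the current output in this post.
NOW = """
NAME AVAIL USED USEDSNAP USEDDS USEDREFRESERV USEDCHILD
rpool 2.70G 443G 0B 104K 0B 443G
rpool/ROOT/pve-1 2.70G 264G 0B 264G 0B 0B
rpool/data 2.70G 178G 0B 136K 0B 178G
"""

earlier = parse_space(EARLIER)
now = parse_space(NOW)

# Change in USED per dataset between the two outputs.
for name in earlier:
    before = earlier[name]["USED"]
    after = now[name]["USED"]
    print(f"{name}: {before:.1f} -> {after:.1f} GiB ({after - before:+.1f})")

# How the root dataset's current usage splits into snapshots and own data.
root = now["rpool/ROOT/pve-1"]
print(f"pve-1 snapshots: {root['USEDSNAP']:.1f} GiB, own data: {root['USEDDS']:.1f} GiB")
```

The same functions should work on the full output if it is pasted in with one dataset per line.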

`zfs list` as well as `ls /rpool/data/` seem to confirm that the move worked; unfortunately, I can't say whether the rpool/ROOT usage was still as low as 35 GB right before that.

Another couple of notes: I tried to update the host today and saw 4 log lines:

Error: command 'usr/bin/termproxy 5900 --path /nodes/pve --perm Sys.Console -- /usr/bin/pveupgrade --shell' failed: exit code 1'

and 3 times like

'command '/usr/bin/termproxy 5903 --path /nodes/pve --perm Sys.Console -- /bin/login -f root' failed: exit code 1'.

I am not sure what to make of these, except maybe that due to insufficient space, some updates didn't go through properly (worst case) or just threw some errors but went through fine (better case).

The fragmentation of the SSD is also quite high at 68%. Could that be one factor contributing to the issue?

As I understand it, the Proxmox host itself requires very little space (around 32 GB should suffice), so I don't know where the root of the problem is here. Any help in resolving it is greatly appreciated. I hope the system is not doomed yet.
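Since the usage sits in the root dataset itself, one way to narrow it down would be to total the file sizes per top-level directory while staying on the root filesystem, so that other datasets and mounts are skipped. Below is a rough Python sketch of that idea. The function names are hypothetical, and it sums apparent file sizes (`st_size`), so sparse or compressed files can differ from what ZFS actually allocates.

```python
import os


def same_device(path, device):
    """Return True if `path` lives on the filesystem identified by `device`."""
    try:
        return os.lstat(path).st_dev == device
    except OSError:
        return False


def largest_dirs(root="/", top=10):
    """Return up to `top` (path, bytes) pairs for the directories directly
    under `root`, largest first, without crossing filesystem boundaries."""
    root_dev = os.lstat(root).st_dev
    totals = {}
    for entry in os.scandir(root):
        if not entry.is_dir(follow_symlinks=False):
            continue
        if not same_device(entry.path, root_dev):
            continue  # mounted from elsewhere, e.g. /proc or another dataset
        total = 0
        for dirpath, dirnames, filenames in os.walk(entry.path, onerror=lambda err: None):
            # Prune subdirectories that are mount points of other filesystems.
            dirnames[:] = [d for d in dirnames
                           if same_device(os.path.join(dirpath, d), root_dev)]
            for name in filenames:
                try:
                    total += os.lstat(os.path.join(dirpath, name)).st_size
                except OSError:
                    continue  # file vanished or is unreadable
        totals[entry.path] = total
    ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)
    return ranked[:top]


# Example call (run as root on the host; may take a while on a full disk):
# for path, size in largest_dirs("/"):
#     print(f"{size / 1024**3:8.1f} GiB  {path}")
```

This is only meant to show which directory to look into next. It cannot see files that are hidden underneath an active mount point.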
