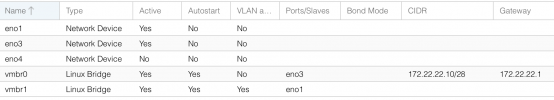

My goal is to connect two nodes directly with an SFP+ 10GbE DAC cable for ZFS replication. Both nodes have the same network card, which has four ports in total: two 10GbE SFP+ ports (eno1 and eno2) and two 1GbE ports (eno3 and eno4). Ports eno1 and eno3 are connected to the switch, and port eno2 is connected directly to the other node. The problem is that eno2 doesn't even show up as a network device in Proxmox on either node.

How do I go about this? I tried manually adding eno2 to the interfaces file and creating a bridge on both nodes with the IP addresses 172.23.23.1/30 and 172.23.23.2/30, but the nodes can't ping each other and I'm not sure why. Below is my /etc/network/interfaces file from before I made any changes:

```
auto lo
iface lo inet loopback

iface eno3 inet manual

iface eno4 inet manual

iface eno1 inet manual

auto vmbr0
iface vmbr0 inet static
        address 172.22.22.10/28
        gateway 172.22.22.1
        bridge-ports eno3
        bridge-stp off
        bridge-fd 0

iface vmbr1 inet manual
        bridge-ports eno1
        bridge-stp off
        bridge-fd 0
        bridge-vlan-aware yes
        bridge-vids 2-4094
#Datacenter
```
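For reference, a direct-link stanza along the lines of the attempt described above might look like this on the first node. It is a sketch under assumptions, not the exact config that was tested: the bridge name vmbr2 is hypothetical, the second node would use 172.23.23.2/30 instead, and no gateway is set because the /30 link is point-to-point between the two nodes only.

```
auto eno2
iface eno2 inet manual

# Hypothetical bridge for the direct DAC link to the other node
auto vmbr2
iface vmbr2 inet static
        address 172.23.23.1/30
        bridge-ports eno2
        bridge-stp off
        bridge-fd 0
```

If ifupdown2 is installed, `ifreload -a` applies the change without a reboot, and `ip link show eno2` shows whether the kernel sees the port at all.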