[SOLVED] Different RAM Usage between GUI and Shell

- Thread starter Toormser

Please try to use the search function. This gets asked at least once a week.

For a short answer:

Run "free -h" instead of "top" or "htop".

Proxmox shows you how much of the physical RAM the VM is actually using (at least for Linux guests). So what Proxmox reports as used corresponds to used + shared + buff/cache in the output of "free -h", and what it reports as free corresponds to free.

With top/htop you are misreading the numbers. "3.65G of 15.6G" doesn't mean that 11.95 GB are actually free. It only means that 11.95 GB are available to the guest for services and so on. But available RAM is still used, not free: Linux uses RAM for caching. That RAM is then in use (so no other VM and not the host can use it), but for the guest itself it is still available, because the guest can drop the cached data to free up RAM for services when it is running low.
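To see both views side by side, here is a minimal Python sketch that works on the contents of /proc/meminfo from a Linux guest. It assumes a kernel that exposes MemAvailable (the field "free -h" uses for its "available" column); the function names are hypothetical. "not_free" (MemTotal minus MemFree, page cache included) is roughly the "used" side described above, while "available" is what services could still get.

```python
"""Compare "not free" RAM with "available" RAM inside a Linux guest.

Sketch only: assumes a Linux kernel that exposes MemAvailable
in /proc/meminfo (values there are in KiB).
"""


def parse_meminfo(text: str) -> dict[str, int]:
    """Parse /proc/meminfo-style text into {field: first numeric value}."""
    values = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        fields = rest.split()
        if sep and fields:
            values[key.strip()] = int(fields[0])
    return values


def memory_views(info: dict[str, int]) -> dict[str, int]:
    """Return the two views discussed above, in KiB."""
    total = info["MemTotal"]
    return {
        "total": total,
        # Everything that is not completely free, page cache included:
        # roughly the "used" side as seen from outside the guest.
        "not_free": total - info["MemFree"],
        # What services could still get, because the kernel can drop cache.
        "available": info["MemAvailable"],
    }
```

Inside the guest, memory_views(parse_meminfo(open("/proc/meminfo").read())) returns both numbers. A large "not_free" together with a large "available" is exactly the situation described above: the RAM is occupied by cache, not by services.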

I apologize for my question, but... keeping in mind that "for the guest itself it is still available because the guest can drop the cached data to free up RAM", does that mean the VM could be given less memory and still work fine?

In other words, which of these values should I use to decide whether I can reduce the amount of memory assigned to the VM?

I would really appreciate your opinion.

Regards,

Carlos.

Monitor from the guest OS, over several days, how much RAM is "available" inside the VM. Check the biggest spike in RAM usage, and if at that time there is still plenty of RAM available, you can lower the amount of RAM you assign to that VM.

Basically, you want to assign a VM as little RAM as possible, but not so little that processes get killed by the OOM killer.
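The rule of thumb above can be sketched in Python, assuming you already have a series of "available" samples (in MiB) collected inside the guest over several days. The lowest sample marks the biggest spike, so the peak demand is roughly the assigned size minus that value. The 25 % reserve and the 512 MiB floor are purely hypothetical knobs for illustration, not values recommended in this thread.

```python
import math


def suggest_allocation_mib(
    assigned_mib: int,
    available_samples_mib: list[int],
    reserve_fraction: float = 0.25,  # hypothetical safety margin
    floor_mib: int = 512,  # hypothetical lower bound
) -> int:
    """Suggest a VM memory size from "available" samples taken in the guest.

    The smallest "available" sample marks the biggest spike, so the peak
    demand is roughly assigned - min(available). This treats the guest's
    MemTotal as equal to the assigned size, which slightly overstates usage.
    """
    if not available_samples_mib:
        raise ValueError("need at least one sample")
    peak_used_mib = assigned_mib - min(available_samples_mib)
    suggested = math.ceil(peak_used_mib * (1 + reserve_fraction))
    # Never suggest more than is assigned today or less than the floor.
    return max(floor_mib, min(assigned_mib, suggested))
```

For example, a VM with 16000 MiB assigned whose lowest "available" sample was 9000 MiB peaked at about 7000 MiB in use, so suggest_allocation_mib(16000, [12000, 9000, 10500]) returns 8750. After shrinking the VM, keep watching it; if processes get OOM-killed, the reserve was too small.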

Thank you for your fast and useful response.

So, if I have understood it right, the key is to run "free -h" and check the "available" value several times, in different situations. In principle, almost all of that available memory could be removed from the memory allocated in the VM configuration.

Have I understood it correctly?

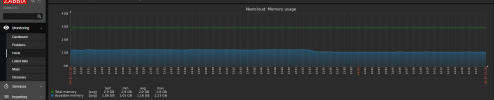

That would be the manual way. I run the Zabbix agent inside each VM. It collects the RAM usage (and hundreds of other metrics) every minute and sends it to the Zabbix server, which stores the data and draws nice graphs, so I can see the min/avg/max values of "available" RAM (the same value that "free -h" reports) over any period I choose.

It's a good idea to have proper monitoring set up, not only to make better decisions based on long-term metrics but also to get notified when something isn't behaving as it should. For example, it warns me early if any VM is getting low on available RAM, so I can fix things and look for memory leaks or the like before services get killed.
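Zabbix does this properly. As a rough do-it-yourself stand-in, the Python sketch below polls MemAvailable from /proc/meminfo inside a Linux guest, prints a warning when available RAM drops below a threshold, and returns min/avg/max over the run. The 60-second interval, the sample count and the 10 % threshold are hypothetical defaults, and this is not how the Zabbix agent works internally.

```python
"""Poor man's RAM monitoring inside a Linux guest (illustrative sketch).

Assumptions: Linux guest exposing MemAvailable in /proc/meminfo;
the interval, sample count and warning threshold are hypothetical.
"""
import statistics
import time
from pathlib import Path
from typing import Optional


def read_meminfo_mib(path: str = "/proc/meminfo") -> dict[str, float]:
    """Return MemTotal and MemAvailable in MiB (the file reports KiB)."""
    wanted = {"MemTotal", "MemAvailable"}
    values = {}
    for line in Path(path).read_text().splitlines():
        key, _, rest = line.partition(":")
        if key in wanted:
            values[key] = int(rest.split()[0]) / 1024
    missing = wanted - set(values)
    if missing:
        raise RuntimeError(f"missing fields in {path}: {sorted(missing)}")
    return values


def min_avg_max(samples: list[float]) -> dict[str, float]:
    """Summarize "available" samples the way a monitoring graph would."""
    return {"min": min(samples), "avg": statistics.fmean(samples), "max": max(samples)}


def low_memory_warning(
    total_mib: float, available_mib: float, min_fraction: float = 0.10
) -> Optional[str]:
    """Return a warning if less than `min_fraction` of RAM is available."""
    fraction = available_mib / total_mib
    if fraction < min_fraction:
        return (f"only {available_mib:.0f} of {total_mib:.0f} MiB available "
                f"({fraction:.0%})")
    return None


def poll(interval_s: float = 60.0, count: int = 60) -> dict[str, float]:
    """Sample every `interval_s` seconds, warn on low RAM, return min/avg/max."""
    samples = []
    for i in range(count):
        info = read_meminfo_mib()
        samples.append(info["MemAvailable"])
        warning = low_memory_warning(info["MemTotal"], info["MemAvailable"])
        if warning:
            print("WARNING:", warning)
        if i < count - 1:
            time.sleep(interval_s)
    return min_avg_max(samples)
```

Calling poll(interval_s=60, count=1440) would cover one day; the "min" value it returns is the number to look at when deciding whether the VM can get less RAM.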

Thank you, I'll try it.

Thanks for your great support.