Hello, thanks again for the hints.

A small (unlucky) update:

First, I offlined the disk and wiped it from PVE as you suggested, then cloned the partition table:

```
root@pve:~# sfdisk /dev/nvme0n1 < part_table
Checking that no-one is using this disk right now ... OK

Disk /dev/nvme0n1: 476.94 GiB, 512110190592 bytes, 1000215216 sectors
Disk model: Lexar SSD NM620 512GB
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

>>> Script header accepted.
>>> Script header accepted.
>>> Script header accepted.
>>> Script header accepted.
>>> Script header accepted.
>>> Script header accepted.
>>> Script header accepted.
>>> Created a new GPT disklabel (GUID: 6601EEB0-BDAF-4C63-B0E8-607AEAA81B5F).
/dev/nvme0n1p1: Created a new partition 1 of type 'BIOS boot' and of size 1007 KiB.
/dev/nvme0n1p2: Created a new partition 2 of type 'EFI System' and of size 1 GiB.
/dev/nvme0n1p3: Created a new partition 3 of type 'Solaris /usr & Apple ZFS' and of size 464 GiB.
/dev/nvme0n1p4: Done.

New situation:
Disklabel type: gpt
Disk identifier: 6601EEB0-BDAF-4C63-B0E8-607AEAA81B5F

Device           Start       End   Sectors  Size Type
/dev/nvme0n1p1      34      2047      2014 1007K BIOS boot
/dev/nvme0n1p2    2048   2099199   2097152    1G EFI System
/dev/nvme0n1p3 2099200 975175680 973076481  464G Solaris /usr & Apple ZFS

The partition table has been altered.
Calling ioctl() to re-read partition table.
Syncing disks.

root@pve:~# cat part_table
label: gpt
label-id: 6601EEB0-BDAF-4C63-B0E8-607AEAA81B5F
device: /dev/nvme0n1
unit: sectors
first-lba: 34
last-lba: 1000215182
sector-size: 512

/dev/nvme0n1p1 : start= 34, size= 2014, type=21686148-6449-6E6F-744E-656564454649, uuid=297EF39F-A168-45DE-AC52-6D7AC37458DF
/dev/nvme0n1p2 : start= 2048, size= 2097152, type=C12A7328-F81F-11D2-BA4B-00A0C93EC93B, uuid=E1E90EDA-C198-428C-8CF2-4D9BD3F670F8
/dev/nvme0n1p3 : start= 2099200, size= 973076481, type=6A898CC3-1DD2-11B2-99A6-080020736631, uuid=3CFBF49C-7289-4068-8A44-E2EFD0FB0892
```
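Each partition line in the dump gives `start` and `size` in sectors (`unit: sectors`), so a partition's last sector is `start + size - 1`, and those values should match the End column sfdisk printed under "New situation". A minimal POSIX sh/awk sketch of that check, assuming the `name : start= N, size= N, ...` line layout shown above; `sfdisk_dump_ends` is a hypothetical helper name, not part of sfdisk.

```shell
# Hypothetical helper: print "<device> <first sector> <last sector>" for each
# partition line of an sfdisk dump file (sizes are counted in sectors,
# per "unit: sectors"). The last sector is start + size - 1.
sfdisk_dump_ends() {
    awk -F'[:,]' '
        /^\/dev\// {
            dev = $1
            sub(/[ \t]+$/, "", dev)          # drop the space before the colon
            start = 0; size = 0
            for (i = 2; i <= NF; i++) {
                v = $i
                if (v ~ /start=/)     { sub(/.*start=/, "", v); start = v + 0 }
                else if (v ~ /size=/) { sub(/.*size=/, "", v);  size = v + 0 }
            }
            # %.0f avoids 32-bit integer limits in some awk implementations
            printf "%s %.0f %.0f\n", dev, start, start + size - 1
        }
    ' "${1:--}"
}
```

Running `sfdisk_dump_ends part_table` on the dump above should print `/dev/nvme0n1p1 34 2047`, `/dev/nvme0n1p2 2048 2099199` and `/dev/nvme0n1p3 2099200 975175680`, i.e. the same boundaries as the "New situation" table.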

Then, following the wiki, I formatted the EFI partition:

```
root@pve:~# proxmox-boot-tool format /dev/nvme0n1p2
UUID="" SIZE="1073741824" FSTYPE="" PARTTYPE="c12a7328-f81f-11d2-ba4b-00a0c93ec93b" PKNAME="nvme0n1" MOUNTPOINT=""
Formatting '/dev/nvme0n1p2' as vfat..
mkfs.fat 4.2 (2021-01-31)
Done.
```

Then I ran the init:

```
root@pve:~# proxmox-boot-tool init /dev/nvme0n1p2
Re-executing '/usr/sbin/proxmox-boot-tool' in new private mount namespace..
UUID="C54D-3B8F" SIZE="1073741824" FSTYPE="vfat" PARTTYPE="c12a7328-f81f-11d2-ba4b-00a0c93ec93b" PKNAME="nvme0n1" MOUNTPOINT=""
Mounting '/dev/nvme0n1p2' on '/var/tmp/espmounts/C54D-3B8F'.
Installing systemd-boot..
Created "/var/tmp/espmounts/C54D-3B8F/EFI/systemd".
Created "/var/tmp/espmounts/C54D-3B8F/EFI/BOOT".
Created "/var/tmp/espmounts/C54D-3B8F/loader".
Created "/var/tmp/espmounts/C54D-3B8F/loader/entries".
Created "/var/tmp/espmounts/C54D-3B8F/EFI/Linux".
Copied "/usr/lib/systemd/boot/efi/systemd-bootx64.efi" to "/var/tmp/espmounts/C54D-3B8F/EFI/systemd/systemd-bootx64.efi".
Copied "/usr/lib/systemd/boot/efi/systemd-bootx64.efi" to "/var/tmp/espmounts/C54D-3B8F/EFI/BOOT/BOOTX64.EFI".
Random seed file /var/tmp/espmounts/C54D-3B8F/loader/random-seed successfully written (32 bytes).
Created EFI boot entry "Linux Boot Manager".
Configuring systemd-boot..
Unmounting '/dev/nvme0n1p2'.
Adding '/dev/nvme0n1p2' to list of synced ESPs..
Refreshing kernels and initrds..
Running hook script 'proxmox-auto-removal'..
Running hook script 'zz-proxmox-boot'..
Copying and configuring kernels on /dev/disk/by-uuid/58D9-77ED
        Copying kernel and creating boot-entry for 6.5.11-4-pve
WARN: /dev/disk/by-uuid/58D9-E5FE does not exist - clean '/etc/kernel/proxmox-boot-uuids'! - skipping
Copying and configuring kernels on /dev/disk/by-uuid/C54D-3B8F
        Copying kernel and creating boot-entry for 6.5.11-4-pve
```

Now, following the warning, I guess I should remove the stale 58D9-E5FE UUID from /etc/kernel/proxmox-boot-uuids, but I haven't done that yet.
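The WARN line is about an entry in `/etc/kernel/proxmox-boot-uuids` whose `/dev/disk/by-uuid/` link no longer exists; the new ESP (C54D-3B8F) was copied to successfully, so that one should stay. A minimal sketch of that check, assuming the UUID is the first whitespace-separated field on each line of the file; `stale_boot_uuids` is a hypothetical name, and the optional second argument only exists so the function can be tried against a scratch directory instead of the real `/dev/disk/by-uuid`.

```shell
# Hypothetical helper: print every UUID listed in a proxmox-boot-uuids style
# file (assumed: UUID is the first field of each line) that has no matching
# entry in the by-uuid directory, i.e. the condition the WARN line reports.
stale_boot_uuids() {
    uuid_file=$1
    byuuid_dir=${2:-/dev/disk/by-uuid}
    # "|| [ -n ... ]" also handles a last line without a trailing newline
    while read -r uuid _rest || [ -n "$uuid" ]; do
        case $uuid in
            ''|'#'*) continue ;;   # skip blank lines and comments
        esac
        [ -e "$byuuid_dir/$uuid" ] || printf '%s\n' "$uuid"
    done < "$uuid_file"
}
```

On the real host, the warning itself says to clean the file, which is what `proxmox-boot-tool clean` is for; the sketch only shows which entry that would drop (here it should be 58D9-E5FE).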

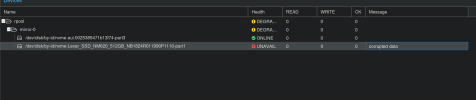

Finally, I brought the device back online in the zpool, but it still shows as faulted, both from the CLI and in the PVE GUI:

```
root@pve:~# zpool online rpool nvme-Lexar_SSD_NM620_512GB_NB1824R011990P1110
warning: device 'nvme-Lexar_SSD_NM620_512GB_NB1824R011990P1110' onlined, but remains in faulted state
use 'zpool replace' to replace devices that are no longer present
```
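The hint in that output points at `zpool replace`. For reference, the replace step in the Proxmox VE documentation for changing a failed bootable device has roughly the form `zpool replace -f <pool> <old zfs partition> <new zfs partition>`; with the partition layout above, the new ZFS partition would presumably be partition 3 (the `-part3` link under `/dev/disk/by-id`), not the whole disk or partition 1. A dry-run sketch that only prints the command after checking that the partition link exists; `print_zpool_replace` and `OLD_MEMBER` are hypothetical placeholders, and the real old member name has to be taken from `zpool status rpool`.

```shell
# Hypothetical dry-run helper: print (never run) a zpool replace command that
# swaps a faulted pool member for partition 3 of the given disk.
# Assumes the ZFS data partition is partition 3 and that udev names it
# "<disk by-id name>-part3" in the by-id directory.
print_zpool_replace() {
    pool=$1
    old_member=$2
    disk_id=$3
    byid_dir=${4:-/dev/disk/by-id}
    new_part="$byid_dir/$disk_id-part3"
    if [ ! -e "$new_part" ]; then
        printf 'no such partition: %s\n' "$new_part" >&2
        return 1
    fi
    printf 'zpool replace -f %s %s %s\n' "$pool" "$old_member" "$new_part"
}
```

For example, `print_zpool_replace rpool OLD_MEMBER nvme-Lexar_SSD_NM620_512GB_NB1824R011990P1110` would print the command with `/dev/disk/by-id/nvme-Lexar_SSD_NM620_512GB_NB1824R011990P1110-part3` as the new device, so it can be reviewed before running anything by hand.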

I feel this is really over my head, so any hints would be much appreciated. Thank you again!

Edit: I guess the (or an) issue may be that the rpool contains a part3 ZFS data partition plus part1 of the Lexar, which was supposed to be the BIOS boot partition. I suspect this is why it reports corrupted data, and that I should somehow switch the partition in the rpool and maybe start the process over again, since /dev/nvme0n1p1 is wrongly being used as ZFS (?)

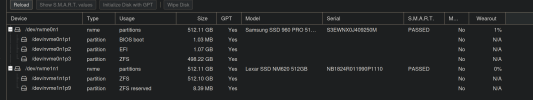

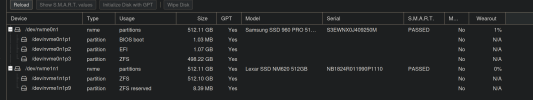

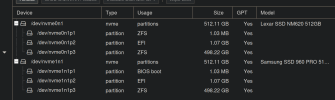
