Hello.

We have run into a problem: we are creating a virtual disk on an H700 RAID card and then building an OSD on it for our Ceph cluster.

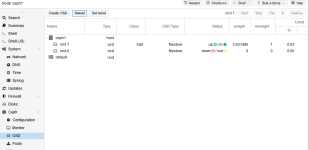

We found that unless we specifically build the OSD as FileStore instead of BlueStore, the OSD does not appear in the OSD UI.

We can use `ceph osd crush add osd.0 0 host=ceph1` to bring it back into the UI, but it is then shown as down/out with Used 0. Clicking In or Start makes no difference.

However, if we create it as FileStore with `pveceph createosd /dev/sdb -bluestore 0`, the OSD appears instantly without any problem (up/in, Used 0.0.3).

Is this because the RAID card interferes with the MBR/GPT partition table on the virtual disk, so the BlueStore OSD cannot function correctly?

Has anyone else run into this?