Delay of 1 node for reconnection on nfs.

- Thread starter frankz

- Start date


Sounds like a network layer issue. There could be a hundred different reasons, from a flaky network cable to a misconfigured MTU.

You should start by examining the log: journalctl -b

Continue by looking at the network interface statistics, checking for errors.

Follow that by running things in debug mode, like "pvestatd" and others.

A network trace analysis of the initial connection would probably give you an immediate clue, but it requires some advanced skills.

Blockbridge: Ultra low latency all-NVME shared storage for Proxmox - https://www.blockbridge.com/proxmox
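The interface-statistics step above could be sketched as follows. This is a minimal illustration, not something posted in the thread: the helper name `nic_errors` is made up, and it assumes a Linux node where the kernel exposes per-interface counters in /proc/net/dev (the same kind of error and drop counters that `ip -s link` reports).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print error and drop counters for every interface.
# Reads /proc/net/dev by default; another file can be passed for testing.
nic_errors() {
    local file=${1:-/proc/net/dev}
    # The first two lines are headers. After the interface name come 8 receive
    # columns (bytes packets errs drop ...) followed by 8 transmit columns.
    awk 'NR > 2 {
        sub(/:/, " ")
        printf "%s rx_errs=%s rx_drop=%s tx_errs=%s tx_drop=%s\n", $1, $4, $5, $12, $13
    }' "$file"
}
```

Calling `nic_errors` a few times right after boot and comparing the numbers shows whether errors or drops keep growing on the interface that carries NFS; `journalctl -b -p warning` narrows the boot log to warnings and worse.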


Thank you for now. I will check as you suggested.

I checked the traceroute on the segment and it is correct:

traceroute to 192.168.2.99 (192.168.2.99), 30 hops max, 60 byte packets

1 hpnas.internal2.lan (192.168.2.99) 0.608 ms 0.591 ms 0.583 ms

root@pve:~# traceroute 192.168.2.100

traceroute to 192.168.2.100 (192.168.2.100), 30 hops max, 60 byte packets

1 192.168.2.100 (192.168.2.100) 0.133 ms 0.123 ms 0.117 ms

The network cable is fine.

In the logs I found this:

Apr 12 14:04:48 pve kernel: DMAR: [Firmware Bug]: No firmware reserved region can cover this RMRR [0x000000007d000000-0x000000007f7fffff], >

Apr 12 14:04:48 pve kernel: x86/cpu: SGX disabled by BIOS.

Apr 12 14:04:48 pve kernel: r8169 0000:01:00.0: can't disable ASPM; OS doesn't have ASPM control

Apr 12 14:05:06 pve pmxcfs[2510]: [quorum] crit: quorum_initialize failed: 2

Apr 12 14:05:06 pve pmxcfs[2510]: [quorum] crit: can't initialize service

Apr 12 14:05:06 pve pmxcfs[2510]: [confdb] crit: cmap_initialize failed: 2

Apr 12 14:05:06 pve pmxcfs[2510]: [confdb] crit: can't initialize service

Apr 12 14:05:06 pve pmxcfs[2510]: [dcdb] crit: cpg_initialize failed: 2

Apr 12 14:05:06 pve pmxcfs[2510]: [dcdb] crit: can't initialize service

Apr 12 14:05:06 pve pmxcfs[2510]: [status] crit: cpg_initialize failed: 2

Apr 12 14:05:06 pve pmxcfs[2510]: [status] crit: can't initialize service

Apr 12 14:05:08 pve corosync[2607]: [KNET ] host: host: 1 has no active links

Apr 12 14:05:08 pve corosync[2607]: [KNET ] host: host: 2 (passive) best link: 0 (pri: 1)

Apr 12 14:05:08 pve corosync[2607]: [KNET ] host: host: 2 has no active links

Apr 12 14:05:08 pve corosync[2607]: [KNET ] host: host: 2 (passive) best link: 0 (pri: 1)

Apr 12 14:05:08 pve corosync[2607]: [KNET ] host: host: 2 has no active links

Apr 12 14:05:08 pve corosync[2607]: [KNET ] host: host: 2 (passive) best link: 0 (pri: 1)

Apr 12 14:05:08 pve corosync[2607]: [KNET ] host: host: 2 has no active links

Apr 12 14:04:52 pve systemd[1]: nfs-config.service: Succeeded.

Apr 12 14:05:23 pve kernel: FS-Cache: Netfs 'nfs' registered for caching

Apr 12 14:05:23 pve systemd[1]: nfs-config.service: Succeeded.

Apr 12 14:06:00 pve pvestatd[2649]: storage 'hpnas_nfs' is not online

Apr 12 14:06:10 pve pvedaemon[2729]: storage 'hpnas_nfs' is not online

Apr 12 14:06:11 pve pvedaemon[2728]: storage 'hpnas_nfs' is not online

root@pve:~#

Keep digging.

The error you are getting is based on the "check_connection()" response from the NFS plugin.

The NFS plugin does:

Code:

sub check_connection {
    my ($class, $storeid, $scfg) = @_;

    my $server = $scfg->{server};
    my $opts = $scfg->{options};

    my $cmd;
    if (defined($opts) && $opts =~ /vers=4.*/) {
        my $ip = PVE::JSONSchema::pve_verify_ip($server, 1);
        if (!defined($ip)) {
            $ip = PVE::Network::get_ip_from_hostname($server);
        }

        my $transport = PVE::JSONSchema::pve_verify_ipv4($ip, 1) ? 'tcp' : 'tcp6';

        # nfsv4 uses a pseudo-filesystem always beginning with /
        # no exports are listed
        $cmd = ['/usr/sbin/rpcinfo', '-T', $transport, $ip, 'nfs', '4'];
    } else {
        $cmd = ['/sbin/showmount', '--no-headers', '--exports', $server];
    }

    eval { run_command($cmd, timeout => 10, outfunc => sub {}, errfunc => sub {}) };
    if (my $err = $@) {
        return 0;
    }

    return 1;
}

Find out what happens to rpcinfo and showmount. These are not PVE specific; it's the generic TCP/NFS layer of Linux. If you have 3 nodes and a NAS, and only one node has an issue, the most logical explanation is that the problem is with that node.

Is the network even up at the time of the probes? The log says that corosync is not happy with its network connectivity. Is that the same link you are using for NFS?
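To see which of the two probes applies to a given storage, the branch in check_connection can be mirrored in a few lines of shell. This is only a sketch: `nfs_check_cmd` is a made-up helper, and it assumes the server is already given as an IP address, so the hostname lookup from the Perl code is left out. A storage with no `options` line containing `vers=4` falls through to the showmount branch.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the probe command check_connection would run
# for a given server and NFS mount-options string.
# Simplification (assumption): the server is already an IP address, so the
# hostname resolution done by the Perl code is skipped.
nfs_check_cmd() {
    local server=$1 opts=${2:-} transport=tcp
    if [[ $opts =~ vers=4 ]]; then
        # An address containing ':' is treated as IPv6 here.
        if [[ $server == *:* ]]; then
            transport=tcp6
        fi
        echo "/usr/sbin/rpcinfo -T $transport $server nfs 4"
    else
        echo "/sbin/showmount --no-headers --exports $server"
    fi
}
```

For example, `nfs_check_cmd 192.168.2.99` prints the showmount command line. Running that command by hand on the affected node shows the error text, which the plugin itself throws away because both `outfunc` and `errfunc` are empty subs.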

Thanks, I will check shortly. However, the migration network is on a different segment (192.168.9.x), while NFS is on 192.168.2.x. Your assumption is correct: only one node shows this anomaly, and the delay occurs only at boot. Once the storages are reconnected, everything works: migration, sync, VMs on NFS, backup to PBS.

This is the result of showmount for the NFS servers where the VMs reside:

root@pve:/var/log# showmount 192.168.2.99

Hosts on 192.168.2.99:

192.168.2.33

192.168.2.34

192.168.2.37

root@pve:/var/log# showmount 192.168.2.100

Hosts on 192.168.2.100:

192.168.2.33

192.168.2.34

192.168.2.37

root@pve:/var/log#

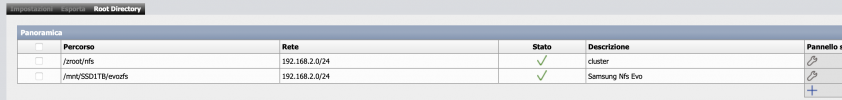

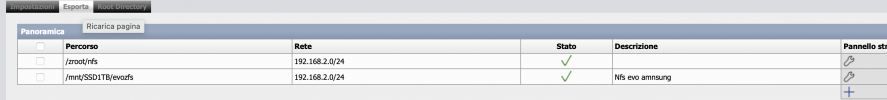

Storage cfg:

root@pve:/etc/pve# less storage.cfg

dir: local
        path /var/lib/vz
        content backup,images,iso,vztmpl
        prune-backups keep-last=3
        shared 0

zfspool: local-zfs
        pool rpool/data
        content rootdir,images
        nodes pve
        sparse 0

nfs: nasforum
        export /zroot/nfs
        path /mnt/pve/nasforum
        server 192.168.2.100
        content vztmpl,images,rootdir
        prune-backups keep-all=1

pbs: pbs
        datastore ZFS_STORAGE
        server 192.168.9.19
        content backup
        fingerprint d9:da:f3:38:76:1b:bd:8d:61:7c:fb:85:c3:b9:90:1f:00:9f:e8:cd:d9:43:4e:85:4e:2e:20:b8:dd:91:36:da
        prune-backups keep-all=1
        username root@pam

nfs: evo1tb
        export /mnt/SSD1TB/evozfs
        path /mnt/pve/evo1tb
        server 192.168.2.100
        content images,rootdir,vztmpl
        prune-backups keep-all=1

pbs: PBS_WD
        datastore ZFS_WD
        server 192.168.9.19
        content backup
        fingerprint d9:da:f3:38:76:1b:bd:8d:61:7c:fb:85:c3:b9:90:1f:00:9f:e8:cd:d9:43:4e:85:4e:2e:20:b8:dd:91:36:da
        prune-backups keep-all=1
        username root@pam

lvmthin: data_lvm
        thinpool data
        vgname pve
        content images,rootdir
        nodes pve3,pve4

nfs: hpnas_nfs
        export /zroot/nfs
        path /mnt/pve/hpnas_nfs
        server 192.168.2.99
        content vztmpl,images,rootdir
        prune-backups keep-all=1

zfspool: SSD1TB
        pool SSD1TB
        content images,rootdir
        mountpoint /SSD1TB
        nodes pve4

pbs: pbsusb500
        datastore ZFS_USB500
        server 192.168.9.19
        content backup
        fingerprint d9:da:f3:38:76:1b:bd:8d:61:7c:fb:85:c3:b9:90:1f:00:9f:e8:cd:d9:43:4e:85:4e:2e:20:b8:dd:91:36:da
        prune-backups keep-all=1
        username root@pam


That's not very interesting. You need to run showmount and rpcinfo (preferably with the same options as in the code snippet) directly on the node that has the issue, specifically right after boot and before the connection settles. If you can catch that window, the commands should fail, and maybe you'll get a helpful error message or some other clue.

Your troubleshooting window is that one minute; everything you run outside of it is useless. If there is nothing in the log, you have to keep experimenting.

Have you looked at the network cards? Is there a difference in model, firmware, or BIOS settings?

Good luck.
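One way to start comparing the network cards between nodes is to list which kernel driver each physical interface uses. The sketch below is an illustration only: `nic_drivers` is a made-up helper, and it assumes the usual Linux sysfs layout in which /sys/class/net/IFACE/device/driver is a symlink to the driver directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list physical network interfaces with their kernel driver.
# The sysfs root can be overridden (first argument) for testing.
nic_drivers() {
    local root=${1:-/sys/class/net} dev drv
    for dev in "$root"/*; do
        # Virtual interfaces (lo, bridges, bonds) have no device/driver link.
        [ -e "$dev/device/driver" ] || continue
        drv=$(basename "$(readlink -f "$dev/device/driver")")
        printf '%s %s\n' "$(basename "$dev")" "$drv"
    done
}
```

Running it on all three nodes and comparing the output shows quickly whether the problem node uses a different driver. For each interface it lists, `ethtool -i IFACE` adds the driver and firmware versions.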


So I understand that I have to restart the server and, as soon as it gives me the error, run the showmount command. Could you give me the correct syntax for the showmount command?

You don't need to wait for the error; start running commands as soon as you can log in.

Create a script with the relevant commands and run them in a loop.

Code:

#!/usr/bin/env bash
/usr/bin/ping -c4 {STORAGE_IP}
/usr/sbin/rpcinfo -T tcp {STORAGE_IP} nfs 4
/usr/sbin/rpcinfo -T tcp {STORAGE_IP} nfs 3
/sbin/showmount --no-headers --exports {STORAGE_IP}
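A possible way to run these commands in a loop with a timestamp and exit status on every probe is sketched below. The helper names `probe` and `probe_all` are made up for this example. The 10-second limit mirrors the timeout used by check_connection, and the single ping per round (`-c1 -W1` instead of `-c4`) is an assumption chosen to keep each round short.

```shell
#!/usr/bin/env bash
# Sketch: timestamped NFS probes, meant to be started right after boot.

# Run one command with a 10 s limit and print a timestamped line with its
# exit status, followed by whatever the command printed (stdout and stderr).
probe() {
    local out rc
    out=$(timeout 10 "$@" 2>&1)
    rc=$?
    printf '%s rc=%d cmd=%s\n' "$(date '+%F %T')" "$rc" "$*"
    if [ -n "$out" ]; then
        printf '%s\n' "$out" | sed 's/^/    /'
    fi
    return 0
}

# Run the four probes from the script above against one storage address.
probe_all() {
    local ip=$1
    probe /usr/bin/ping -c1 -W1 "$ip"
    probe /usr/sbin/rpcinfo -T tcp "$ip" nfs 4
    probe /usr/sbin/rpcinfo -T tcp "$ip" nfs 3
    probe /sbin/showmount --no-headers --exports "$ip"
}
```

A loop such as `while true; do probe_all 192.168.2.99; probe_all 192.168.2.100; sleep 2; done | tee -a checknfs.log` then records when each probe starts succeeding, which can be lined up against the "is not online" messages in the journal.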

Thank you for your kindness. I will try it as soon as possible and keep you updated. For now I have run the script on a cluster that I use for testing, and these are the results:

Code:

root@pvetest:~# ./checknfs.sh

PING 192.168.3.42 (192.168.3.42) 56(84) bytes of data.

64 bytes from 192.168.3.42: icmp_seq=1 ttl=64 time=0.416 ms

64 bytes from 192.168.3.42: icmp_seq=2 ttl=64 time=0.177 ms

64 bytes from 192.168.3.42: icmp_seq=3 ttl=64 time=0.336 ms

64 bytes from 192.168.3.42: icmp_seq=4 ttl=64 time=0.364 ms

--- 192.168.3.42 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3035ms

rtt min/avg/max/mdev = 0.177/0.323/0.416/0.089 ms

program 100003 version 4 ready and waiting

program 100003 version 3 ready and waiting

/mnt/pub 192.168.3.0

I hope to try it on the production cluster tonight. Thank you.

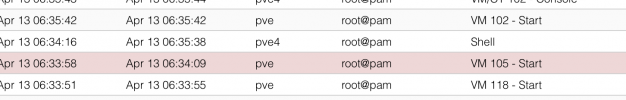

Hi, I did what you told me. Here are the results:

Code:

Debian GNU/Linux comes with ABSOLUTELY NO WARRANTY, to the extent

permitted by applicable law.

Last login: Wed Apr 13 06:34:16 CEST 2022 from 192.168.3.33 on pts/1

root@pve:~# ./checknfs.sh

PING 192.168.2.100 (192.168.2.100) 56(84) bytes of data.

64 bytes from 192.168.2.100: icmp_seq=1 ttl=64 time=0.085 ms

64 bytes from 192.168.2.100: icmp_seq=2 ttl=64 time=0.178 ms

64 bytes from 192.168.2.100: icmp_seq=3 ttl=64 time=0.184 ms

64 bytes from 192.168.2.100: icmp_seq=4 ttl=64 time=0.185 ms

--- 192.168.2.100 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3047ms

rtt min/avg/max/mdev = 0.085/0.158/0.185/0.042 ms

program 100003 version 4 ready and waiting

program 100003 version 3 ready and waiting

rpc mount export: RPC: Timed out

PING 192.168.2.99 (192.168.2.99) 56(84) bytes of data.

64 bytes from 192.168.2.99: icmp_seq=1 ttl=64 time=0.630 ms

64 bytes from 192.168.2.99: icmp_seq=2 ttl=64 time=0.647 ms

64 bytes from 192.168.2.99: icmp_seq=3 ttl=64 time=0.699 ms

64 bytes from 192.168.2.99: icmp_seq=4 ttl=64 time=0.759 ms

--- 192.168.2.99 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3039ms

rtt min/avg/max/mdev = 0.630/0.683/0.759/0.050 ms

program 100003 version 4 ready and waiting

program 100003 version 3 ready and waiting

/zroot/nfs 192.168.2.0

root@pve:~#

VM 105 does not start because it resides on NFS.

Here are the NAS parameters:

The output you provided does not give any timing reference or information about the state of the storage. And based on the output it seems that you have already missed the window.

If this is a production environment then you should start utilizing your vendor support channels, i.e. NAS and/or PVE.

If this is a home/lab environment you can either continue trying to catch the unavailability window or try to research more and implement one of the many workarounds already discussed in the forum:

https://forum.proxmox.com/threads/start-at-boot-and-shared-storage.26340/

https://forum.proxmox.com/threads/startup-delay-for-first-vm-with-remote-storage.60348/

etc

The red flag for me is that you only have the problem on one out of three nodes. If it was my environment, I would concentrate on finding differences between the nodes.

Good luck.


Thank you for your courtesy and the technical information. I hope to find something, or at least a clue, that puts me on the right track to solving the problem.

I came across this resource. I think it would be helpful for you to study, as it may address your issue:

https://wiki.archlinux.org/title/NFS#Mount_using_.2Fetc.2Ffstab_with_systemd


Thank you. I will read the docs in depth, but from a quick read I understand that you would mount NFS manually. I find what you pointed me to earlier interesting, in particular changing the sleep delay declared in systemd. Maybe it is less invasive and only imposes a small delay. What do you think? In addition, other users have had this problem. Thank you very much for now.

You can, but you shouldn't have to. If you do go that route, you will need to do this across the entire cluster. It's your choice whether to use this approach as a band-aid for the underlying issue.

Ideally you will find what causes the delay (NIC, firmware, cable, BIOS, switch port, some extra software or a setting you configured and forgot about). If you can't, and it bothers you enough, apply some sort of workaround.
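As one possible shape for such a workaround, a small wait loop can hold back whatever depends on the NFS storage until a probe succeeds. This is a sketch under assumptions, not a fix proposed in the thread: `wait_until` is a made-up helper, and where it would be hooked in (for example before guests are started at boot) is deliberately left open.

```shell
#!/usr/bin/env bash
# Hypothetical helper: retry a command until it succeeds.
# Usage: wait_until MAX_TRIES DELAY_SECONDS COMMAND [ARGS...]
# Returns 0 as soon as COMMAND succeeds, 1 if every try fails.
wait_until() {
    local tries=$1 delay=$2 i
    shift 2
    for ((i = 1; i <= tries; i++)); do
        if "$@" >/dev/null 2>&1; then
            return 0
        fi
        sleep "$delay"
    done
    return 1
}
```

For instance, `wait_until 30 2 /sbin/showmount --no-headers --exports 192.168.2.99` retries the same probe the NFS plugin uses for roughly a minute (30 tries with a 2-second pause, plus the time each attempt takes) before giving up.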

I don't know, but technically I suspect the startup order of the services. Let me explain:

It's as if the NFS storage service is not started in the correct order. I could try sharing a CIFS/Samba storage from another server to see whether the connection times also vary with that service or remain the same.