I am running a dedicated 4-node Ceph cluster with 10 Gbps networks for the Ceph cluster and Ceph public networks, over bonded interfaces:

proxmox-ve: 6.1-2 (running kernel: 5.3.13-1-pve)

pve-manager: 6.1-5 (running version: 6.1-5/9bf06119)

pve-kernel-5.3: 6.1-1

pve-kernel-helper: 6.1-1

pve-kernel-5.3.13-1-pve: 5.3.13-1

pve-kernel-5.3.10-1-pve: 5.3.10-1

ceph: 14.2.6-pve1

ceph-fuse: 14.2.6-pve1

corosync: 3.0.2-pve4

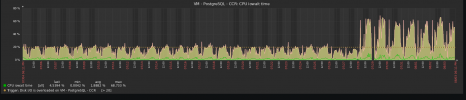

Each server has 12 x Intel(R) Xeon(R) CPU E5-2609 v3 @ 1.90GHz (2 sockets, 6 cores per socket) and 48 GB of RAM, of which 18-20 GB is currently in use. I have 24 OSDs in total: 8 SSDs in an SSD pool and 16 x 1000K spinning drives in an HDD pool. Currently I have 39 VMs on the spinners and 18 VMs on the SSDs. What worries me is that, on average, I/O delay is about twice the CPU usage: at the moment I usually see 2.5% CPU usage and 5% I/O delay. When I move disks to Ceph, CPU usage jumps to 5% and I/O delay to 10%.

The I/O delay spike in the middle of the graph is caused by the disk move to Ceph storage, but, as I said, on average I/O delay is about twice the CPU usage.
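To make the "CPU usage vs. I/O delay" comparison concrete, here is a minimal sketch of how both percentages can be derived from two samples of the aggregate `cpu` line in `/proc/stat`. It assumes that the Proxmox "IO delay" graph corresponds to the kernel's `iowait` counter; that mapping, and the helper names `parse_cpu_line`, `cpu_and_iowait_percent` and `read_cpu_line`, are illustrative assumptions, not Proxmox code. Note that `iowait` is time the CPU sat idle while I/O was outstanding, so it is computed separately from busy time.

```python
from typing import Dict, Tuple

# First eight fields of the aggregate "cpu" line in /proc/stat (see proc(5)).
# guest/guest_nice are already included in user/nice, so they are skipped.
FIELDS = ["user", "nice", "system", "idle", "iowait", "irq", "softirq", "steal"]


def parse_cpu_line(line: str) -> Dict[str, int]:
    """Parse the aggregate 'cpu' line into a dict of jiffy counters."""
    parts = line.split()
    if not parts or parts[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line")
    values = [int(v) for v in parts[1:1 + len(FIELDS)]]
    return dict(zip(FIELDS, values))


def cpu_and_iowait_percent(before: str, after: str) -> Tuple[float, float]:
    """Return (busy %, iowait %) over the interval between two samples."""
    a = parse_cpu_line(before)
    b = parse_cpu_line(after)
    delta = {k: b[k] - a[k] for k in FIELDS}
    total = sum(delta.values())
    if total == 0:
        return 0.0, 0.0
    # Busy time excludes both plain idle and idle-while-waiting-for-I/O.
    busy = total - delta["idle"] - delta["iowait"]
    return 100.0 * busy / total, 100.0 * delta["iowait"] / total


def read_cpu_line(path: str = "/proc/stat") -> str:
    """Read the first line of /proc/stat (the aggregate 'cpu' line) on Linux."""
    with open(path) as f:
        return f.readline()
```

For example, with deltas of 20 user, 5 system, 925 idle and 50 iowait jiffies, this yields 2.5% busy and 5.0% iowait, the same 1:2 ratio described above. On a live node you could call `read_cpu_line()`, wait a few seconds, call it again, and pass both lines to `cpu_and_iowait_percent`.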

Should I be concerned, or is this normal?

Thank you