Hello,

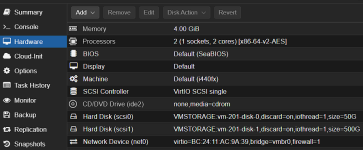

I have a Debian 13 VM on Proxmox 9 that has 2 hard drives attached from the same ZFS pool. Sometimes on boot/reboot the second hard drive doesn't mount. Not sure why. Rebooting again generally resolves the issue, and both drives mount.

Boot where the second drive is missing (no /data, and root shows up as /dev/sdb1):

    df -h
    Filesystem      Size  Used Avail Use% Mounted on
    udev            1.9G     0  1.9G   0% /dev
    tmpfs           393M  4.4M  388M   2% /run
    /dev/sdb1        47G  4.6G   40G  11% /
    tmpfs           2.0G     0  2.0G   0% /dev/shm
    tmpfs           5.0M     0  5.0M   0% /run/lock
    tmpfs           1.0M     0  1.0M   0% /run/credentials/systemd-journald.service
    tmpfs           2.0G     0  2.0G   0% /tmp
    tmpfs           1.0M     0  1.0M   0% /run/credentials/getty@tty1.service
    tmpfs           393M  8.0K  393M   1% /run/user/1000

After a reboot, both drives are mounted (root is now /dev/sda1):

    df -h
    Filesystem      Size  Used Avail Use% Mounted on
    udev            1.9G     0  1.9G   0% /dev
    tmpfs           393M  4.4M  388M   2% /run
    /dev/sda1        47G  4.6G   40G  11% /
    tmpfs           2.0G     0  2.0G   0% /dev/shm
    tmpfs           5.0M     0  5.0M   0% /run/lock
    tmpfs           1.0M     0  1.0M   0% /run/credentials/systemd-journald.service
    tmpfs           2.0G     0  2.0G   0% /tmp
    /dev/sdb1       492G  2.1M  467G   1% /data
    tmpfs           1.0M     0  1.0M   0% /run/credentials/getty@tty1.service
    tmpfs           393M  8.0K  393M   1% /run/user/1000
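
Comparing the two listings, the device names do not look stable between boots: the 47G root filesystem is /dev/sdb1 on the bad boot and /dev/sda1 on the good one. Here is a minimal Python sketch that makes the comparison explicit. The helper name `mounts_by_point` is just something I made up for illustration, the input is trimmed to the relevant rows of the output above, and it assumes mount points contain no spaces.

```python
def mounts_by_point(df_output: str) -> dict[str, str]:
    """Map each mount point to the device shown in `df -h` output."""
    mounts = {}
    for line in df_output.strip().splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) >= 6:
            # Column 1 is the device, column 6 is the mount point.
            mounts[fields[5]] = fields[0]
    return mounts


# Rows copied from the two `df -h` runs (tmpfs/udev rows left out).
BAD_BOOT = """\
Filesystem Size Used Avail Use% Mounted on
/dev/sdb1 47G 4.6G 40G 11% /
"""

GOOD_BOOT = """\
Filesystem Size Used Avail Use% Mounted on
/dev/sda1 47G 4.6G 40G 11% /
/dev/sdb1 492G 2.1M 467G 1% /data
"""

bad = mounts_by_point(BAD_BOOT)
good = mounts_by_point(GOOD_BOOT)

# Print, per mount point, which device backed it on each boot.
for point in sorted(set(bad) | set(good)):
    print(
        f"{point}: bad boot={bad.get(point, 'not mounted')}, "
        f"good boot={good.get(point, 'not mounted')}"
    )
```

So on the bad boot the root disk has taken the sdb name and /data is not mounted at all. That makes me suspect the data disk is being referenced by its /dev/sdX name somewhere, but I have not confirmed how /etc/fstab refers to it.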

Suggestions?

Regards,

James
