Hi all,

TL;DR:

I created a thin volume and a mount point, but forgot to mount it before creating a datastore. The result was not what I expected. Not finding LVM thin explicitly in the PBS docs, I asked whether a datastore on thinly provisioned LVM would work. Conclusion: yes it does, just mount it before creating the datastore.

---------------------------------------------------------------------

Original message

Is datastore on a thin volume supported? Creating the datastore runs well enough, but the result is not what I expected.

These are the steps I took to create the thin volume and add a datastore on it:

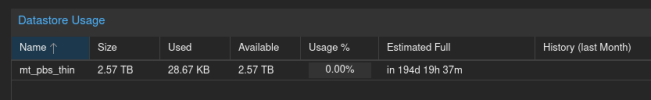

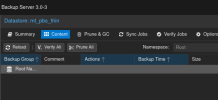

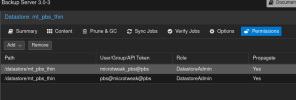

It took a few minutes to initialize the chunkstore, then it showed the datastore in the GUI:

Querying via CLI, I got:

The backing thin pool looks like :

The line for `mt_pbs` shows 2.50t, which is as expected.

Is it correct to assume that the chunk store takes ~6GB for the whole 2.5TB, with the reported stats in PBS growing with usage?

To add insult to injury, `lvs` above gives it away: this server shares PBS and PVE and both of them share storage in this thin volume. I think that is content for another thread: it also does not really behave the way I'd expected. In my defense: it is on the one server, so I thought 'shared storage' is not an item, and the reason for sharing the thin volume is so that I can use a single dm_cache on SSD for the (spinning) thin pool LV (.. is the idea, anyway..)

Thank you for reading!

TL;DR:

I created a thin volume and a mount point, but forgot to mount the volume before creating a datastore, so the result was not what I expected. Not finding LVM thin mentioned explicitly in the PBS docs, I asked whether a datastore on thinly provisioned LVM would work. Conclusion: yes, it does; just mount the volume before creating the datastore.

---------------------------------------------------------------------

Original message

Is a datastore on a thin volume supported? Creating the datastore runs well enough, but the result is not what I expected.

These are the steps I took to create the thin volume and add a datastore on it:

    # lvcreate -V 2.5T -T mt_alledingen/mt_alledingen_pool -n mt_pbs
      Logical volume "mt_pbs" created.
    # mkfs.ext4 -L mt_pbs_thin
    # echo '/dev/mapper/mt_alledingen/mt_pbs /home/data/backup/mt_pbs ext4 defaults,noatime 0 0' >> /etc/fstab
    # proxmox-backup-manager datastore create mt_pbs_thin /home/data/backup/mt_pbs
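The sequence above adds an fstab entry but never mounts the volume, which is the mistake the TL;DR describes. As a minimal sketch of a guard against that, the wrapper below refuses to create a datastore unless the target directory is a mount point. The function name `safe_datastore_create` and the `PBS_CMD` override are hypothetical helpers for illustration, not part of PBS; `mountpoint` comes from util-linux.

```shell
#!/bin/sh
# Hypothetical wrapper: only create the datastore if the path is a mount point.
safe_datastore_create() {
    name=$1
    path=$2
    # mountpoint -q exits non-zero when the directory is not a mount point.
    if ! mountpoint -q "$path"; then
        echo "refusing: $path is not a mount point (forgot 'mount $path'?)" >&2
        return 1
    fi
    # PBS_CMD is an illustrative override so a dry run can substitute `echo`.
    ${PBS_CMD:-proxmox-backup-manager} datastore create "$name" "$path"
}

# Intended usage on the PBS host, after the fstab entry exists:
#   mount /home/data/backup/mt_pbs
#   safe_datastore_create mt_pbs_thin /home/data/backup/mt_pbs
```

Setting `PBS_CMD=echo` prints the command that would run instead of executing it, which makes it easy to try the check without touching a real datastore.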

It took a few minutes to initialize the chunk store, then the datastore showed up in the GUI.

Querying via CLI, I got:

    # proxmox-backup-manager datastore list
    ┌─────────────┬──────────────────────────┬─────────┐
    │ name        │ path                     │ comment │
    ╞═════════════╪══════════════════════════╪═════════╡
    │ mt_pbs_thin │ /home/data/backup/mt_pbs │         │
    └─────────────┴──────────────────────────┴─────────┘

    # proxmox-backup-manager disk list
    ┌──────┬────────────┬─────┬───────────┬───────────────┬───────────────────────────┬─────────┬─────────┐
    │ name │ used       │ gpt │ disk-type │          size │ model                     │ wearout │ status  │
    ╞══════╪════════════╪═════╪═══════════╪═══════════════╪═══════════════════════════╪═════════╪═════════╡
    │ sda  │ lvm        │   1 │ hdd       │ 3000592982016 │ Hitachi_HDS5C3030BLE630   │       - │ passed  │
    ├──────┼────────────┼─────┼───────────┼───────────────┼───────────────────────────┼─────────┼─────────┤
    │ sdb  │ lvm        │   0 │ ssd       │  500107862016 │ Samsung_SSD_860_EVO_500GB │  1.00 % │ passed  │
    ├──────┼────────────┼─────┼───────────┼───────────────┼───────────────────────────┼─────────┼─────────┤
    │ sdc  │ lvm        │   1 │ hdd       │ 2000398934016 │ WDC_WD20EFRX-68AX9N0      │       - │ passed  │
    ├──────┼────────────┼─────┼───────────┼───────────────┼───────────────────────────┼─────────┼─────────┤
    │ sdd  │ lvm        │   1 │ hdd       │ 2000398934016 │ WDC_WD20EFRX-68AX9N0      │       - │ passed  │
    ├──────┼────────────┼─────┼───────────┼───────────────┼───────────────────────────┼─────────┼─────────┤
    │ sde  │ lvm        │   1 │ hdd       │   31914983424 │ Storage_Device            │       - │ unknown │
    ├──────┼────────────┼─────┼───────────┼───────────────┼───────────────────────────┼─────────┼─────────┤
    │ sdf  │ lvm        │   1 │ hdd       │   32105299968 │ Internal_SD-CARD          │       - │ unknown │
    ├──────┼────────────┼─────┼───────────┼───────────────┼───────────────────────────┼─────────┼─────────┤
    │ sdg  │ partitions │   0 │ hdd       │     268435456 │ LUN_01_Media_0            │       - │ unknown │
    ├──────┼────────────┼─────┼───────────┼───────────────┼───────────────────────────┼─────────┼─────────┤
    │ sdh  │ lvm        │   1 │ ssd       │  500107862016 │ Samsung_SSD_860_EVO_500GB │  0.00 % │ passed  │
    └──────┴────────────┴─────┴───────────┴───────────────┴───────────────────────────┴─────────┴─────────┘
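Neither listing shows which filesystem actually holds the datastore directory. One way to check that after the fact, sketched here with plain POSIX `df` and `awk`, is to ask which mount point a path resolves to. The helper name `backing_mount` is made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: print the mount point of the filesystem that holds a path.
backing_mount() {
    # df -P prints a header plus one POSIX-format line; field 6 is "Mounted on".
    # Caveat: this simple parse assumes the mount point contains no spaces.
    df -P "$1" | awk 'NR == 2 { print $6 }'
}

# Intended usage on the PBS host:
#   backing_mount /home/data/backup/mt_pbs
# If this prints a parent directory rather than /home/data/backup/mt_pbs itself,
# the thin volume is not mounted there and the datastore lives on the parent
# filesystem.
```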

The backing thin pool looks like this:

    # lvs mt_alledingen
      LV                 VG            Attr       LSize Pool               Origin Data%  Meta%  Move Log Cpy%Sync Convert
      mt_alledingen_pool mt_alledingen twi-aot--- 5.07t                           0.07   8.36
      mt_pbs             mt_alledingen Vwi-a-t--- 2.50t mt_alledingen_pool        0.14
      vm-102-disk-0      mt_alledingen Vwi-aot--- 8.00g mt_alledingen_pool        2.98

The line for `mt_pbs` shows 2.50t, which is as expected.

Is it correct to assume that the chunk store takes ~6 GB for the whole 2.5 TB, with the stats reported in PBS growing with usage?
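For a rough cross-check, the `Data%` column of `lvs` can be turned into an allocated size. The sketch below assumes that `lvs` reports sizes in binary units (so `2.50t` is 2.5 TiB) and that the rounded values it prints are accurate enough for an estimate. The function name `thin_allocated_gib` is made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: estimate the allocated GiB of a thin LV from lvs output.
#   $1 = LSize in TiB, $2 = Data% as printed by lvs
thin_allocated_gib() {
    awk -v size_tib="$1" -v pct="$2" \
        'BEGIN { printf "%.2f\n", size_tib * 1024 * pct / 100 }'
}

thin_allocated_gib 2.50 0.14   # mt_pbs: about 3.58 GiB allocated
thin_allocated_gib 5.07 0.07   # mt_alledingen_pool: about 3.63 GiB allocated
```

These figures only show what the pool has allocated so far, based on rounded percentages. They are not what PBS itself reports, so they need not match the ~6 GB figure exactly.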

To add insult to injury, the `lvs` output above gives it away: this server runs both PBS and PVE, and both use storage in the same thin pool. That is probably content for another thread, since it also does not behave quite the way I expected. In my defense: everything is on one server, so I assumed 'shared storage' would not be an issue. The reason for sharing the thin pool is that I can then use a single dm_cache on SSD for the (spinning) thin pool LV. That is the idea, anyway.

Thank you for reading!
