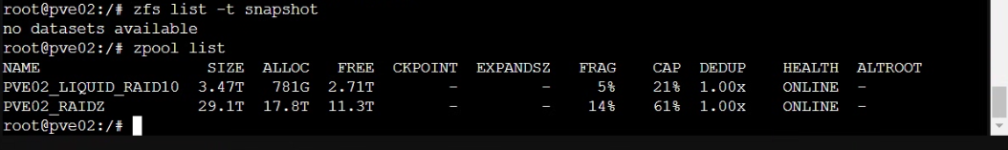

How is it possible to have different remaining space available for the same filesystem? @aaron I've never seen this before... what does it mean?

You mean the "AVAIL" column? This happens when some disk images, or in ZFS terminology ZVOL datasets, have a reservation or refreservation set. If the "thin provision" checkbox is not set in the Proxmox VE storage config, the datasets are created "thick", which for ZFS means they get a reservation.
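To see which ZVOLs are thick, `zfs get` can list both properties for every volume in the pool. The sketch below is a hypothetical filter (`thick_zvols` is not a ZFS or Proxmox VE tool). It assumes the tab-separated output of `zfs get -H -p -o name,property,value` and prints each dataset where either property holds something other than `0`, `none` or `-`.

```shell
# Hypothetical filter: reads `zfs get -H -p -o name,property,value` output
# (tab-separated: name, property, value) on stdin and prints every dataset
# with a reservation or refreservation set, i.e. a "thick" ZVOL.
# Values of 0, none or - are treated as "no reservation".
# Each dataset is printed only once, even if both properties are set.
thick_zvols() {
    awk -v 'FS=\t' '
        ($2 == "reservation" || $2 == "refreservation") && $3 != "0" && $3 != "none" && $3 != "-" {
            if (!seen[$1]++) print $1
        }
    '
}
```

For example: `zfs get -H -p -o name,property,value -t volume -r reservation,refreservation {zfspool} | thick_zvols`. Disks created later on that storage will be thin once the "thin provision" option (`sparse` in `/etc/pve/storage.cfg`) is enabled, e.g. with `pvesm set {storage} --sparse 1`.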

To convert a thick-provisioned ZVOL into a thin one, remove its reservations:

```shell
zfs set reservation=none refreservation=none {zfspool}/vm-X-disk-Y
```
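If several disk images need converting, the same command can be generated for each of them. The sketch below is a hypothetical dry-run helper (`thin_commands` is an illustrative name): it only prints the `zfs set` commands so they can be reviewed before anything is changed, and it assumes one ZVOL name per line on stdin.

```shell
# Hypothetical dry-run helper: reads one ZVOL name per line on stdin and
# prints the `zfs set` command that removes its reservations.
# Nothing is executed; review the output first, then pipe it to `sh`.
thin_commands() {
    while IFS= read -r vol; do
        [ -n "$vol" ] || continue   # skip blank lines
        # Quote the name, since ZFS dataset names may contain spaces.
        printf "zfs set reservation=none refreservation=none '%s'\n" "$vol"
    done
}
```

For example: `zfs list -H -o name -t volume -r {zfspool} | thin_commands` lists the commands for every ZVOL in the pool; appending `| sh` to that pipeline runs them once the list looks right.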

@JSChasle It seems that you are hitting the situation explained in the admin guide (https://pve.proxmox.com/pve-docs/pve-admin-guide.html#sysadmin_zfs_raid_considerations).

The disk image has a volblocksize of 8k, and in a raidz1, one parity block is written for each 8k block. I assume that the pool has an ashift of 12, which means a physical block size of 4k (2^12 = 4096). Therefore, you get one 4k parity block (the smallest possible physical block) per 8k of disk image data.

The disk image is 10T and currently uses 16T, which is roughly 1.5x the 10T once you allow some leeway for additional metadata overhead and rounding.
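The arithmetic above can be reproduced with a small shell function. It is a sketch of the simplified model described in this post (one parity sector per volblocksize block in a raidz1), not of how ZFS accounts space exactly; the function name `raidz1_overhead_pct` is hypothetical.

```shell
# Hypothetical helper for the simplified model above: each volblocksize block
# is split into physical sectors of 2^ashift bytes, and raidz1 adds one parity
# sector per block. Prints space used per unit of data as a percentage
# (150 means 1.5x). Ignores metadata and any other allocation rounding.
raidz1_overhead_pct() {
    volblocksize=$1                       # bytes, e.g. 8192
    ashift=$2                             # e.g. 12 -> 4096-byte sectors
    sector=$((1 << ashift))
    data_sectors=$(( (volblocksize + sector - 1) / sector ))   # round up
    parity_sectors=1
    echo $(( (data_sectors + parity_sectors) * 100 / data_sectors ))
}
```

With the numbers from this thread, `raidz1_overhead_pct 8192 12` prints 150, so the 10T image is expected to use about 10T × 1.5 = 15T; the remaining ~1T up to the observed 16T is the metadata overhead and rounding. With a 16k volblocksize, the same model gives 125, i.e. 1.25x.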

The situation has improved with recent ZFS versions, where the default volblocksize is 16k, so the impact of the additional parity blocks is a lot lower. If you are still on an older Proxmox VE version, you can override the volblocksize in the storage config. This only affects newly created disk images, though; you cannot change it for an existing one. Therefore, after changing the volblocksize, moving the disk image from this storage to another one and then back should recreate it with the larger volblocksize.
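The procedure above can be sketched as a sequence of commands. The helper below is hypothetical and only prints the commands rather than running them. It assumes the zfspool storage option is named `blocksize`, and that your release provides `qm disk move` (older releases call it `qm move_disk`). It also assumes a second storage is available as a temporary target.

```shell
# Hypothetical dry-run helper: prints the commands to raise the volblocksize
# on a ZFS storage and recreate one VM disk by moving it away and back.
# Arguments: ZFS storage ID, VM ID, disk (e.g. scsi0), temporary storage ID.
# Nothing is executed.
recreate_disk_commands() {
    storage=$1 vmid=$2 disk=$3 tmp_storage=$4
    # Affects only disk images created on this storage from now on.
    echo "pvesm set $storage --blocksize 16k"
    # --delete 1 removes the source copy instead of keeping it as an unused disk.
    echo "qm disk move $vmid $disk $tmp_storage --delete 1"
    echo "qm disk move $vmid $disk $storage --delete 1"
}
```

For example, `recreate_disk_commands local-zfs 100 scsi0 local-lvm` prints the three commands for the `scsi0` disk of VM 100, using `local-lvm` as the temporary storage (all example names). Afterwards, `zfs get volblocksize` on the newly created ZVOL should report 16K.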