Hi,

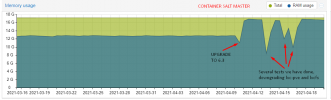

We have detected a strange (or at least poorly understood) behavior in the memory usage of at least two containers, and we believe the issue is a general one.

After a CT restart, memory usage grows steadily until, after a couple of days, it reaches around 96-99% of the memory assigned to the CT.

What's strange is that, according to the usual memory reporting tools, the sum of the resident memory (RSS) of all processes comes nowhere near the usage being reported.

The buf/cache and shared figures do not account for much of it either. So far we have not seen the OOM killer take any action.
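To put numbers on the RSS comparison above, a minimal sketch like the following can be run inside the CT. It is not necessarily what we used; it assumes cgroup v1 paths (the PVE 6.x default) with a cgroup v2 fallback, and that `ps` from procps is available. Summing RSS counts shared pages once per process, so the RSS figure is only a rough one.

```shell
#!/bin/sh
# Minimal sketch: compare the CT's cgroup memory usage with the summed RSS of
# all processes visible inside the CT.
# Assumption: cgroup v1 layout (PVE 6.x default); falls back to cgroup v2 paths.

# Print the cgroup's current memory usage in bytes (0 if no file is readable).
cgroup_usage_bytes() {
    if [ -r /sys/fs/cgroup/memory/memory.usage_in_bytes ]; then
        cat /sys/fs/cgroup/memory/memory.usage_in_bytes      # cgroup v1
    elif [ -r /sys/fs/cgroup/memory.current ]; then
        cat /sys/fs/cgroup/memory.current                    # cgroup v2
    else
        echo 0
    fi
}

# Read RSS values in KiB (one per line, as printed by `ps -eo rss=`) on stdin
# and print their sum in bytes. Shared pages are counted once per process,
# so this is only a rough figure.
sum_rss_bytes() {
    awk '{ s += $1 } END { printf "%.0f\n", s * 1024 }'
}

usage=$(cgroup_usage_bytes)
rss=$(ps -eo rss= 2>/dev/null | sum_rss_bytes)
echo "cgroup usage: $((usage / 1048576)) MiB"
echo "summed RSS:   $((rss / 1048576)) MiB"
```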

Interestingly enough, when we drop all the caches by running in the host,

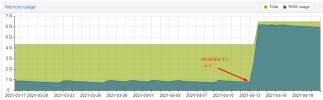

the available memory in the CT becomes readily available after a few seconds as you can see in the image below:

Initially we suspected the 6.2 -> 6.3 upgrade we performed a few days ago, but after booting one of the PVE nodes with the 6.2 kernel we still observe the same behavior, so we believe this has been happening since at least 6.2.

Could it be that cache memory charged to a particular cgroup is not reported in that cgroup's memory stats, even though it is still attributed to it?

If so, how can anyone get meaningful information from the memory reporting tools inside a CT?
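To see how much of the cgroup's usage is page cache versus anonymous memory from inside a CT, a minimal sketch along these lines reads the container's `memory.stat`. It assumes cgroup v1 field names (`total_cache`, `total_rss`, `total_inactive_file`) with cgroup v2 names (`file`, `anon`, `inactive_file`) as a fallback. The "usage minus inactive file" figure is the working-set approximation used by some container monitoring tools such as cAdvisor, not something PVE itself reports.

```shell
#!/bin/sh
# Minimal sketch: break a CT's cgroup memory usage down into page cache,
# anonymous memory and an approximate "working set".
# Assumption: cgroup v1 field names (PVE 6.x default); cgroup v2 names are
# used as a fallback.

# Print the value of FIELD from memory.stat-formatted text on stdin (0 if absent).
stat_field() {
    awk -v k="$1" '$1 == k { v = $2; found = 1 } END { print (found ? v : 0) }'
}

# Usage minus inactive file cache, clamped at 0: the working-set approximation
# used by some container monitoring tools (e.g. cAdvisor).
working_set() {
    ws=$(( $1 - $2 ))
    if [ "$ws" -lt 0 ]; then ws=0; fi
    echo "$ws"
}

report_breakdown() {
    if [ -r /sys/fs/cgroup/memory/memory.stat ]; then
        # cgroup v1: total_* fields also include descendant cgroups
        stat=/sys/fs/cgroup/memory/memory.stat
        usage=$(cat /sys/fs/cgroup/memory/memory.usage_in_bytes 2>/dev/null)
        cache_key=total_cache anon_key=total_rss inactive_key=total_inactive_file
    elif [ -r /sys/fs/cgroup/memory.stat ]; then
        # cgroup v2 equivalents
        stat=/sys/fs/cgroup/memory.stat
        usage=$(cat /sys/fs/cgroup/memory.current 2>/dev/null)
        cache_key=file anon_key=anon inactive_key=inactive_file
    else
        return 1
    fi
    usage=${usage:-0}
    cache=$(stat_field "$cache_key" < "$stat")
    anon=$(stat_field "$anon_key" < "$stat")
    inactive=$(stat_field "$inactive_key" < "$stat")
    ws_bytes=$(working_set "$usage" "$inactive")

    echo "usage:       $((usage / 1048576)) MiB"
    echo "page cache:  $((cache / 1048576)) MiB"
    echo "anonymous:   $((anon / 1048576)) MiB"
    echo "working set: $((ws_bytes / 1048576)) MiB"
}

report_breakdown || echo "no readable memory cgroup stats found" >&2
```

Comparing `usage` with these fields is one way to probe the hypothesis above; note that the sketch only shows what `memory.stat` exposes, so any charge not broken out there would appear only as a gap.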

Thanks,
