I read the Full Mesh Network for Ceph Server article on the Proxmox wiki: https://pve.proxmox.com/wiki/Full_Mesh_Network_for_Ceph_Server?ref=x14-kod-infrastruktur-ab

While I did get three nodes to ping each other over DAC, I have 4 PVE nodes that I would like to use for Ceph with 10G SFP+ DAC connections.

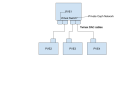

With the SDN functionality in 8.1, would it be possible to put a couple of cheap dual-port 10G cards (HP 530SFP+ cards are $15-20 each) in the most powerful workstation and turn their ports into a virtual SFP+ switch? The other 3 nodes would each connect to it with a single DAC cable, and the virtual switch would forward traffic out of the correct interface for the destination node. Using Open vSwitch, etc. would be fine; I just don't know if the idea is even feasible.
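
For context on why 4 nodes is the sticking point, here is a back-of-the-envelope count of DAC cables and SFP+ ports for a full mesh versus the hub-and-spoke layout described above. It is only a sketch, assuming every link is a direct point-to-point DAC cable and that the hub node's own Ceph traffic uses the same ports; the function names are hypothetical and just for illustration.

```python
from math import comb


def full_mesh(nodes: int) -> tuple[int, int]:
    """Return (DAC cables, SFP+ ports needed on every node) for a full mesh."""
    # Every pair of nodes gets its own direct cable.
    cables = comb(nodes, 2)
    # Each node links directly to every other node.
    ports_per_node = nodes - 1
    return cables, ports_per_node


def hub_and_spoke(nodes: int) -> tuple[int, int, int]:
    """Return (DAC cables, ports on the hub, ports per spoke) when one node acts as the switch."""
    spokes = nodes - 1
    # One cable from each spoke node into the hub node.
    return spokes, spokes, 1


# Assumption: two dual-port cards in the hub workstation, as proposed above.
HUB_PORTS_AVAILABLE = 2 * 2

for n in (3, 4):
    cables, per_node = full_mesh(n)
    print(f"{n} nodes, full mesh: {cables} cables, {per_node} ports on every node")
    cables, hub_ports, spoke_ports = hub_and_spoke(n)
    fits = hub_ports <= HUB_PORTS_AVAILABLE
    print(f"{n} nodes, hub-and-spoke: {cables} cables, {hub_ports} hub ports "
          f"(fits in {HUB_PORTS_AVAILABLE}: {fits}), {spoke_ports} per spoke")
```

With 3 nodes a full mesh needs only 2 ports per node, which a single dual-port card covers. With 4 nodes every node would need 3 ports, while the hub-and-spoke layout needs 3 ports on the hub and just 1 on each of the other nodes.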

If there are any cheap 10G SFP+ switches for a home lab (i.e., quiet, small, and around $100 used), I would use one, but I haven't found any. I have seen old enterprise switches with many 10G SFP+ ports, but I imagine they are all loud and overkill for me.
