I'm not new to Proxmox, but I am new to ZFS.

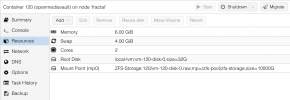

At the moment I have Proxmox installed on a 1TB NVMe drive. I have a few VMs on there, each with a 50GB - 100GB disk (enough space for them to run OK).

I also have 7 old-ish 2TB HDs in my PC/server case, and I'm thinking about creating a ZFS pool from them to use as a large pool of storage that the VMs and other computers could share (shared media files, a few backups, random files, etc.).

Is this a good idea? (Creating a large pool of shared storage from the 2TB disks) And if so, how would I create the share from the pool?

I've seen tutorials on creating ZFS pools on Proxmox, but haven't seen how to create a large pool of storage that could be shared with VMs and other computers, maybe via Samba/CIFS?
