Thank you Tamanok. You mentioned that you were manually applying pinning for a few customers. How are you pinning the host processes?

Hi Pharpe,

Please read my detailed post above, which answers your questions. TL;DR: no, it doesn't prevent other processes from using the pinned cores, and no, you likely can't prevent that without computer-science-level knowledge. We're asking the Proxmox team to consider making this a feature; maybe an expert software engineer will swing by and drop us a script, or point out a kernel-level feature that we're missing.

Cheers,

Tmanok

CPU pinning?

- Thread starter Jamie

Hello

I'm running a VM with PCIe passthrough to an NVIDIA Quadro GPU, and I'm trying to set CPU and RAM affinity to the same NUMA node that hosts the GPU (NUMA node 0). It's my understanding that both the RAM and the CPUs should only be used on the NUMA node the GPU is attached to.

As I understand it, the hookscript in this thread only pins the VM to certain CPU cores, but I'm looking to pin the VM's CPUs and RAM to a single NUMA node, and let KVM handle the core scheduling and choosing.

Is there any news about the patch in this thread? Could anyone give instructions on how to apply it?

According to this Intel guide:

Automatic NUMA Balancing vs. Manual NUMA Configuration

Automatic NUMA Balancing is a Linux Kernel feature that tries to dynamically move application process closer to the memory they access. It is enabled by default. In virtualization environment, automatic NUMA balancing is usually preferrable if there is no passthrough device assigned to the Virtual Machine (VM). Otherwise, manual NUMA specification may achieve better performance. This is because the VM memory is usually pinned by VFIO driver when there is passthrough devices assigned to the VM, thus VM memory cannot be moved across NUMA nodes.

Specify NUMA Node to Run VM

Using numactl can specify which NUMA node we want to run a VM.

Its option “--membind=<node>” or “-m <node>” will specify to only allocate memory for the VM from specified <node>.

Option “--cpunodebind=<node>” or “-N <node>” will specify to only execute the VM on the CPUs of node <node>

The following example runs a virtual machine at NUMA node 0:

# numactl -N 0 -m 0 qemu-system-x86_64 --enable-kvm -M q35 -smp 4 -m 4G -hda vm.img -vnc :2

So what would be the best way to use numactl instead of taskset? Or is cpuset comparable? Or could I use some 'qm set --numa' argument in the vmid .conf file?
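Before binding anything, it helps to confirm which NUMA node the passthrough device actually sits on. A minimal sketch, assuming the kernel exposes the device's `numa_node` attribute in sysfs: the function names `pci_numa_node` and `numactl_prefix` are made up for illustration, and the PCI address in the usage line is only an example.

```bash
#!/bin/bash

# Print the NUMA node a PCI device is attached to, as reported by sysfs.
# $1: PCI address, e.g. 0000:02:00.0
# $2: sysfs PCI device directory (optional; only overridden for testing)
pci_numa_node() {
    local addr="$1"
    local root="${2:-/sys/bus/pci/devices}"
    cat "$root/$addr/numa_node"
}

# Print a numactl prefix that binds both CPUs and memory to one node.
# sysfs reports -1 when the platform gives no locality information;
# in that case nothing is printed and the function fails.
numactl_prefix() {
    local node="$1"
    [ "$node" -ge 0 ] 2>/dev/null || return 1
    printf 'numactl --cpunodebind=%s --membind=%s\n' "$node" "$node"
}
```

Something like `numactl_prefix "$(pci_numa_node 0000:02:00.0)"` would then print the prefix to put in front of a manually launched qemu command, as in the Intel example. How to get Proxmox itself to launch the VM that way is a separate question that this sketch does not answer.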

I've gotten as far as extracting 6 different diffs from those posts. However, being quite new to this, it seems that the patches can't be applied to an existing system, only to the sources. Seems I'll have to give up for now.

It would be nice to be able to set this in the CPU settings for a VM, but in the meantime I found this:

https://github.com/ayufan/pve-helpers

It allows you to assign cpu cores to a VM, as well as set irq affinity using the 'Notes' area for a VM.

Edit: based on preliminary testing with a USB port assigned to the VM, it seems that setting IRQ affinity for the VM is needed; a USB soundcard would not work for me without 'assign_interrupts --all' from pve-helpers.

I currently have 'cpu_taskset 29-40 assign_interrupts --all' for a VM with 6 cores, giving it 12 cores to choose from on one of the two 14-core Xeon CPUs on the host. I'm still wondering about the RAM, though; that needs further testing.

However, an option to set IRQ affinity for a VM seems to be needed.

For the better part of a year and a half I have been using a variation of the hookscript above. Each VM is setup with the hookscript and a separate file that defines it's CPU set.

This was really annoying so, I wrote a patch and replied to the bug tracker which adds the

Additionally, the patch adds an

In order to acheive the desired result, where one VM has dedicated cores, all vms must have an affinity set. Other host processes, such as zfs for example, can still compete with the VMs for cpu cycles, but as Tmanok said above, that's a more complicated problem. I attempted to figure out a way to prevent proxmox from scheduling any process on a set of cores, but didn't end up getting anywhere with it. I believe that there are IO specific processes that need to be run across cores. (Not a kernel developer)

I got a reply on the mailing list this week saying that the patch has been applied. So, I am anticipating when I can see it in a release and I can finally get rid of this hook script.

This was really annoying so, I wrote a patch and replied to the bug tracker which adds the

affinity value to the <vmid>.conf file. The affinity value follows the linux cpuset List Format. For example, to pin a vm to a certain set of cores, one would add affinity: 0,3-6,9 to the <vmid>.conf file. If the value is set, the VM's parent process will be launched with the taskset command and the requested affinity. If the value is not set, then taskset will not be used to launch the VM's parent process.man cpuset | grep "List format" -A 8

Code:

List format

The List Format for cpus and mems is a comma-separated list of CPU or memory-node numbers and ranges of numbers, in ASCII

decimal.

Examples of the List Format:

0-4,9 # bits 0, 1, 2, 3, 4, and 9 set

0-2,7,12-14 # bits 0, 1, 2, 7, 12, 13, and 14 setAdditionally, the patch adds an

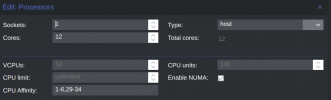

affinity input box to the Edit: Processors page for a vm. This way, we can easily edit the affinity for each vm.In order to acheive the desired result, where one VM has dedicated cores, all vms must have an affinity set. Other host processes, such as zfs for example, can still compete with the VMs for cpu cycles, but as Tmanok said above, that's a more complicated problem. I attempted to figure out a way to prevent proxmox from scheduling any process on a set of cores, but didn't end up getting anywhere with it. I believe that there are IO specific processes that need to be run across cores. (Not a kernel developer)

I got a reply on the mailing list this week saying that the patch has been applied. So, I am anticipating when I can see it in a release and I can finally get rid of this hook script.
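To make the List Format concrete, here is a small sketch that expands a value such as 0,3-6,9 into the individual CPU numbers it selects. The function name `expand_cpu_list` is invented for this example and is not part of the patch. It only handles plain numbers and ranges, as in the man page excerpt above.

```bash
#!/bin/bash

# Expand a cpuset List Format string (e.g. "0,3-6,9") into a
# space-separated list of CPU numbers. Fails on malformed input.
expand_cpu_list() {
    printf '%s\n' "$1" | awk -F, '
    NF == 0 { exit 1 }
    {
        out = ""
        for (i = 1; i <= NF; i++) {
            if ($i ~ /^[0-9]+$/) {
                lo = hi = $i + 0              # single CPU number
            } else if ($i ~ /^[0-9]+-[0-9]+$/) {
                split($i, r, "-")             # range "lo-hi"
                lo = r[1] + 0; hi = r[2] + 0
            } else {
                exit 1                        # anything else is rejected
            }
            if (lo > hi) exit 1
            for (c = lo; c <= hi; c++)
                out = out (out == "" ? "" : " ") c
        }
        print out
    }'
}

expand_cpu_list "0,3-6,9"
```

A check like this is handy for validating an affinity value before handing it to taskset --cpu-list.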

Edit2: to add to my questions below, I'm not sure that taskset is the correct tool to use for this issue at all. It seems to me that at the beginning of this thread the taskset tool was chosen without much consideration. From the taskset manpage:

taskset is used to set or retrieve the CPU affinity of a running process given its pid, or to launch a new command with a given CPU affinity. CPU affinity is a scheduler property that "bonds" a process to a given set of CPUs on the system. The Linux scheduler will honor the given CPU affinity and the process will not run on any other CPUs. Note that the Linux scheduler also supports natural CPU affinity: the scheduler attempts to keep processes on the same CPU as long as practical for performance reasons. Therefore, forcing a specific CPU affinity is useful only in certain applications.

And from the numactl manpage:

numactl runs processes with a specific NUMA scheduling or memory placement policy. The policy is set for command and inherited by all of its children. In addition it can set persistent policy for shared memory segments or files.

So I ask: are you sure that you should spend time on taskset at all? It was never designed for this, whereas numactl is.

My initial comments are below:

Hello. I'm not any kind of developer, so I have very little understanding of all this, but after playing around with pve-helpers I am left with a few questions:

The scripts in this thread all use taskset to pin a VM to CPU cores, but the Intel guide mentions using numactl --membind=<node> and --cpunodebind=<node> to bind the VM's RAM and CPU threads to a specific NUMA node instead of a set of selected cores.

Afaik none of the scripts in this thread care about binding the VM process to the RAM of a selected NUMA node, which is the actual purpose of Non-Uniform Memory Access.

I've done some preliminary benchmarks in a VM with passthrough to an NVIDIA P620 GPU, with and without CPU pinning and IRQ affinities, and found no difference in performance at all. As a matter of fact, the VM was slightly faster without pinning and IRQ affinity.

These were basic benchmarks though: I ran PassMark PerformanceTest Linux free edition for a general benchmark, and the excellent Unigine Heaven for graphics.

Just for reference, here is the output of a VM starting with the pve-helpers hookscript. IMO it's currently the cleanest solution, because you can set the options per VM in the VM description field, so there's no need to edit scripts or change the actual Proxmox code in any way, at least until there is an official solution.

Looking forward to testing your solution @Dot, but I'd really like to figure out how to test those Intel-recommended numactl options as well; they just seem a bit more sane than the taskset ones.

EDIT: Best practices for KVM on NUMA servers talks about numactl and numad; it's on my to-read list. It also mentions some memory-heavy benchmarks to test with.

Code:

Running exec-cmds for 103 on pre-start...

Running exec-cmds for 103 on post-start...

Running taskset with 29-40 for 477440...

pid 477440's current affinity list: 0-55

pid 477440's new affinity list: 29-40

pid 477441's current affinity list: 0-55

pid 477441's new affinity list: 29-40

pid 477442's current affinity list: 0-55

pid 477442's new affinity list: 29-40

pid 477605's current affinity list: 0-55

pid 477605's new affinity list: 29-40

pid 477606's current affinity list: 0-55

pid 477606's new affinity list: 29-40

pid 477607's current affinity list: 0-55

pid 477607's new affinity list: 29-40

pid 477608's current affinity list: 0-55

pid 477608's new affinity list: 29-40

pid 477609's current affinity list: 0-55

pid 477609's new affinity list: 29-40

pid 477610's current affinity list: 0-55

pid 477610's new affinity list: 29-40

pid 477611's current affinity list: 0-55

pid 477611's new affinity list: 29-40

pid 477612's current affinity list: 0-55

pid 477612's new affinity list: 29-40

pid 477613's current affinity list: 0-55

pid 477613's new affinity list: 29-40

pid 477614's current affinity list: 0-55

pid 477614's new affinity list: 29-40

pid 477615's current affinity list: 0-55

pid 477615's new affinity list: 29-40

pid 477616's current affinity list: 0-55

pid 477616's new affinity list: 29-40

pid 477617's current affinity list: 0-55

pid 477617's new affinity list: 29-40

pid 477770's current affinity list: 0-55

pid 477770's new affinity list: 29-40

Wating 10s seconds for all vfio-gpu interrupts to show up...

Moving 0000:02:00 interrupts to 29-40 cpu cores 103...

- IRQ: 26: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 IR-IO-APIC 8-fasteoi vfio-intx(0000:02:00.0)

- IRQ: 148: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 IR-IO-APIC 12-fasteoi vfio-intx(0000:02:00.1)

Moving 0000:02:00 interrupts to 29-40 cpu cores 103...

- IRQ: 26: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 IR-IO-APIC 8-fasteoi vfio-intx(0000:02:00.0)

- IRQ: 148: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 IR-IO-APIC 12-fasteoi vfio-intx(0000:02:00.1)

TASK OK
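For anyone curious what the interrupt step in that log boils down to, here is a rough sketch of the idea. It is not the pve-helpers implementation; the function names are hypothetical. It relies only on the standard /proc/interrupts listing and the /proc/irq/<n>/smp_affinity_list files.

```bash
#!/bin/bash

# Print the IRQ numbers whose /proc/interrupts line mentions a device.
# $1: substring to match, e.g. a PCI address prefix such as 0000:02:00
# $2: interrupts file (optional; only overridden for testing)
irqs_for_device() {
    awk -v dev="$1" '
    index($0, dev) { irq = $1; sub(/:$/, "", irq); print irq }
    ' "${2:-/proc/interrupts}"
}

# Restrict one IRQ to a CPU list by writing smp_affinity_list (needs root).
# $1: IRQ number, $2: CPU list such as 29-40
# $3: /proc/irq directory (optional; only overridden for testing)
set_irq_affinity() {
    printf '%s\n' "$2" > "${3:-/proc/irq}/$1/smp_affinity_list"
}
```

Run as root, a loop such as `for irq in $(irqs_for_device 0000:02:00); do set_irq_affinity "$irq" 29-40; done` would move the matching interrupts to the pinned cores. Some IRQs refuse affinity changes, in which case the write simply fails.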

So, I ran several tests.

TLDR:

As far as this testing of CPU pinning goes, numactl can do most of what taskset can do and more. One of those additional features offers better NUMA memory latency but is a double-edged sword for servers whose NUMA nodes are out of memory. If you only want to pin a VM to cores in a single NUMA domain, taskset works just as well as numactl. However, numactl isn't installed by default. If/when numactl becomes one of the default utilities installed on PVE, I would consider making a patch to switch from taskset to numactl. In order to utilize the memory binding feature of numactl, a GUI switch would need to be added as well, something to the effect of "Restrict VM Memory to Numa Nodes".

Test Rig:

TR 3975WX, 32c/64t, 8 NUMA domains

Code:

root@pve-01:~# numactl -H

available: 8 nodes (0-7)

node 0 cpus: 0 1 2 3 32 33 34 35

node 0 size: 64295 MB

node 0 free: 61465 MB

node 1 cpus: 4 5 6 7 36 37 38 39

node 1 size: 64508 MB

node 1 free: 62149 MB

node 2 cpus: 8 9 10 11 40 41 42 43

node 2 size: 64508 MB

node 2 free: 62778 MB

node 3 cpus: 12 13 14 15 44 45 46 47

node 3 size: 64508 MB

node 3 free: 62351 MB

node 4 cpus: 16 17 18 19 48 49 50 51

node 4 size: 64508 MB

node 4 free: 61332 MB

node 5 cpus: 20 21 22 23 52 53 54 55

node 5 size: 64473 MB

node 5 free: 62232 MB

node 6 cpus: 24 25 26 27 56 57 58 59

node 6 size: 64508 MB

node 6 free: 62483 MB

node 7 cpus: 28 29 30 31 60 61 62 63

node 7 size: 64488 MB

node 7 free: 61570 MB

node distances:

node 0 1 2 3 4 5 6 7

0: 10 11 11 11 11 11 11 11

1: 11 10 11 11 11 11 11 11

2: 11 11 10 11 11 11 11 11

3: 11 11 11 10 11 11 11 11

4: 11 11 11 11 10 11 11 11

5: 11 11 11 11 11 10 11 11

6: 11 11 11 11 11 11 10 11

7: 11 11 11 11 11 11 11 10

*Each numa domain has 64G of memory. This is important.
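When picking cores for taskset or numactl -C it is easy to lose track of which node a given CPU number belongs to. A small sketch that answers that from numactl -H output follows. The helper name `node_of_cpu` is made up, and it assumes the "node N cpus: ..." line layout shown above.

```bash
#!/bin/bash

# Read `numactl -H` output on stdin and print the NUMA node that owns CPU $1.
# Fails if the CPU does not appear in any "node N cpus:" line.
node_of_cpu() {
    awk -v cpu="$1" '
    $1 == "node" && $3 == "cpus:" {
        for (i = 4; i <= NF; i++)
            if ($i == cpu) { print $2; found = 1; exit }
    }
    END { exit !found }'
}
```

Usage would be along the lines of `numactl -H | node_of_cpu 16`.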

Test Script:

hold_mem.sh

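Bash:

#!/bin/bash

# Amount of memory to hold, in head -c notation (e.g. 96000m).
MEM=$1

# tail buffers its input while it waits for a newline that never comes, which is what keeps the memory allocated; pv shows progress.
</dev/zero head -c "$MEM" | pv | tail

The script will write zeros to memory, allocating it to the process.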

To allocate 96G of memory:

./hold_mem.sh 96000m

This table shows the commands used to launch the script.

Scenario | Command |

01 | ./hold_mem.sh 32000m |

02 | ./hold_mem.sh 96000m |

03 | taskset --cpu-list --all-tasks 16-19 ./hold_mem.sh 32000m |

04 | taskset --cpu-list --all-tasks 16-19 ./hold_mem.sh 96000m |

05 | taskset --cpu-list --all-tasks 8-11,16-19 ./hold_mem.sh 96000m |

06 | numactl -C +16-19 ./hold_mem.sh 32000m |

07 | numactl -C +16-19 ./hold_mem.sh 96000m |

08 | numactl -C +8-11,16-19 ./hold_mem.sh 96000m |

09 | numactl -C +16-19 --membind=4 ./hold_mem.sh 32000m |

10 | numactl -C +16-19 --membind=4 ./hold_mem.sh 96000m |

11 | numactl -C +8-11,16-19 --membind=2,4 ./hold_mem.sh 96000m |

The second table shows the properties of each scenario.

Scenario | Allocated Memory | Pinning | Numa Nodes | Cores |

01 | 32G | None | | |

02 | 96G | None | | |

03 | 32G | taskset | 4 | 16-19 |

04 | 96G | taskset | 4 | 16-19 |

05 | 96G | taskset | 2,4 | 8-11,16-19 |

06 | 32G | numactl | 4 | 16-19 |

07 | 96G | numactl | 4 | 16-19 |

08 | 96G | numactl | 2,4 | 8-11,16-19 |

09 | 32G | numactl w/ membind | 4 | 16-19 |

10 | 96G | numactl w/ membind | 4 | 16-19 |

11 | 96G | numactl w/ membind | 2,4 | 8-11,16-19 |

The third table tracks which numa node the memory was allocated to.

For example, in Scenario 04, we allocated 96000m of memory. The memory from 0-64GB was allocated on NUMA node 4, and the memory from 64-96GB was allocated on NUMA node 5 (N + 1).

Scenario | Node 0-64GB | Node 64-96GB |

01 | Any | N/A |

02 | Any | N + 1 |

03 | 4 | N/A |

04 | 4 | N + 1 |

05 | 2 or 4 | N + 1 |

06 | 4 | N/A |

07 | 4 | N + 1 |

08 | 2 or 4 | N + 1 |

09 | 4 | N/A |

10 | 4 | FAILED |

11 | 2 or 4 | 2 or 4 (Not N) |

I ran each a few times, because, as you can see, some of these are not deterministic.

When we start a process, the scheduler puts it on a core and starts allocating memory. Both taskset and numactl act as a shim to ensure the scheduler puts our next process on the correct cores. Furthermore, numactl has the ability to lock the allocation of memory to particular numa node memory pools.

1. In situations when you want to pin a VM to a SINGLE NUMA node, there's no major problem with either taskset or numactl. Both will allocate the memory in the correct NUMA node. However, numactl can force the memory to be bound to that NUMA node, failing if that node does not have enough memory. This is the first concern: if we membind with numactl and go over the memory available on that node by even the slightest amount, we will end up killing the process.

2. In situations when you want to pin a VM to MULTIPLE NUMA nodes, there is a problem. With both taskset and numactl, the process starts on an effectively random core from the cpuset, and memory is first allocated from the memory pool of the NUMA node that the process landed on. If we have 8 NUMA nodes, and we choose cores from NUMA nodes 2 and 4, there are two possible scenarios.

A. The process lands on 4, and memory gets allocated from the memory pools of NUMA nodes 4 and 5.

B. The process lands on 2, and memory gets allocated from the memory pools of NUMA nodes 2 and 3.

Neither of these is desirable. Even when we put the NUMA nodes next to each other, there's only a 50% chance that we will allocate memory from both the pools we want.

However, numactl has a way around this. Numactl has the membind parameter, which forces the process into the memory pools of a set of defined NUMA nodes, e.g. membind=2,4. With this, there is a 100% chance that the process will pull memory from the correct NUMA node pools. This is also a double-edged sword: if there is not enough memory in those pools to satisfy the process, the process will be terminated.
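Since membind fails hard when the chosen pools are too small, one could check the free memory on those nodes before launching. This is only a sketch: the helper name `nodes_have_free_mb` is hypothetical, it parses the "node N free: X MB" lines of numactl -H, and the free figures are a snapshot that can change before the process has finished allocating.

```bash
#!/bin/bash

# Read `numactl -H` output on stdin. Succeed only if the nodes listed in $1
# (comma-separated, e.g. "2,4") together report at least $2 MB free.
nodes_have_free_mb() {
    awk -v nodes="$1" -v need="$2" '
    BEGIN {
        n = split(nodes, list, ",")
        for (i = 1; i <= n; i++) want[list[i]] = 1
    }
    # Sum the "free" figure of every requested node.
    $1 == "node" && $3 == "free:" && ($2 in want) { total += $4 }
    END { exit !(total >= need) }'
}
```

For example, `numactl -H | nodes_have_free_mb 2,4 96000 && numactl -C +8-11,16-19 --membind=2,4 ./hold_mem.sh 96000m` would only attempt the bound launch when the two pools currently look large enough.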

--

As the TLDR says above, numactl seems to be able to do almost everything taskset can do. Numactl isn't installed by default, so that would need to change before numactl could be used for this. For binding a VM to a single NUMA node, taskset works just fine; it doesn't even kill the process if the NUMA node is out of memory. When binding a VM to multiple NUMA nodes without membind, there's a high probability of ending up with a big chunk of memory outside of the NUMA boundary.

--

Note: In my research, I found a few references saying that numactl cannot change the cpu cores that a running process is already bound to. I believe this to be the case, but I don't ultimately see that as a con.

Hi, again I must state I'm no expert, but a few things keep bothering me about this pinning stuff. Most of the people here that want to pin processes to certain cores need it for gaming setups, or to get maximum performance out of other PCIe devices such as storage controllers or NVMe disks.

But in that scenario, and on a single

Then about multiple NUMA nodes and the RAM: surely the VM would be set up with a certain amount of non-ballooning RAM that doesn't exceed the capacity of that NUMA node? If your NUMA node has 128GB of RAM, you would not set up a VM pinned to that node with numactl to use 256GB of RAM and then complain about RAM 'spilling over' to other nodes. That would be a user problem, not a downside of numactl.

Then again, there is the point of all this. Pinning a gaming or desktop VM with passthrough to PCIe hardware to the same NUMA node the PCIe lanes are connected to, in order to get maximum performance and minimum latency, is a reasonable scenario. Same with pinning that VM to the local RAM of that node. But that is basically where the reasons to do it end.

In an enterprise scenario, where reliability, performance and/or latency is critical, well, those applications should be run on bare metal, not in QEMU.

I just feel this pinning stuff is mostly futile, because the actual gain of doing it, versus the complexity and added fragility of the system, is mostly limited to gaming VMs or high-performance desktop VMs with PCIe passthrough. Personally I'm interested in pinning my desktop VMs with passthrough to GPU cards to the same NUMA node and RAM those PCIe lanes are routed to, but not to specific cores.

Also, in some initial benchmarks I found no difference in performance without pinning. It's not like the inter-NUMA-node links (QuickPath Interconnect, or QPI, on Intel, I think) are some complete heap of trash that runs at a snail's pace.

Then lastly, about IRQ affinity: I would imagine that 'natural affinity' would direct the IRQs from PCIe cards to the same node the VM is pinned to using numactl? Or why would they go over QPI to other nodes?

Just my thoughts

My TR 3975WX is used for 4 gaming VMs and 6 other server VMs. I usually use this server in UMA mode, not NUMA; I switched to NUMA for the above tests. I pin each gaming VM to its own set of CCDs. Each core has its own L1 and L2 cache, and each CCD has its own 16MB L3 cache shared by its 4 cores. The first 2 VMs get 2 CCDs each and the second 2 VMs get 1 CCD each. This leaves 2 CCDs for other, non-real-time VMs. Pinning lets me make intelligent decisions about how I divide up my hardware.

Why do I pin my VMs? Microstuttering.

When I do not pin my gaming VMs, I experience micro stuttering in games. I suspect this is a result of a combination of cpu cycle competition, context switching, or L1-L3 cache misses. I don't know the exact reason, but pinning the VMs stops the micro stuttering I experience. This has happened across multiple servers for me. Pinning VMs has become a standard part of my server management.

So did the micro stuttering stop with numactl pinning as well? My aim is to find out whether pinning cores is really necessary, versus pinning NUMA nodes, in order to simplify setups and avoid having to "count and manage cores".

Also, once again, I'm an amateur in this. What I'd like to know is how to add numactl --membind=<node> and --cpunodebind=<node> to a vmid.conf file, so I could test. I also didn't know about the numactl -C option at all, and I have no idea about AMD CCDs, as I'm a low-grade Intel bottom feeder.
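Until there is a way to put numactl options into vmid.conf, the CPU half of node pinning can be approximated from outside the VM. This is only a rough Python sketch under stated assumptions: it reads the node's CPU list from sysfs and applies it to every thread of an already running process, like taskset with a whole node as the mask. It does not bind memory the way `numactl --membind` does, and the function names are hypothetical.

```python
import os

def parse_cpulist(text):
    """Expand a kernel CPU list such as '0-13,28-41' into a set of ints."""
    cpus = set()
    for part in text.strip().split(","):
        if not part:
            continue
        lo, _, hi = part.partition("-")
        cpus.update(range(int(lo), int(hi or lo) + 1))
    return cpus

def node_cpus(node):
    """Read the CPUs that belong to a host NUMA node from sysfs."""
    with open(f"/sys/devices/system/node/node{node}/cpulist") as f:
        return parse_cpulist(f.read())

def pin_process_to_node(pid, node):
    """Restrict every thread of pid to the CPUs of one NUMA node (CPU affinity only)."""
    cpus = node_cpus(node)
    for tid in os.listdir(f"/proc/{pid}/task"):
        os.sched_setaffinity(int(tid), cpus)
    return cpus

# Hypothetical usage, run as root on the host with the PID of the VM's QEMU process:
#   pin_process_to_node(481479, 0)
print(sorted(parse_cpulist("0-13,28-41")))
```

The kernel still chooses cores freely inside the node, which is the behaviour asked for here. Threads started after the call inherit the mask from the thread that creates them.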

But while waiting for official support for this in the Proxmox CPU setup, do you think it would be worth trying to fork the pve-helpers script and add the numactl options? I ask because setting taskset and numactl options in the VM summary notes field is really handy, with no script editing needed. That way it would be easier for people to start testing various setups.

Again, for example, I have one VM with passthrough to an Nvidia P620 card using taskset core pinning + IRQ affinity on one of two 14 core E5-2680v4's, by adding these lines to the notes field:

Code:

cpu_taskset 1-12

assign_interrupts --sleep=10s 1-12 --all

resulting in this startup log, easily viewable in the cluster log (note: scroll to the right to see the vfio-intx bindings). The two PCIe devices are the P620 and the Intel audio chip integrated on it.

Code:

Running exec-cmds for 100 on pre-start...

Running exec-cmds for 100 on post-start...

Running taskset with 1-12 for 481479...

pid 481479's current affinity list: 0-55

pid 481479's new affinity list: 1-12

pid 481480's current affinity list: 0-55

pid 481480's new affinity list: 1-12

pid 481481's current affinity list: 0-55

pid 481481's new affinity list: 1-12

pid 481482's current affinity list: 0-55

pid 481482's new affinity list: 1-12

pid 481634's current affinity list: 0-55

pid 481634's new affinity list: 1-12

pid 481635's current affinity list: 0-55

pid 481635's new affinity list: 1-12

pid 481636's current affinity list: 0-55

pid 481636's new affinity list: 1-12

pid 481637's current affinity list: 0-55

pid 481637's new affinity list: 1-12

pid 481638's current affinity list: 0-55

pid 481638's new affinity list: 1-12

pid 481639's current affinity list: 0-55

pid 481639's new affinity list: 1-12

pid 481640's current affinity list: 0-55

pid 481640's new affinity list: 1-12

pid 481641's current affinity list: 0-55

pid 481641's new affinity list: 1-12

pid 481642's current affinity list: 0-55

pid 481642's new affinity list: 1-12

pid 481643's current affinity list: 0-55

pid 481643's new affinity list: 1-12

pid 481644's current affinity list: 0-55

pid 481644's new affinity list: 1-12

pid 481645's current affinity list: 0-55

pid 481645's new affinity list: 1-12

pid 481646's current affinity list: 0-55

pid 481646's new affinity list: 1-12

pid 481782's current affinity list: 0-55

pid 481782's new affinity list: 1-12

Wating 10s seconds for all vfio-gpu interrupts to show up...

Moving 0000:04:00 interrupts to 1-12 cpu cores 100...

- IRQ: 29: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 IR-IO-APIC 16-fasteoi vfio-intx(0000:04:00.0)

- IRQ: 148: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 IR-IO-APIC 20-fasteoi vfio-intx(0000:04:00.1)

TASK OK
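To double-check that the interrupts really ended up on the intended cores, something like the following Python sketch could be used. It assumes the usual `/proc/interrupts` layout (IRQ number, per-CPU counters, then the handler name) and reads the standard `/proc/irq/<n>/smp_affinity_list` files; the sample lines are shortened versions of the two vfio-intx entries in the log above, and the function names are made up.

```python
import re

# Matches lines such as " 29:  0  0  IR-IO-APIC  16-fasteoi  vfio-intx(0000:04:00.0)"
VFIO_RE = re.compile(r"^\s*(\d+):.*?(vfio-[^\s(]+)\(([0-9a-fA-F:.]+)\)", re.M)

def vfio_irqs(interrupts_text):
    """Return {irq_number: pci_address} for vfio lines in /proc/interrupts text."""
    return {int(m.group(1)): m.group(3) for m in VFIO_RE.finditer(interrupts_text)}

def irq_affinity(irq):
    """Return the CPU list an IRQ is currently allowed to run on."""
    with open(f"/proc/irq/{irq}/smp_affinity_list") as f:
        return f.read().strip()

# Shortened sample with only two CPU columns, for illustration.
SAMPLE = (
    " 29:  0  0  IR-IO-APIC  16-fasteoi  vfio-intx(0000:04:00.0)\n"
    "148:  0  0  IR-IO-APIC  20-fasteoi  vfio-intx(0000:04:00.1)\n"
    " 30:  5  7  IR-PCI-MSI  524288-edge  nvme0q0\n"
)
print(vfio_irqs(SAMPLE))

# On a real host (not run here):
#   with open("/proc/interrupts") as f:
#       for irq, dev in vfio_irqs(f.read()).items():
#           print(irq, dev, irq_affinity(irq))
```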

I obviously need to do more research on this topic, but I've been trying to investigate why my VM (which I use as my desktop VM) experiences what I would describe as "micro-stuttering". I notice it more when the VM, or processes in other VMs (backup jobs, large network transfers, etc.), are doing more work. It manifests as small 'freezes' in the UI (I'm using a macOS VM).

My hardware is a threadripper 3975wx, and I have 256GB of memory installed. I have a couple of PCIE passthrough devices including two GPUs. Here is my current vm config for my workstation. https://clbin.com/yScEk. I don't have any of the VMs setup for NUMA or CPU affinity, but reading through this thread makes me think it might help. Any opinions or advice?

Well, as @Dot's patches have now been accepted, you can try pinning your GPU passthrough VM to certain cores. The main idea is to use the cores of the CPU that the PCIe lanes are connected to. I don't know how the AMD CPUs work, but you can install hwloc, which includes lstopo, which will show you where the PCIe lanes from your GPUs are routed. Then using numactl -H you can see which cores belong to which NUMA node (CPU socket). If pinning the cores doesn't help, then you might want to try setting IRQ affinity, the easiest way being pve-helpers, as described earlier in this thread. ATM I'm using CPU pinning through the Proxmox settings: from the 14 core/28 thread CPU that my PCIe lanes are routed to, I give a VM with GPU passthrough 12 cores (6 'real' cores and the 6 hyperthreads from those same cores), emulating a 6 core/12 thread CPU.

My lstopo output is:

Code:

# lstopo

Machine (126GB total)

Package L#0

NUMANode L#0 (P#0 63GB)

L3 L#0 (35MB)

L2 L#0 (256KB) + L1d L#0 (32KB) + L1i L#0 (32KB) + Core L#0

PU L#0 (P#0)

PU L#1 (P#28)

L2 L#1 (256KB) + L1d L#1 (32KB) + L1i L#1 (32KB) + Core L#1

PU L#2 (P#1)

PU L#3 (P#29)

L2 L#2 (256KB) + L1d L#2 (32KB) + L1i L#2 (32KB) + Core L#2

PU L#4 (P#2)

PU L#5 (P#30)

L2 L#3 (256KB) + L1d L#3 (32KB) + L1i L#3 (32KB) + Core L#3

PU L#6 (P#3)

PU L#7 (P#31)

L2 L#4 (256KB) + L1d L#4 (32KB) + L1i L#4 (32KB) + Core L#4

PU L#8 (P#4)

PU L#9 (P#32)

L2 L#5 (256KB) + L1d L#5 (32KB) + L1i L#5 (32KB) + Core L#5

PU L#10 (P#5)

PU L#11 (P#33)

L2 L#6 (256KB) + L1d L#6 (32KB) + L1i L#6 (32KB) + Core L#6

PU L#12 (P#6)

PU L#13 (P#34)

L2 L#7 (256KB) + L1d L#7 (32KB) + L1i L#7 (32KB) + Core L#7

PU L#14 (P#7)

PU L#15 (P#35)

L2 L#8 (256KB) + L1d L#8 (32KB) + L1i L#8 (32KB) + Core L#8

PU L#16 (P#8)

PU L#17 (P#36)

L2 L#9 (256KB) + L1d L#9 (32KB) + L1i L#9 (32KB) + Core L#9

PU L#18 (P#9)

PU L#19 (P#37)

L2 L#10 (256KB) + L1d L#10 (32KB) + L1i L#10 (32KB) + Core L#10

PU L#20 (P#10)

PU L#21 (P#38)

L2 L#11 (256KB) + L1d L#11 (32KB) + L1i L#11 (32KB) + Core L#11

PU L#22 (P#11)

PU L#23 (P#39)

L2 L#12 (256KB) + L1d L#12 (32KB) + L1i L#12 (32KB) + Core L#12

PU L#24 (P#12)

PU L#25 (P#40)

L2 L#13 (256KB) + L1d L#13 (32KB) + L1i L#13 (32KB) + Core L#13

PU L#26 (P#13)

PU L#27 (P#41)

HostBridge

PCIBridge

PCI 01:00.0 (NVMExp)

Block(Disk) "nvme0n1"

PCIBridge

PCI 02:00.0 (VGA)

PCIBridge

PCI 03:00.0 (Ethernet)

Net "ens6f0np0"

PCI 03:00.1 (Ethernet)

Net "ens6f1np1"

4 x { PCI 03:00.2-7 (Ethernet) }

PCIBridge

PCI 04:00.0 (VGA)

PCI 00:11.4 (SATA)

Block(Disk) "sda"

PCIBridge

PCI 06:00.0 (Ethernet)

Net "enp6s0"

PCIBridge

PCI 07:00.0 (Ethernet)

Net "enp7s0"

PCIBridge

PCIBridge

PCI 09:00.0 (VGA)

PCI 00:1f.2 (SATA)

Block(Disk) "sdd"

Block(Disk) "sdb"

Block(Disk) "sde"

Block(Disk) "sdc"

Package L#1

NUMANode L#1 (P#1 63GB)

L3 L#1 (35MB)

L2 L#14 (256KB) + L1d L#14 (32KB) + L1i L#14 (32KB) + Core L#14

PU L#28 (P#14)

PU L#29 (P#42)

L2 L#15 (256KB) + L1d L#15 (32KB) + L1i L#15 (32KB) + Core L#15

PU L#30 (P#15)

PU L#31 (P#43)

L2 L#16 (256KB) + L1d L#16 (32KB) + L1i L#16 (32KB) + Core L#16

PU L#32 (P#16)

PU L#33 (P#44)

L2 L#17 (256KB) + L1d L#17 (32KB) + L1i L#17 (32KB) + Core L#17

PU L#34 (P#17)

PU L#35 (P#45)

L2 L#18 (256KB) + L1d L#18 (32KB) + L1i L#18 (32KB) + Core L#18

PU L#36 (P#18)

PU L#37 (P#46)

L2 L#19 (256KB) + L1d L#19 (32KB) + L1i L#19 (32KB) + Core L#19

PU L#38 (P#19)

PU L#39 (P#47)

L2 L#20 (256KB) + L1d L#20 (32KB) + L1i L#20 (32KB) + Core L#20

PU L#40 (P#20)

PU L#41 (P#48)

L2 L#21 (256KB) + L1d L#21 (32KB) + L1i L#21 (32KB) + Core L#21

PU L#42 (P#21)

PU L#43 (P#49)

L2 L#22 (256KB) + L1d L#22 (32KB) + L1i L#22 (32KB) + Core L#22

PU L#44 (P#22)

PU L#45 (P#50)

L2 L#23 (256KB) + L1d L#23 (32KB) + L1i L#23 (32KB) + Core L#23

PU L#46 (P#23)

PU L#47 (P#51)

L2 L#24 (256KB) + L1d L#24 (32KB) + L1i L#24 (32KB) + Core L#24

PU L#48 (P#24)

PU L#49 (P#52)

L2 L#25 (256KB) + L1d L#25 (32KB) + L1i L#25 (32KB) + Core L#25

PU L#50 (P#25)

PU L#51 (P#53)

L2 L#26 (256KB) + L1d L#26 (32KB) + L1i L#26 (32KB) + Core L#26

PU L#52 (P#26)

PU L#53 (P#54)

L2 L#27 (256KB) + L1d L#27 (32KB) + L1i L#27 (32KB) + Core L#27

PU L#54 (P#27)

PU L#55 (P#55)

My numactl -H output is:

Code:

# numactl -H

available: 2 nodes (0-1)

node 0 cpus: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 28 29 30 31 32 33 34 35 36 37 38 39 40 41

node 0 size: 64304 MB

node 0 free: 32954 MB

node 1 cpus: 14 15 16 17 18 19 20 21 22 23 24 25 26 27 42 43 44 45 46 47 48 49 50 51 52 53 54 55

node 1 size: 64502 MB

node 1 free: 59635 MB

node distances:

node 0 1

0: 10 21

1: 21 10

Then, to see which cores are real cores and which are hyperthreads, I use lscpu -e, as per the guide from pve-helpers:

Code:

# lscpu -e

CPU NODE SOCKET CORE L1d:L1i:L2:L3 ONLINE MAXMHZ MINMHZ

0 0 0 0 0:0:0:0 yes 3300.0000 1200.0000

1 0 0 1 1:1:1:0 yes 3300.0000 1200.0000

2 0 0 2 2:2:2:0 yes 3300.0000 1200.0000

3 0 0 3 3:3:3:0 yes 3300.0000 1200.0000

4 0 0 4 4:4:4:0 yes 3300.0000 1200.0000

5 0 0 5 5:5:5:0 yes 3300.0000 1200.0000

6 0 0 6 6:6:6:0 yes 3300.0000 1200.0000

7 0 0 7 7:7:7:0 yes 3300.0000 1200.0000

8 0 0 8 8:8:8:0 yes 3300.0000 1200.0000

9 0 0 9 9:9:9:0 yes 3300.0000 1200.0000

10 0 0 10 10:10:10:0 yes 3300.0000 1200.0000

11 0 0 11 11:11:11:0 yes 3300.0000 1200.0000

12 0 0 12 12:12:12:0 yes 3300.0000 1200.0000

13 0 0 13 13:13:13:0 yes 3300.0000 1200.0000

14 1 1 14 14:14:14:1 yes 3300.0000 1200.0000

15 1 1 15 15:15:15:1 yes 3300.0000 1200.0000

16 1 1 16 16:16:16:1 yes 3300.0000 1200.0000

17 1 1 17 17:17:17:1 yes 3300.0000 1200.0000

18 1 1 18 18:18:18:1 yes 3300.0000 1200.0000

19 1 1 19 19:19:19:1 yes 3300.0000 1200.0000

20 1 1 20 20:20:20:1 yes 3300.0000 1200.0000

21 1 1 21 21:21:21:1 yes 3300.0000 1200.0000

22 1 1 22 22:22:22:1 yes 3300.0000 1200.0000

23 1 1 23 23:23:23:1 yes 3300.0000 1200.0000

24 1 1 24 24:24:24:1 yes 3300.0000 1200.0000

25 1 1 25 25:25:25:1 yes 3300.0000 1200.0000

26 1 1 26 26:26:26:1 yes 3300.0000 1200.0000

27 1 1 27 27:27:27:1 yes 3300.0000 1200.0000

28 0 0 0 0:0:0:0 yes 3300.0000 1200.0000

29 0 0 1 1:1:1:0 yes 3300.0000 1200.0000

30 0 0 2 2:2:2:0 yes 3300.0000 1200.0000

31 0 0 3 3:3:3:0 yes 3300.0000 1200.0000

32 0 0 4 4:4:4:0 yes 3300.0000 1200.0000

33 0 0 5 5:5:5:0 yes 3300.0000 1200.0000

34 0 0 6 6:6:6:0 yes 3300.0000 1200.0000

35 0 0 7 7:7:7:0 yes 3300.0000 1200.0000

36 0 0 8 8:8:8:0 yes 3300.0000 1200.0000

37 0 0 9 9:9:9:0 yes 3300.0000 1200.0000

38 0 0 10 10:10:10:0 yes 3300.0000 1200.0000

39 0 0 11 11:11:11:0 yes 3300.0000 1200.0000

40 0 0 12 12:12:12:0 yes 3300.0000 1200.0000

41 0 0 13 13:13:13:0 yes 3300.0000 1200.0000

42 1 1 14 14:14:14:1 yes 3300.0000 1200.0000

43 1 1 15 15:15:15:1 yes 3300.0000 1200.0000

44 1 1 16 16:16:16:1 yes 3300.0000 1200.0000

45 1 1 17 17:17:17:1 yes 3300.0000 1200.0000

46 1 1 18 18:18:18:1 yes 3300.0000 1200.0000

47 1 1 19 19:19:19:1 yes 3300.0000 1200.0000

48 1 1 20 20:20:20:1 yes 3300.0000 1200.0000

49 1 1 21 21:21:21:1 yes 3300.0000 1200.0000

50 1 1 22 22:22:22:1 yes 3300.0000 1200.0000

51 1 1 23 23:23:23:1 yes 3300.0000 1200.0000

52 1 1 24 24:24:24:1 yes 3300.0000 1200.0000

53 1 1 25 25:25:25:1 yes 3300.0000 1200.0000

54 1 1 26 26:26:26:1 yes 3300.0000 1200.0000

55 1 1 27 27:27:27:1 yes 3300.0000 1200.0000

Based on the above outputs, in the Proxmox CPU settings I give the VM 6 cores and their 6 hyperthreads (CPUs 1-6 and 29-34) from the CPU in NUMA node 0, which the PCIe lanes are routed to.

If you want to try IRQ affinity, the same CPU pinning using pve-helpers, including IRQ affinity, would be:

Code:

cpu_taskset 1,2,3,4,5,6,29,30,31,32,33,34

assign_interrupts --sleep=10s 1,2,3,4,5,6,29,30,31,32,33,34 --all

Or alternatively, just give it 12 real cores:

Code:

cpu_taskset 1-12

assign_interrupts --sleep=10s 1-12 --all

pve-helpers has additional notes about AMD hardware. Read the instructions on the GitHub page on how to add the hookscript to Proxmox.

All you can do is test these and see if any of them help.
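Picking "N cores plus their hyperthreads" by hand from lscpu -e is easy to get wrong, so here is a small Python sketch of that selection. It assumes the column layout shown in the lscpu -e output above (CPU, NODE and CORE columns present) and, mirroring the choice of CPUs 1-6 above, skips the first core of the node and leaves it to the host. The function names and the `skip` parameter are made up for illustration.

```python
# A shortened `lscpu -e` table in the same format as the output posted above.
SAMPLE = """\
CPU NODE SOCKET CORE L1d:L1i:L2:L3 ONLINE MAXMHZ MINMHZ
0 0 0 0 0:0:0:0 yes 3300.0000 1200.0000
1 0 0 1 1:1:1:0 yes 3300.0000 1200.0000
2 0 0 2 2:2:2:0 yes 3300.0000 1200.0000
14 1 1 14 14:14:14:1 yes 3300.0000 1200.0000
28 0 0 0 0:0:0:0 yes 3300.0000 1200.0000
29 0 0 1 1:1:1:0 yes 3300.0000 1200.0000
30 0 0 2 2:2:2:0 yes 3300.0000 1200.0000
"""

def threads_by_core(lscpu_text):
    """Map (node, core) -> sorted list of logical CPUs, from `lscpu -e` text."""
    lines = lscpu_text.strip().splitlines()
    header = lines[0].split()
    cpu_i, node_i, core_i = (header.index(c) for c in ("CPU", "NODE", "CORE"))
    cores = {}
    for line in lines[1:]:
        cols = line.split()
        key = (int(cols[node_i]), int(cols[core_i]))
        cores.setdefault(key, []).append(int(cols[cpu_i]))
    return {key: sorted(cpus) for key, cpus in cores.items()}

def pick_cpus(lscpu_text, node, n_cores, skip=1):
    """Pick n_cores physical cores on `node`, skipping the first `skip`, plus siblings."""
    cores = threads_by_core(lscpu_text)
    chosen = sorted(c for (n, c) in cores if n == node)[skip:skip + n_cores]
    cpus = sorted(cpu for c in chosen for cpu in cores[(node, c)])
    return ",".join(map(str, cpus))

print(pick_cpus(SAMPLE, node=0, n_cores=2))  # 1,2,29,30
```

Run against the full table above with `n_cores=6`, the same logic yields the 1,2,3,4,5,6,29,30,31,32,33,34 list used in the pve-helpers example.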

I know I'm repeating myself, but IMO adding numactl options to simply bind a VM to a NUMA node, and to the RAM attached to that node, would be a simpler alternative, as from what I understand the Linux kernel is very good at choosing which cores to use automatically. I believe there's also 'natural' IRQ affinity.

Maybe in the future. Adding numactl to the default Proxmox installer should not be a big deal; it's standard in the Debian repos.

My 14th gen i7 has Performance-cores (8) and Efficient-cores (12). Is there a way to see which ones I'm pinning? Is it like the performance cores are 0-7 and then 8-19 are the efficient cores?

thanks for clarification

I have no idea, but you could start with numactl -H.
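One possible way to tell the two core types apart, offered as a heuristic rather than a confirmed method: on hybrid Intel CPUs with Hyper-Threading enabled, the P-cores expose two logical CPUs per core and the E-cores only one, so grouping the `lscpu -e` rows by the CORE column should separate them. The sample table in this Python sketch is made up for illustration and is not taken from a real 14th gen system.

```python
from collections import defaultdict

# Made-up `lscpu -e` style table: two cores with 2 threads each, two cores with 1 thread.
SAMPLE = """\
CPU NODE SOCKET CORE ONLINE
0 0 0 0 yes
1 0 0 0 yes
2 0 0 1 yes
3 0 0 1 yes
4 0 0 2 yes
5 0 0 3 yes
"""

def split_hybrid(lscpu_text):
    """Return (p_cpus, e_cpus), guessing core type from the thread count per core."""
    lines = lscpu_text.strip().splitlines()
    header = lines[0].split()
    cpu_i, core_i = header.index("CPU"), header.index("CORE")
    by_core = defaultdict(list)
    for line in lines[1:]:
        cols = line.split()
        by_core[int(cols[core_i])].append(int(cols[cpu_i]))
    # Assumption: cores with more than one logical CPU are P-cores, the rest E-cores.
    p_cpus = sorted(cpu for cpus in by_core.values() if len(cpus) > 1 for cpu in cpus)
    e_cpus = sorted(cpu for cpus in by_core.values() if len(cpus) == 1 for cpu in cpus)
    return p_cpus, e_cpus

print(split_hybrid(SAMPLE))  # ([0, 1, 2, 3], [4, 5])
```

If Hyper-Threading is disabled in the BIOS the heuristic cannot tell the types apart. Comparing the MAXMHZ column of `lscpu -e` may help in that case, since the two core types usually report different maximum clocks.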

For anyone trying to dedicate specific cores to a VM while preventing the host from using them, here's what I did (with success) for my virtualized OPNsense setup.

I used @t.lamprecht's hookscript for assigning the VM to specific cores, in my case 2,3. I have 4 cores total, and decided to keep 0 and 1 for the host, while dedicating 2 and 3 to the OPNsense VM. It works great.

Now, to ensure the host doesn't try to use those VM cores for anything, I used the kernel boot parameter `isolcpus=2,3`, which I added to GRUB_CMDLINE_LINUX_DEFAULT in /etc/default/grub. Then I ran `update-grub` and rebooted.

I did some stress testing with htop open on the PVE host to monitor usage of the CPU cores. When I run `openssl speed -multi 4` on the host, only cores 0 and 1 are hot, while when I run the same command inside the VM only cores 2 and 3 are hot.

I hope this helps someone looking for a similar outcome.
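After the reboot it can be worth confirming that the parameter actually reached the kernel. Here is a minimal Python sketch, assuming the plain `isolcpus=<cpu list>` form used above (optionally preceded by flags such as `domain` or `managed_irq`); the function name is made up for illustration.

```python
def isolated_from_cmdline(cmdline):
    """Return the set of CPUs named by isolcpus= in a kernel command line."""
    for arg in cmdline.split():
        if not arg.startswith("isolcpus="):
            continue
        cpus = set()
        for part in arg[len("isolcpus="):].split(","):
            # Skip non-numeric flags such as 'domain' or 'managed_irq'.
            if part and part[0].isdigit():
                lo, _, hi = part.partition("-")
                cpus.update(range(int(lo), int(hi or lo) + 1))
        return cpus
    return set()

print(sorted(isolated_from_cmdline("ro quiet isolcpus=2,3")))  # [2, 3]

# On the host itself (not run here), the live values can be compared with:
#   open("/proc/cmdline").read()                     -> what the kernel booted with
#   open("/sys/devices/system/cpu/isolated").read()  -> what the kernel reports as isolated
```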
