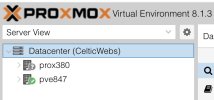

I'm having trouble getting corosync to start, and as a result this node can't connect to the other one.

Diagnostics so far

I've tested the basics, such as pinging one node from the other, and that works fine.

Output of `journalctl -xeu pve-cluster.service`:

    Jan 15 18:15:49 pve847 pmxcfs[122430]: [quorum] crit: quorum_initialize failed: 2
    Jan 15 18:15:49 pve847 pmxcfs[122430]: [confdb] crit: cmap_initialize failed: 2
    Jan 15 18:15:49 pve847 pmxcfs[122430]: [dcdb] crit: cpg_initialize failed: 2
    Jan 15 18:15:49 pve847 pmxcfs[122430]: [status] crit: cpg_initialize failed: 2
    Jan 15 18:15:55 pve847 pmxcfs[122430]: [quorum] crit: quorum_initialize failed: 2
    Jan 15 18:15:55 pve847 pmxcfs[122430]: [confdb] crit: cmap_initialize failed: 2
    Jan 15 18:15:55 pve847 pmxcfs[122430]: [dcdb] crit: cpg_initialize failed: 2
    Jan 15 18:15:55 pve847 pmxcfs[122430]: [status] crit: cpg_initialize failed: 2
    Jan 15 18:16:01 pve847 pmxcfs[122430]: [quorum] crit: quorum_initialize failed: 2
    Jan 15 18:16:01 pve847 pmxcfs[122430]: [confdb] crit: cmap_initialize failed: 2
    Jan 15 18:16:01 pve847 pmxcfs[122430]: [dcdb] crit: cpg_initialize failed: 2
    Jan 15 18:16:01 pve847 pmxcfs[122430]: [status] crit: cpg_initialize failed: 2
    Jan 15 18:16:07 pve847 pmxcfs[122430]: [quorum] crit: quorum_initialize failed: 2
    Jan 15 18:16:07 pve847 pmxcfs[122430]: [confdb] crit: cmap_initialize failed: 2
    Jan 15 18:16:07 pve847 pmxcfs[122430]: [dcdb] crit: cpg_initialize failed: 2
    Jan 15 18:16:07 pve847 pmxcfs[122430]: [status] crit: cpg_initialize failed: 2
    Jan 15 18:16:13 pve847 pmxcfs[122430]: [quorum] crit: quorum_initialize failed: 2
    Jan 15 18:16:13 pve847 pmxcfs[122430]: [confdb] crit: cmap_initialize failed: 2
    Jan 15 18:16:13 pve847 pmxcfs[122430]: [dcdb] crit: cpg_initialize failed: 2
    Jan 15 18:16:13 pve847 pmxcfs[122430]: [status] crit: cpg_initialize failed: 2
    Jan 15 18:16:19 pve847 pmxcfs[122430]: [quorum] crit: quorum_initialize failed: 2
    Jan 15 18:16:19 pve847 pmxcfs[122430]: [confdb] crit: cmap_initialize failed: 2
    Jan 15 18:16:19 pve847 pmxcfs[122430]: [dcdb] crit: cpg_initialize failed: 2
    Jan 15 18:16:19 pve847 pmxcfs[122430]: [status] crit: cpg_initialize failed: 2
    Jan 15 18:16:25 pve847 pmxcfs[122430]: [quorum] crit: quorum_initialize failed: 2
    Jan 15 18:16:25 pve847 pmxcfs[122430]: [confdb] crit: cmap_initialize failed: 2
    Jan 15 18:16:25 pve847 pmxcfs[122430]: [dcdb] crit: cpg_initialize failed: 2
    Jan 15 18:16:25 pve847 pmxcfs[122430]: [status] crit: cpg_initialize failed: 2
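The same four failures repeat every 6 seconds. To make that pattern easier to read, here is a minimal Python sketch that tallies these pmxcfs lines by subsystem and call. It assumes the trailing number is a corosync `cs_error_t` value, where 1 is `CS_OK` and 2 is `CS_ERR_LIBRARY`. Only those two values are mapped, and `summarize` is a hypothetical helper name. `CS_ERR_LIBRARY` is commonly reported when the client libraries cannot talk to a running corosync daemon, which would fit all four services failing together.

```python
import re
from collections import Counter

# Assumption: the trailing number in lines such as
# "[quorum] crit: quorum_initialize failed: 2" is a corosync cs_error_t value.
# Only two values are mapped here; anything else is reported as unknown.
CS_ERRORS = {
    1: "CS_OK",
    2: "CS_ERR_LIBRARY",
}

# Captures the subsystem tag, the failing call and the numeric error code.
LINE_RE = re.compile(r"pmxcfs\[\d+\]: \[(\w+)\] crit: (\w+) failed: (\d+)")


def summarize(journal_text: str) -> Counter:
    """Count (subsystem, call, error name) triples in pmxcfs journal output."""
    counts: Counter = Counter()
    for line in journal_text.splitlines():
        m = LINE_RE.search(line)
        if m is None:
            continue  # not a pmxcfs "failed: N" line
        subsystem, call, code = m.group(1), m.group(2), int(m.group(3))
        name = CS_ERRORS.get(code, f"unknown({code})")
        counts[(subsystem, call, name)] += 1
    return counts
```

You could pass it the text of `journalctl -u pve-cluster.service --no-pager` and print the resulting counter. For the excerpt above, every entry maps to `CS_ERR_LIBRARY`.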

Output of `cat /etc/pve/corosync.conf`:

    logging {
      debug: off
      to_syslog: yes
    }

    nodelist {
      node {
        name: prox380
        nodeid: 1
        quorum_votes: 1
        ring0_addr: xxx.xxx.xxx.xxx
      }
      node {
        name: pve847
        nodeid: 2
        quorum_votes: 1
        ring0_addr: xxx.xxx.xxx.xxx
      }
    }

    quorum {
      provider: corosync_votequorum
    }

    totem {
      cluster_name: CelticWebs
      config_version: 2
      interface {
        linknumber: 0
      }
      ip_version: ipv4-6
      link_mode: passive
      secauth: on
      version: 2
    }
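The configuration uses a simple nested `name { key: value }` block format, so a rough consistency check can be scripted. The sketch below uses a simplified parser written for illustration. It is not corosync's own parser and ignores edge cases such as quoted values. `parse_corosync_conf` and `check_nodelist` are hypothetical names. The script verifies that node IDs and `ring0_addr` values are unique and that the local node name appears in the nodelist. Because the addresses above are redacted as `xxx.xxx.xxx.xxx`, the check is only meaningful against the real file.

```python
def parse_corosync_conf(text: str) -> dict:
    """Parse brace-delimited corosync.conf text into nested dicts.

    Every section name maps to a list of dicts, so repeated sections
    (such as several `node { }` blocks) are all kept. Simplified sketch:
    assumes one `key: value`, `name {` or `}` per line and strips `#` comments.
    """
    root: dict = {}
    stack = [root]
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        if line.endswith("{"):
            name = line[:-1].strip()
            child: dict = {}
            stack[-1].setdefault(name, []).append(child)
            stack.append(child)
        elif line == "}":
            stack.pop()
        else:
            # partition() splits on the first colon only, so IPv6 values survive.
            key, _, value = line.partition(":")
            stack[-1][key.strip()] = value.strip()
    return root


def check_nodelist(conf: dict, local_name: str) -> list[str]:
    """Return human-readable problems found in the nodelist section."""
    problems = []
    nodes = conf["nodelist"][0]["node"]
    ids = [n.get("nodeid") for n in nodes]
    if len(set(ids)) != len(ids):
        problems.append("duplicate nodeid")
    addrs = [n.get("ring0_addr") for n in nodes]
    if len(set(addrs)) != len(addrs):
        problems.append("duplicate ring0_addr")
    if local_name not in {n.get("name") for n in nodes}:
        problems.append(f"{local_name} not in nodelist")
    return problems
```

Usage would be along the lines of `check_nodelist(parse_corosync_conf(open("/etc/pve/corosync.conf").read()), "pve847")`, run on each node with that node's own name. An empty list means none of these particular checks failed. It does not prove the configuration is correct.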

Output of `pveversion -v`:

    proxmox-ve: 8.1.0 (running kernel: 6.5.11-7-pve)
    pve-manager: 8.1.3 (running version: 8.1.3/b46aac3b42da5d15)
    proxmox-kernel-helper: 8.1.0
    proxmox-kernel-6.5: 6.5.11-7
    proxmox-kernel-6.5.11-7-pve-signed: 6.5.11-7
    proxmox-kernel-6.5.11-4-pve-signed: 6.5.11-4
    ceph-fuse: 17.2.7-pve1
    corosync: 3.1.7-pve3
    criu: 3.17.1-2
    glusterfs-client: 10.3-5
    ifupdown2: 3.2.0-1+pmx8
    ksm-control-daemon: 1.4-1
    libjs-extjs: 7.0.0-4
    libknet1: 1.28-pve1
    libproxmox-acme-perl: 1.5.0
    libproxmox-backup-qemu0: 1.4.1
    libproxmox-rs-perl: 0.3.3
    libpve-access-control: 8.0.7
    libpve-apiclient-perl: 3.3.1
    libpve-common-perl: 8.1.0
    libpve-guest-common-perl: 5.0.6
    libpve-http-server-perl: 5.0.5
    libpve-network-perl: 0.9.5
    libpve-rs-perl: 0.8.7
    libpve-storage-perl: 8.0.5
    libspice-server1: 0.15.1-1
    lvm2: 2.03.16-2
    lxc-pve: 5.0.2-4
    lxcfs: 5.0.3-pve4
    novnc-pve: 1.4.0-3
    proxmox-backup-client: 3.1.2-1
    proxmox-backup-file-restore: 3.1.2-1
    proxmox-kernel-helper: 8.1.0
    proxmox-mail-forward: 0.2.2
    proxmox-mini-journalreader: 1.4.0
    proxmox-offline-mirror-helper: 0.6.3
    proxmox-widget-toolkit: 4.1.3
    pve-cluster: 8.0.5
    pve-container: 5.0.8
    pve-docs: 8.1.3
    pve-edk2-firmware: 4.2023.08-2
    pve-firewall: 5.0.3
    pve-firmware: 3.9-1
    pve-ha-manager: 4.0.3
    pve-i18n: 3.1.5
    pve-qemu-kvm: 8.1.2-6
    pve-xtermjs: 5.3.0-3
    qemu-server: 8.0.10
    smartmontools: 7.3-pve1
    spiceterm: 3.3.0
    swtpm: 0.8.0+pve1
    vncterm: 1.8.0
    zfsutils-linux: 2.2.2-pve1
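One thing this list allows is a side-by-side comparison with the other node, for example of `corosync`, `libknet1` and `pve-cluster`. The following is a minimal sketch that assumes the `pveversion -v` output from each node has been saved as text. It maps package names to version strings and reports any that differ or exist on only one side. The helper names are hypothetical. Only the first token after the colon is kept, so trailers such as `(running kernel: ...)` are dropped.

```python
def parse_pveversion(text: str) -> dict[str, str]:
    """Map package name -> version string from `pveversion -v` output."""
    versions = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if not sep or not rest.strip():
            continue  # skip lines that are not "package: version"
        # Keep only the version token; drop trailers like "(running kernel: ...)".
        versions[name.strip()] = rest.split()[0]
    return versions


def diff_versions(a: dict[str, str], b: dict[str, str]) -> dict[str, tuple]:
    """Return {package: (version_a, version_b)} for every package that differs.

    A package missing on one side shows up with None for that side.
    """
    return {
        pkg: (a.get(pkg), b.get(pkg))
        for pkg in sorted(a.keys() | b.keys())
        if a.get(pkg) != b.get(pkg)
    }
```

An empty result from `diff_versions(parse_pveversion(node_a_text), parse_pveversion(node_b_text))` means both nodes report identical package versions.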

Output of `pvecm status`:

    Cluster information
    -------------------
    Name: CelticWebs
    Config Version: 2
    Transport: knet
    Secure auth: on
    Cannot initialize CMAP service

I can't see any obvious reason why it won't start and I'm at a total loss. Any ideas?