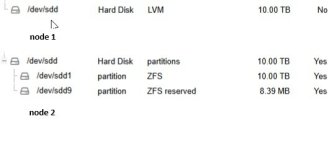

So I have added a 2nd node to my Proxmox cluster. I wanted to use "replicate" and discovered I needed ZFS for that... bump.

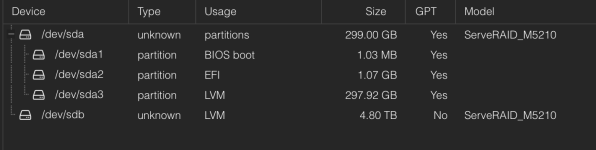

So I have been crawling this forum and Google and found no clear answer. Without reinstalling the entire host, is it possible to convert local-lvm to ZFS?

What is the best way to get to a two-node cluster (2x Intel NUC8) with ZFS on the NVMe drives?