Hello everybody ...

I need some help.

I will explain my environment.

I have a DELL ME5012 storage array with 2 controllers and 4 fiber channel ports.

They are configured like this:

Controller A: Port 1 -> IP 172.16.0.1/24

Controller A: Port 3 -> IP 172.16.2.1/24

Controller B: Port 1 -> IP 172.16.1.1/24

Controller B: Port 3 -> IP 172.16.3.1/24

The storage only has a single 7TB LUN.

I have 2 DELL Hosts with 2 fiber channel ports each.

Host A (lion): -> IP 172.16.0.2/24

Host A (lion): -> IP 172.16.1.2/24

Host B (pantrho): -> IP 172.16.2.2/24

Host B (pantrho): -> IP 172.16.3.2/24

The cables are connected directly from the servers to the storage, and the ping tests work.
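
To make the wiring easier to follow, here is a small Python sketch of the addressing above. It is only an illustration of the layout, not something that runs on the hosts; the dictionary names and the `reachable_ports` helper are made up for this example. It lists which storage ports share a subnet with each host.

```python
import ipaddress

# Addresses copied from the lists above; the dictionary layout is illustrative only.
storage_ports = {
    "Controller A, Port 1": "172.16.0.1/24",
    "Controller A, Port 3": "172.16.2.1/24",
    "Controller B, Port 1": "172.16.1.1/24",
    "Controller B, Port 3": "172.16.3.1/24",
}
host_ports = {
    "lion": ["172.16.0.2/24", "172.16.1.2/24"],
    "pantrho": ["172.16.2.2/24", "172.16.3.2/24"],
}


def reachable_ports(host):
    """Return the storage ports that share a subnet with one of the host's ports."""
    host_nets = {ipaddress.ip_interface(addr).network for addr in host_ports[host]}
    return sorted(
        name
        for name, addr in storage_ports.items()
        if ipaddress.ip_interface(addr).network in host_nets
    )


for host in host_ports:
    print(host, "->", reachable_ports(host))
```

So each host sees one port on Controller A and one on Controller B, and the two hosts do not share any storage port or subnet.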

I set up a cluster with Hosts A and B.

On Host A I created the cluster, and on Host B I joined it.

Then I added the LUN on the respective hosts:

Host A: STORAGE_0.1 and STORAGE_1.1

Host B: STORAGE_2.1 and STORAGE_3.1

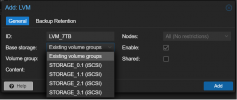

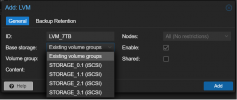

Then I created an LVM storage to use for my virtual machines. For Base Storage, I chose EXISTING VOLUME GROUPS.

Host A connects, but Host B always shows a ? on the volume, and I can't migrate machine 100 from Host A to Host B.

What is the correct way to set up this environment?

Thank you.

PS: Sorry about my English.
