Hi,

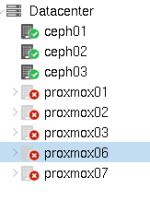

we updated our Ceph nodes from Jewel to Luminous. After the update the cluster seems to have a problem (see screenshot), though on console it seems fine:

Alle nodes are running the latest proxmox 4.4, the 3 ceph nodes are updated to ceph Luminous (https://pve.proxmox.com/wiki/Ceph_Jewel_to_Luminous)

we updated our Ceph nodes from Jewel to Luminous. After the update the cluster seems to have a problem (see screenshot), though on console it seems fine:

Code:

# pvecm status

Quorum information

------------------

Date: Wed Dec 27 10:32:56 2017

Quorum provider: corosync_votequorum

Nodes: 8

Node ID: 0x00000003

Ring ID: 1/24060

Quorate: Yes

Votequorum information

----------------------

Expected votes: 8

Highest expected: 8

Total votes: 8

Quorum: 5

Flags: Quorate

Membership information

----------------------

Nodeid Votes Name

0x00000001 1 10.1.4.1

0x00000002 1 10.1.4.2

0x00000003 1 10.1.4.3 (local)

0x00000008 1 10.1.4.101

0x00000006 1 10.1.4.102

0x00000007 1 10.1.4.103

0x00000004 1 10.1.4.106

0x00000005 1 10.1.4.107

Code:

# ceph -s

cluster:

id: 835fa7e9-f9ef-459e-a949-b6cfe76b9dd8

health: HEALTH_WARN

too many PGs per OSD (256 > max 200)

services:

mon: 3 daemons, quorum 0,1,2

mgr: ceph01(active), standbys: ceph03, ceph02

osd: 12 osds: 12 up, 12 in

data:

pools: 1 pools, 1024 pgs

objects: 927k objects, 3677 GB

usage: 10922 GB used, 33768 GB / 44690 GB avail

pgs: 1022 active+clean

2 active+clean+scrubbing+deep

io:

client: 70813 B/s rd, 3332 kB/s wr, 4 op/s rd, 296 op/s wr

Code:

root@ceph02:~# ceph osd versions

{

"ceph version 12.2.2 (cf0baeeeeba3b47f9427c6c97e2144b094b7e5ba) luminous (stable)": 12

}

root@ceph02:~# ceph mon versions

{

"ceph version 12.2.2 (cf0baeeeeba3b47f9427c6c97e2144b094b7e5ba) luminous (stable)": 3

}Alle nodes are running the latest proxmox 4.4, the 3 ceph nodes are updated to ceph Luminous (https://pve.proxmox.com/wiki/Ceph_Jewel_to_Luminous)