Hi,

I have a small project with three nodes, each with 64 GB of RAM.

I use GlusterFS on top of ZFS as the network filesystem, on dedicated 10 Gb NICs, with another 10 Gb NIC for Corosync (plus a third 10 Gb NIC for internet access).

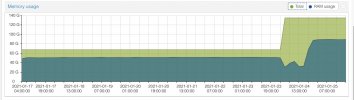

I know that ZFS uses 4 to 8 GB of RAM, but far more RAM than that is missing, and I don't know why.

For example, node one runs 4 VMs (KVM) that use 22 GB of RAM in total, but Proxmox reports 88% memory usage (55 GB).

That leaves 33 GB of RAM for Proxmox and ZFS, which seems like too much to me...
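To see how much of those 33 GB the ZFS ARC (its in-memory cache) is actually holding, one option is to read the kernel's ARC statistics. The sketch below assumes a Linux host with OpenZFS loaded, where `/proc/spl/kstat/zfs/arcstats` exposes a `name type data` table with `size` (current ARC size) and `c_max` (ARC upper limit) rows. The helper names are hypothetical, and the node-one figures (55 GB used, 22 GB for VMs) are simply treated as GiB.

```python
from pathlib import Path

GIB = 1024 ** 3
ARCSTATS = Path("/proc/spl/kstat/zfs/arcstats")  # assumed OpenZFS-on-Linux location


def parse_arcstats(text: str) -> dict[str, int]:
    """Parse the 'name type data' rows of an arcstats kstat dump into a dict."""
    stats: dict[str, int] = {}
    for line in text.splitlines():
        fields = line.split()
        # Data rows have exactly three columns with an integer value; this skips
        # the numeric kstat header line and the 'name type data' title line.
        if len(fields) == 3 and fields[2].lstrip("-").isdigit():
            stats[fields[0]] = int(fields[2])
    return stats


def unexplained_gib(used_gib: float, vm_gib: float) -> float:
    """Memory in use that the VMs alone do not account for."""
    return used_gib - vm_gib


if __name__ == "__main__":
    rest = unexplained_gib(55, 22)  # node-one figures from the post
    print(f"Not explained by VMs: {rest:.1f} GiB")
    if ARCSTATS.exists():
        stats = parse_arcstats(ARCSTATS.read_text())
        print(f"ZFS ARC size: {stats['size'] / GIB:.1f} GiB "
              f"(limit c_max: {stats['c_max'] / GIB:.1f} GiB)")
    else:
        print(f"{ARCSTATS} not found; is OpenZFS loaded on this host?")
```

If the reported ARC size is close to the unexplained amount, the memory is mostly ZFS cache rather than a leak.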

The other two nodes show pretty much the same situation...

Can you help me with this?

I have a little project with 3 nodes 64GB ram each

I use gluster on top ZFS as network filesystem on dedicated Nic's at 10GB ad another 10GB for corosynch (plus a third 10GB for internet access).

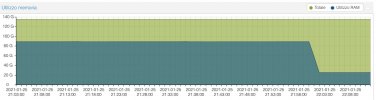

I know that ZFS use from 4 to 8GB of RAM, but the problem is that much more ram missing and I don't know why.

For example: on node one I have 4 VM (KVM) that uses in total 22GB of ram, but proxmox said that memory in use is 88% (55GB).

33GB of ram for proxmox and ZFS seems too much for me...

In the others 2 nodes the situation is pretty much the same...

Can you help me on this?

Attachments

Last edited: