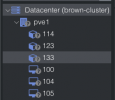

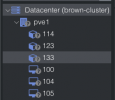

Since rebooting one node of my three-node cluster this morning, I'm seeing some strange behavior, in that one node (often, but not always, the one I rebooted) appears offline, like this:

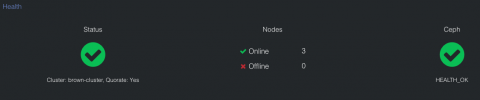

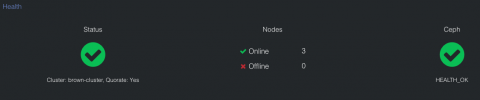

The node is up and running: I can SSH to it, and in this case I'm even logged into that node's web GUI. The datacenter summary looks good:

I can ping at least one of the VMs that should be (and apparently is) running on pve1. I can even click on a running VM and it will show its status, though CPU/memory reporting stopped about half an hour ago:

Where should I be looking to correct this?

Again, it's a three-node cluster running on three nearly identical nodes of a Dell PowerEdge C6220 (the only difference is the amount of RAM, 80+ GB), each with 2x Xeon E5-2680v2. `pveversion -v` on pve1 shows:

```
root@pve1:~# pveversion -v
proxmox-ve: 7.0-2 (running kernel: 5.11.22-3-pve)
pve-manager: 7.0-11 (running version: 7.0-11/63d82f4e)
pve-kernel-5.11: 7.0-7
pve-kernel-helper: 7.0-7
pve-kernel-5.4: 6.4-4
pve-kernel-5.11.22-4-pve: 5.11.22-8
pve-kernel-5.11.22-3-pve: 5.11.22-7
pve-kernel-5.4.124-1-pve: 5.4.124-1
pve-kernel-4.15: 5.3-3
pve-kernel-4.15.18-12-pve: 4.15.18-35
ceph: 16.2.5-pve1
ceph-fuse: 16.2.5-pve1
corosync: 3.1.2-pve2
criu: 3.15-1+pve-1
glusterfs-client: 9.2-1
ifupdown: residual config
ifupdown2: 3.1.0-1+pmx3
ksm-control-daemon: 1.4-1
libjs-extjs: 7.0.0-1
libknet1: 1.21-pve1
libproxmox-acme-perl: 1.3.0
libproxmox-backup-qemu0: 1.2.0-1
libpve-access-control: 7.0-4
libpve-apiclient-perl: 3.2-1
libpve-common-perl: 7.0-6
libpve-guest-common-perl: 4.0-2
libpve-http-server-perl: 4.0-2
libpve-storage-perl: 7.0-10
libqb0: 1.0.5-1
libspice-server1: 0.14.3-2.1
lvm2: 2.03.11-2.1
lxc-pve: 4.0.9-4
lxcfs: 4.0.8-pve2
novnc-pve: 1.2.0-3
proxmox-backup-client: 2.0.9-2
proxmox-backup-file-restore: 2.0.9-2
proxmox-mini-journalreader: 1.2-1
proxmox-widget-toolkit: 3.3-6
pve-cluster: 7.0-3
pve-container: 4.0-9
pve-docs: 7.0-5
pve-edk2-firmware: 3.20200531-1
pve-firewall: 4.2-2
pve-firmware: 3.3-1
pve-ha-manager: 3.3-1
pve-i18n: 2.4-1
pve-qemu-kvm: 6.0.0-3
pve-xtermjs: 4.12.0-1
qemu-server: 7.0-13
smartmontools: 7.2-pve2
spiceterm: 3.2-2
vncterm: 1.7-1
zfsutils-linux: 2.0.5-pve1
```

While I was typing, the same thing happened to pve3, which is the node I rebooted this morning (and have since rebooted a couple of times), but it reversed itself within a minute or so. pve1, however, is still in the state shown above.
