Hi all,

I've been scratching my head over a strange issue with a cluster.

I originally had two nodes in a cluster, and both were working fine:

node1

node2

These two nodes are not in the same subnet. Last night I added a new cluster member, node3, and since then I've observed some really strange behaviour.

node1 and node3 are in the same subnet.

This is the behaviour I see:

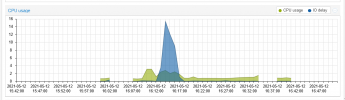

When node1, node2 and node3 are all on, I lose connectivity to node3 and the graphs don't update.

When node1 and node2 are on but node3 isn't, there are no issues.

When node1 and node3 are on but node2 isn't, I don't lose connectivity and the graphs update on all sides.

There seems to be a conflict between node2 and node3. I checked that they do not share an IP, and as far as I can see they have end-to-end IP connectivity. The known keys seem to be correct, and I also updated them with pvecm updatecerts.

Even with node2 up and running, if I shut down the cluster service I regain access to node3.

I had a look at the documentation, but it doesn't really point me to where to start digging. Any help would be much appreciated.